No More Pencils, No More Books

Artificially intelligent software is replacing the textbook—and reshaping American education.

Illustration by Natalie Matthews-Ramo

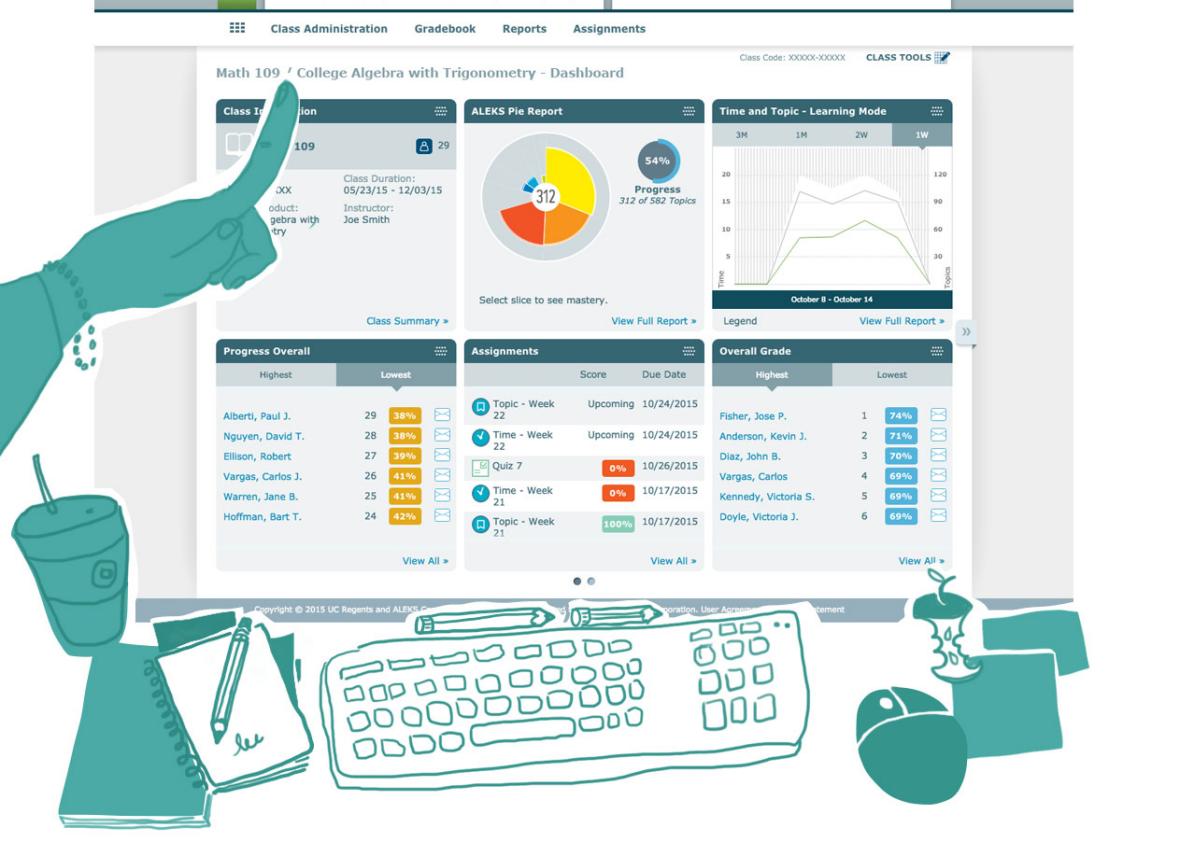

Eighteen students file into a brightly lit classroom. Arrayed around its perimeter are 18 computers. The students take their seats, log in to their machines, and silently begin working. At a desk in the back, the instructor’s screen displays a series of spreadsheets and data visualizations to help her track each student’s progress in real time.

This isn’t a Vulcan finishing school or a scene from some Back to the Future sequel. It’s Sheela Whelan’s pre-algebra class at Westchester Community College in Valhalla, New York.

The students in Whelan’s class are all using the same program, called ALEKS. But peek over their shoulders and you’ll see that each student is working on a different sort of problem. A young woman near the corner of the room is plugging her way through a basic linear equation. The young man to her left is trying to wrap his mind around a story problem involving fractions. Nearby, a more advanced student is simplifying equations that involve both variables and fractions.

At first glance, each student appears to be at a different point in the course. And that’s true, in one sense. But it’s more accurate to say that the course is literally different for each student.

Just a third of the way through the semester, a few of the most advanced students are nearly ready for the final exam. Others lag far behind. They’re all responsible for mastering the same concepts and skills. But the order in which they tackle them, and the pace at which they do so, is up to the artificially intelligent software that’s guiding them through the material and assessing their performance at every turn.

And how far we’ve come since the 1920s.

ALEKS starts everyone at the same point. But from the moment students begin to answer the practice questions that it automatically generates for them, ALEKS’ machine-learning algorithms are analyzing their responses to figure out which concepts they understand and which they don’t. A few wrong answers to a given type of question, and the program may prompt them to read some background materials, watch a short video lecture, or view some hints on what they might be doing wrong. But if they’re breezing through a set of questions on, say, linear inequalities, it may whisk them on to polynomials and factoring. Master that, and ALEKS will ask if they’re ready to take a test. Pass, and they’re on to exponents—unless they’d prefer to take a detour into a different topic, like data analysis and probability. So long as they’ve mastered the prerequisites, which topic comes next is up to them.
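To make the gating concrete, here is a minimal Python sketch of the rule that a topic opens up only once its prerequisites are mastered, loosely in the spirit of the knowledge-space idea behind ALEKS' name. The topic names and the prerequisite map are hypothetical, borrowed from the examples in the paragraph above. This is an illustration under those assumptions, not ALEKS' actual model, which covers far more topics and must also infer from a student's answers what she has mastered.

```python
# Hypothetical prerequisite map: each topic lists the topics that must be
# mastered before it becomes available. Not ALEKS' real topic structure.
PREREQUISITES: dict[str, set[str]] = {
    "linear equations": set(),
    "linear inequalities": {"linear equations"},
    "polynomials and factoring": {"linear inequalities"},
    "exponents": {"polynomials and factoring"},
    "data analysis and probability": {"linear equations"},
}


def ready_to_learn(mastered: set[str]) -> set[str]:
    """Return the topics not yet mastered whose prerequisites are all mastered."""
    return {
        topic
        for topic, prereqs in PREREQUISITES.items()
        if topic not in mastered and prereqs <= mastered  # <= is subset test
    }


# A student who has passed the first three topics may choose what comes next.
print(ready_to_learn({"linear equations", "linear inequalities",
                      "polynomials and factoring"}))
```

With those three topics mastered, the function offers both exponents and the detour into data analysis and probability, and leaves the choice between them to the student.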

Whelan, the instructor, does not lecture. What would be the point, when no two students are studying the same thing? Instead, she serves as a sort of roving tutor, moving from one student to the next as they call on her for help. A teaching assistant is also on call to help those who get stuck or to verify that they’re ready to take their next test. As the students work, the software logs everything from which questions they get right and wrong to the amount of time they spend on each one. When Whelan’s online dashboard tells her that several are struggling with the same concept, she’ll assemble those students and work through some problems as a small group. It’s teaching as triage.

The result is a classroom experience starkly different from the model that has dominated American education for the past 100 years. In a conventional classroom, an instructor stands behind a lectern or in front of a whiteboard and says the same thing at the same time to a roomful of very different individuals. Some have no idea what she’s talking about. Others, knowing the material cold, are bored. In the middle are a handful who are at just the right point in their progress for the lecture to strike them as both comprehensible and interesting. When the bell rings, the teacher sends them all home to read the same chapter of the same textbook.

Almost everyone who thinks seriously about education agrees that this paradigm—sometimes derided as “sage on a stage”—is flawed. They just can’t agree on what should replace it. Flipped classrooms? Massive open online courses? Hands-on, project-based learning?

While the thinkers are arguing, textbook publishers are acting. With their traditional business models under pressure, they’ve begun to reinvent themselves as educational technology companies. They’re selling schools and colleges on a new generation of digital courseware—ALEKS is just one example—that takes on much of the work that teachers used to do. The software isn’t meant to replace teachers, they insist. Rather, it’s meant to free them to focus on the sort of high-level, conceptual instruction that only a human can provide.

Whelan’s pre-algebra classroom is a glimpse into the near future—not only for community college math classes, but potentially for wide swaths of K-12 and higher education in subjects ranging from chemistry to Spanish to social studies. Software like ALEKS, according to its proponents, represents the early stages of a technological and pedagogical breakthrough decades in the making. It has the potential to transform education as we know it, and soon. Before that happens, though, it’s worth at least asking the question: Is that something we want?

Over the past 20 years, computers and the Internet have dramatically reshaped business, entertainment, and the media—but not education. The technology you’ll find in most classrooms today (textbooks, desks, pencils, pens) closely resembles what you would have found there a century ago. Maybe the blackboards have become whiteboards or projection screens. But the underlying structure has persisted since the days of Horace Mann.

Efforts to introduce personal computers into the equation have been well-funded, well-intended, and sometimes even successful. But the push to equip students with iPads and Chromebooks has also met resistance, and for good reason: In the context of the traditional classroom, Internet-connected devices risk distracting from the learning process more than they aid it. A famous 2003 Cornell University study found that students who were allowed to use their laptops during class recalled far less of the material than those who were denied access to computers. More broadly, a 40-country Organisation for Economic Co-operation and Development study recently found that the students who reported spending the most time on computers, both in class and at home, performed worse than their peers on a pair of standardized tests. And countries that have invested heavily in classroom technology have seen “no noticeable improvement” in students’ test scores. The OECD report concluded that “adding 21st-century technologies to 20th-century teaching practices will just dilute the effectiveness of teaching.”

Meanwhile, a much-hyped movement to “disrupt” higher education by offering college classes online for free has begun to fizzle. MOOCs were touted in TED talks and Wired cover stories as a disruptive force that would throw open the gates of Ivy League–quality instruction to anyone with an Internet connection. Yet in 2013, just as the hype was peaking, evidence began to mount that the courses were having something less than their desired effect. Students signed up in droves, but only a tiny fraction completed the courses. It’s too early to declare MOOCs dead. Surely they’ll evolve. But thoughtful educators have largely backed away from the notion that online classes are ready to replace those taught in person, the old-fashioned way. At this point, online lectures may be better viewed as an alternative to the textbook than as a replacement for the entire classroom.

That’s of little comfort, however, to the companies whose businesses are built on selling actual textbooks. The big publishers’ bottom lines had already taken a hit from the rise of online marketplaces like Amazon that make it easier than ever to buy used textbooks. Now they face added pressure to differentiate their pricey offerings from the perfectly serviceable free videos and course materials available on sites like Coursera and Khan Academy. At stake is an educational publishing industry that takes in an estimated $8 billion per year in sales revenue in the United States alone, according to figures provided by the Association of American Publishers.

David Levin, CEO of McGraw-Hill Education, tells me his company views all of this as an imperative to reinvent its core products. To retain its value, Levin says, the textbook of the 21st century can’t just be a multimedia reference source. It has to take a more active role in the educational process. It has to be interactive, comprehensive, and maybe even intelligent. It has to make students’ and teachers’ lives easier by automating things they’d otherwise do themselves. The smarter it gets, the more aspects of the educational experience it can automate—and the more incentive schools and teachers will have to adopt it.

ALEKS wasn’t originally designed to revive the fortunes of the textbook industry. The software—whose name stands for Assessment and Learning in Knowledge Spaces—was developed by a team of mathematicians, cognitive scientists, and software engineers at UC–Irvine in the 1990s, with support from a National Science Foundation grant. It was meant to elucidate and test a specific mathematical theory of how knowledge is constructed and how it can be described. But ALEKS’ creators weren’t content to cloister their work in the ivory tower. They formed a private company in 1996, and McGraw-Hill Education bought it in 2013. Now the former textbook giant and its investors are banking on ALEKS to become a profit machine as well as a teaching tool.

I say “former textbook giant” because it no longer thinks of itself as a textbook company. “We’re a learning science company,” Levin says. In fact, since being sold by parent company McGraw-Hill to a private-equity firm for $2.5 billion in 2013, McGraw-Hill Education is beginning to more closely resemble a technology company—as are, to varying degrees, its major rivals in the textbook business. These established players are being pushed by, and partnering with, Silicon Valley–backed ed-tech startups such as Knewton, whose adaptive learning platform is one of ALEKS’ top rivals.

McGraw-Hill Education still has its headquarters in a high-rise office building in Manhattan’s Penn Plaza. But these days the real action is at its research and development hub in Boston’s Innovation District, where the software developers who sit plugging away at machine-learning algorithms could be mistaken for employees of an early-stage tech startup. Overseeing McGraw-Hill Education’s digital operations is Stephen Laster, who worked alongside the “father of disruption,” Clayton Christensen, in a previous job as chief information and technology officer at Harvard Business School. Levin, the CEO, was brought on in April 2014, not because of his background in textbooks—he had none—but because of his experience running technology companies. His résumé includes a stint as CEO of Symbian Software, an early global leader in developing operating systems for mobile phones. Now McGraw-Hill Education’s products have begun to pop up at tech-industry confabs like the Consumer Electronics Show in Las Vegas.

It didn’t take long for McGraw-Hill Education and its rivals to grasp the appeal of a “digital-first” strategy. For decades, used textbooks have sapped the industry’s profits. College students save money by buying used books, checking them out at the library, or just Xeroxing their classmates’ books. All of which frustrates textbook companies, which respond by hiking prices. That boosts their revenue in the short term. But it also reinforces the vicious cycle, driving schools and students to find ever more creative ways to avoid buying new books.

Software is different: You can require an individual, nontransferable license for each student in every class, so that course materials can’t be resold or passed down from year to year. Since the marginal cost of a license is essentially zero, you can charge far less—say, $25 per student versus upward of $100 for a new print textbook. Course materials look cheaper, but because every student in every class must now buy a license, the textbook companies end up with far greater profits. Revenues from McGraw-Hill Education’s digital products jumped by 24 percent in 2014, and they now account for close to a third of its $2 billion in annual revenue.

McGraw-Hill Education’s transformation has dramatically altered its fortunes. This August, Apollo Global Management announced plans to take the company public in an offering that reportedly would value it at nearly $5 billion—more than double what it was worth just 2½ years ago.

The other large textbook publishers are busy developing interactive software of their own. Boston-based Houghton Mifflin Harcourt has an iPad-based math tool called Fuse whose interface can be tailored to each student’s “learning style”: more videos for visual learners, games for kinesthetic learners, and so forth. London-based Pearson has partnered with (and invested heavily in) Knewton, a venture capital–funded New York City startup whose adaptive learning platform plugs into the textbook companies’ course materials. In 2011, Knewton raised $33 million in a funding round that valued it at about $150 million. It’s now rumored to be worth several times that amount.

This fall Pearson introduced an “immersive digital learning tool” called Revel that reimagines the textbook as a multimedia experience replete with videos, interactive exercises, and animated infographics. The goal, Pearson’s Stephen Frail tells me, is to make the course materials “more compelling than Facebook,” which after all is only “one click away” whenever a student is doing coursework on her computer or tablet. To that end, Frail says, “We don’t want students to go too long without having to do something with the information they’re acquiring.” The mantra is: “Read a little, do a little.”

Public schools and universities, always under pressure to rein in costs and improve student performance, are proving especially receptive to the tech book pitch. They’re being spurred along by government programs like Race to the Top and influential nonprofits like the Bill and Melinda Gates Foundation, whose focus on educational standards and performance metrics dovetails with the software’s design. All the grant-giving and grant-writing has given rise to a raft of overlapping buzzwords, including “adaptive learning,” “personalized learning,” and “differentiated learning,” whose definitions are so amorphous that few can agree on them.

What’s clear is that digital platforms are catching on fast. A survey by the Book Industry Study Group, a trade group, found that 10 percent of college students in 2014 were taking at least one class that was run through an online platform. This year that figure jumped to 40 percent.

It’s tempting, for those who have spent their lives as educators, to dismiss the whole trend as the latest techno-fad, destined to distract administrators and upset curricula for a few years until the next one comes along. But there are two reasons why adaptive learning might prove more durable than that. The first is that the textbook companies have invested in it so heavily that there may be no going back. The second: It might, in at least some settings, really work.


A once-struggling middle school near Myrtle Beach, South Carolina, credits its embrace of ALEKS and other adaptive software platforms for boosting test scores, student engagement, and even teacher attendance. A STEM-focused charter school in Wisconsin saw similar gains and, according to Education Week, was so impressed with the technology that it redirected funding from a full-time educational position to buy more digital assessment materials.

Arizona State University has made adaptive software, most notably from Knewton and Pearson, the centerpiece of a dozen college courses over the past three years and is branching beyond math into disciplines like chemistry, psychology, and economics. (Disclosure: Slate partners with ASU and New America on Future Tense.) The university says its adaptive courses now enroll some 26,000 students annually. “We don’t have a failure yet,” says Adrian Sannier, whose official role at the university is chief academic technology officer. Incongruous as it sounds today, it’s a job title that could someday be as familiar on American campuses as dean of students.

But that’s hardly inevitable. The history of educational technology is littered with failed efforts to automate key aspects of the teaching process, from B.F. Skinner’s mechanical “teaching machines” in the 1950s to the e-learning craze of the first dot-com boom. Resistance to change runs deep in the teaching profession, and adoption of best practices is sluggish even in the rare instances where experts can agree on what those practices are. Adaptive software isn’t quite there yet. For all the isolated success stories, controlled studies of its efficacy over the years have yielded murkier results.

One of the oldest and most influential of the current crop of adaptive software programs is Carnegie Learning’s Cognitive Tutor for algebra, developed in the early 1990s at Carnegie Mellon University. Its efficacy has been closely studied over the years, and in some settings it has appeared to substantially boost students’ performance. Yet a 2010 federal review of the research found “no discernible effects” on high school students’ standardized test scores.

The picture was further complicated in 2013, when the RAND Corporation published the results of a massive, seven-year, $6 million controlled study involving 147 middle and high schools across seven states. The study compared students’ performance in traditional algebra classrooms with those in “blended” classrooms designed around the Cognitive Tutor software. The results at the middle school level were inconclusive. At the high school level, the study found no significant effect in the first year of the program’s implementation. Students who took the software-based course for a second year, however, improved their scores twice as much between the beginning and end of the year as those who remained in traditional classrooms.

An optimist looks at those results and concludes that properly implementing the technology simply requires an adjustment period on the part of the students, the teachers, or both. Do it right, and you’ll be rewarded with significant gains. The optimist would also be sure to point out that we’re still in the early days of developing both the technology and the pedagogy that surrounds it.

Count Carnegie Learning co-founder Ken Koedinger as a cautious optimist. Koedinger does not work for the company, which was sold for $75 million in 2011 to the Apollo Education Group, the education conglomerate whose holdings include the University of Phoenix chain of for-profit colleges. (It’s not affiliated with Apollo Global Management, the private-equity firm that bought McGraw-Hill Education.) Koedinger has remained at his post as a professor of human-computer interaction and psychology at Carnegie Mellon University, where he continues to develop and study adaptive software. “I think the potential is super,” Koedinger says. “I like to think of analogies to other places where science and technology have had an impact, like transportation. We went from walking to horse-drawn carriages to Model Ts, and now we have jet planes. So far in educational technology, we’re in the Model T stage.”

Peter Brusilovsky, a professor at the University of Pittsburgh and a pioneer in adaptive learning, says he’s seen (and conducted) plenty of studies in which the use of adaptive software led to higher test scores. When it doesn’t, he says, it could be because the software simply isn’t very good. Or it could be a function of the learning curve required of teachers and students when they try to implement a novel approach for the first time.

A pessimist looks at educational technology’s track record and sees something different: a long history of big promises and underwhelming results. “I think the claims made by many in ‘adaptive learning’ are really overblown,” says Audrey Watters, an education writer who has emerged as one of ed tech’s more vocal critics. “The research is quite mixed: Some shows there is really no effect when compared to traditional instruction; some shows a small effect. I’m not sure we can really argue it’s an effective way to improve education.”

Even ALEKS’ creators would admit the technology still has a long way to go. The best of today’s artificial intelligence can’t begin to approach the aptitude of a human teacher when it comes to relating to students, understanding their strengths and weaknesses, and delivering the instruction they need.

You can see this immediately in Whelan’s classroom at Westchester Community College. When student Spencer Dunnings, who was 18 at the time I visited the classroom last year, gets stuck on an equation, he follows ALEKS’ prompt to a page that attempts to break the problem down into its constituent parts. But the explanation itself is laden with terms that Dunnings doesn’t understand. “First, find the common multiple” is a good first step only if you already know what a common multiple is. In theory, Dunnings could click on the term to read a definition of it. But he’s already seen enough. “Ms. Whelan,” he calls. “I could use some help when you have a minute.”

Pulling up a chair next to him, Whelan watches him attempt the next problem and sees immediately where he’s going wrong.

Whelan: See where it says, “Simplify the expression?” Do you know what that means?

Dunnings: [Shakes his head no.]

Whelan: OK. Let’s see. … Ask me how old I am.

Dunnings: How old are you?

Whelan: I’m 60 minus six. But wouldn’t it just be simpler to say I’m 54?

Dunnings: Yeah.

Whelan: So that’s why, if we see “60 minus six,” we have to simplify the expression to 54.

The proverbial light flicks on. Dunnings simplifies his equation, and suddenly the rest of the problem looks more manageable.

In a class that revolves around computers and software, you might think that the software would do most of the teaching. On the contrary, the students in Whelan’s class seem to do most of their actual learning—in the sense of acquiring new concepts—during their brief bursts of personal interaction with their human tutors.

That seems like a serious problem for ALEKS. And if the software were intended to function as an all-in-one educational solution, it would be. But McGraw-Hill Education is adamant that isn’t the goal. Not anytime soon, at least.

“Unlike some younger tech startups, we don’t think the goal is to replace the teacher,” says Laster, the company’s chief digital officer. “We think education is inherently social, and that students need to learn from well-trained and well-versed teachers. But we also know that that time together, shoulder-to-shoulder, is more and more costly, and more and more precious.”

The role of the machine-learning software, in this view, is to automate all the aspects of the learning experience that can be automated, liberating the teacher to focus on what can’t.

But how much of the learning experience can really be automated? And what is lost when that happens?

In a small conference room in 2 Penn Plaza, McGraw-Hill Education’s imposing New York headquarters, a dashing Danish man stands at a whiteboard trying to answer exactly that question. His name is Ulrik Christensen, and his title is senior fellow of digital learning. While in medical school in Denmark, he started a company that ran medical simulations to help doctors learn and retain crucial information and skills. That got him interested in broader questions of how people learn, and he went on to found Area9, a startup that specialized in adaptive textbooks. McGraw-Hill Education partnered with Area9 to build its LearnSmart platform, then bought it in 2014.

How much you can automate, Christensen explains, depends on what you’re trying to teach. In a math class like Whelan’s, the educational objectives are relatively discrete and easily measured. Her students need to be able to correctly and consistently solve several types of problems, all of which have just one correct answer. If they can do that, they’ve mastered the material. If they can’t, they haven’t.

That makes ALEKS—a program focused on skills, practice, and mastery—a good fit. It also makes the software’s impact relatively easy to gauge.


At Westchester Community College, the pass rate for developmental pre-algebra is just 40 percent. But in computer-assisted sections of the same course, including Whelan’s, the average pass rate is 52 percent. And thanks to the individual pacing that ALEKS allows, a few students move up so quickly that they go on to pass a second computer-assisted math class in the same term. One caveat to the success rate in the computer-assisted classes, Whelan says, is that they have two instructors for just 18 students. In contrast, the college’s traditional math classes have student-to-faculty ratios that are more like 25 to 1.

But what happens when you have a class whose goals are less clear-cut—one where the questions it asks are something like, “What would Marshall McLuhan think of Twitter?”

At this point, McGraw-Hill Education says, ALEKS is designed only to work for math, chemistry, and business classes. For everything else, the company offers LearnSmart, which like Knewton adds an adaptive element to courses in a wide range of subject areas, allowing faculty to continually test and gauge each student’s knowledge and skills. Teachers can customize what it tests or use out-of-the-box content for certain classes.

Knewton’s platform in particular boasts the ability to add an “adaptive layer” to just about any type of educational content. It first analyzes how students engage with both the text and the practice exercises therein. Then it applies machine-learning algorithms to present the material in the order it thinks will be most useful to a given student. But there’s a caveat, says Jose Ferreira, Knewton’s founder: “Knewton cannot work when there’s no right answer.”

I talked with two teachers at Kean University in New Jersey who use LearnSmart and related McGraw-Hill Education products called SmartBook and Connect in their communications classes. (SmartBook is a kind of interactive e-book in which the lessons change in response to the student’s answers to pop quizzes along the way.) Both told me the technology has made their lives easier, and they’re convinced it helps their students, too.

Julie Narain, an adjunct professor, said it used to be a struggle grading every assignment for 25 students. Now the software does that for her. Better yet, her students can see right away what they’ve gotten right and wrong, rather than having to wait days to get their grades back. “I know how much time they’ve spent on each problem, and I know which ones they’re getting wrong,” Narain says.

There are a few downsides, however. For one, each semester a few students run into problems with their accounts, or their logins, or they forget how to find their assignments on the platform or submit their work. Valerie Blanchard, who teaches the same communications class at Kean, says it’s nice that the e-books cost less than a print textbook. But, she adds, “It’s a little bittersweet when you have two students who are roommates and they’re in the same class, but they can’t use the same book because everyone has to have their own (login) code.” That might sound like a small inconvenience. But it’s symbolic of a deeper problem with personalized courseware: It treats learning as a solo endeavor.

Remember Whelan’s pre-algebra students, toiling quietly at their computers? Just down the hall was a bustling study room, where I found three young men huddled around a single print textbook, working their way through a math problem. They were taking a different section of the same pre-algebra class, one that was still taught the old-fashioned way. They may well have come into the class with disparate skill levels. But, because they were all assigned the same chapters and exercises at the same time, they could work together, helping and correcting one another as they went. The collaborative process by which they solved the problem involved a different kind of adaptive learning—one in which humans adapt to one another, rather than waiting for algorithms to adapt to them.

What’s gained when technology enters the classroom is often easier to pinpoint than what’s lost. Perhaps that’s because the software aims to optimize for the outcomes we already measure. It’s nice that a program carefully engineered to improve students’ performance on a particular kind of test does in fact improve that performance. But it makes it all too easy to forget that the test was meant only as a proxy for broader pedagogical goals: not just the acquisition of skills and retention of knowledge, but the ability to think creatively, work collaboratively, and apply those skills and that knowledge to new situations. This is not to say that cutting-edge educational technologies can’t serve those broader goals as well. It’s just: How would we know?

“Adaptive technologies presume that knowledge can be modularized and sequenced,” says Watters, the education writer. “This isn’t about the construction of knowledge. It’s still hierarchical, top-down, goal-driven.”

Teaching machines have come a long way, but the speed and apparent efficacy of today’s adaptive software can mask deeper limitations. It tends to excel at evaluating students’ performance on multiple-choice questions, quantitative questions with a single right answer, and computer programming. The best machine-learning software is also beginning to get better at recognizing human speech and analyzing some aspects of writing samples, but natural language processing remains at the field’s far frontier.

Notice a pattern? In short, MIT digital learning scholar Justin Reich argues in a blog post for Education Week, computers “are good at assessing the kinds of things—quantitative things, computational things—that computers are good at doing. Which is to say that they are good at assessing things that we no longer need humans to do anymore.”

The implication is that adaptive software might prove effective at training children to pass standardized tests. But it won’t teach them the underlying skills that they’ll need to tackle complex, real-world problems. And it won’t prepare them for a future in which rote jobs are increasingly automated.

McGraw-Hill Education’s Laster says he’s well aware of adaptive technology’s limitations when it comes to teaching abstractions. Yet technology could still have a place in social science and humanities classrooms, Laster believes, provided it’s well-designed and judiciously implemented. If the technology continues to improve at the pace he thinks it will, adaptive software could someday be smart enough to make inferences about students’ conceptual understanding, rather than just their ability to solve problems that have one right answer. Laster believes his company, which has hired a growing team of machine-learning experts, could achieve that.

In April, McGraw-Hill Education launched its newest adaptive product, called Connect Master. Like ALEKS, it will be made first for math classes, with other disciplines to follow. But Connect Master takes less of a behaviorist approach than a conceptual one, asking a student to show his work on his way to solving a problem. If he gets it wrong, it tries to do something few other programs have attempted: analyze each step he took and try to diagnose where and why he went wrong.

That’s a form of intelligence that others in the field have been working on for years. Koedinger of Carnegie Mellon calls it “step-level adaptation,” meaning that the software responds to each step a student takes toward a solution, as opposed to “problem-level adaptation,” where the software analyzes only the student’s final answer. Carnegie Learning’s Cognitive Tutor, which Koedinger co-developed, helped pioneer this approach. Koedinger and Carnegie Mellon’s Pittsburgh Advanced Cognitive Tutoring Center provided Slate with a basic interactive demo of this sort of functionality.


It’s an impressive feature in some ways, especially considering that Koedinger and his team built the technology underlying this demo back in the late 1980s. But the demo software doesn’t really attempt to dynamically assess the approach you’re taking to any given problem. There’s no artificial intelligence here: it’s simply hard-wired to identify and diagnose a few specific wrong turns that a student could take on the way to an answer.
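The distinction Koedinger draws can be made concrete with a toy example. The Python sketch below is hypothetical and is not how Cognitive Tutor or Connect Master actually works: it assumes every step a student writes is a single linear equation of the form a*x + b = c, stored as a tuple (a, b, c). A problem-level check looks only at the final answer; a step-level check walks through the student’s work and reports the first step that changes the equation’s solution.

```python
from fractions import Fraction
from typing import List, Optional, Tuple

# Hypothetical representation: (a, b, c) stands for the equation a*x + b = c.
Equation = Tuple[Fraction, Fraction, Fraction]


def solution(eq: Equation) -> Optional[Fraction]:
    """Return the unique x satisfying a*x + b = c, or None if a == 0."""
    a, b, c = eq
    if a == 0:
        return None
    return (c - b) / a


def problem_level_check(problem: Equation, final_answer: Fraction) -> bool:
    """Problem-level adaptation: look only at the student's final answer."""
    return final_answer == solution(problem)


def step_level_check(problem: Equation, steps: List[Equation]) -> Optional[int]:
    """Step-level adaptation: return the index of the first step whose
    solution differs from the original problem's, or None if all steps hold."""
    target = solution(problem)
    for i, step in enumerate(steps):
        if solution(step) != target:
            return i
    return None


F = Fraction
problem = (F(3), F(4), F(19))                           # 3x + 4 = 19, so x = 5
good_work = [(F(3), F(0), F(15)), (F(1), F(0), F(5))]   # 3x = 15, then x = 5
bad_work = [(F(3), F(0), F(23)), (F(1), F(0), F(5))]    # 3x = 23 (added 4), then x = 5 anyway

print(problem_level_check(problem, F(5)))    # True: the final answer is right
print(step_level_check(problem, good_work))  # None: every step is valid
print(step_level_check(problem, bad_work))   # 0: the error is in the first step
```

The second student reaches the right answer despite a wrong first step, so a problem-level check would wave the work through. A real tutor would also need to check each transition between consecutive steps, handle far richer expressions, and map each detected error to useful feedback.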

Accurately diagnosing a student’s misconceptions is a harder conundrum than you might think, explains McGraw-Hill Education’s Christensen. An algebra problem may have only one correct answer. But there are countless not-incorrect steps that a student could theoretically take on the path to solving it. If you’re not careful, your software will tell you you’re on the wrong track, when in fact you’re just on a different track than the one your textbook authors anticipated.
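The trap Christensen describes is easy to reproduce. In the hypothetical Python sketch below, restricted for simplicity to single linear equations a*x + b = c stored as (a, b, c), a scripted checker accepts only the next step the authors anticipated, while an equivalence checker accepts any step that leaves the solution unchanged. Dividing both sides of 3x + 4 = 19 by 3 is a perfectly valid move, but it is not the one the script expected. The function names are illustrative assumptions, not any vendor’s API.

```python
from fractions import Fraction
from typing import Optional, Tuple

# Hypothetical representation: (a, b, c) stands for the equation a*x + b = c.
Equation = Tuple[Fraction, Fraction, Fraction]


def solution(eq: Equation) -> Optional[Fraction]:
    """Return the unique x satisfying a*x + b = c, or None if a == 0."""
    a, b, c = eq
    if a == 0:
        return None
    return (c - b) / a


def scripted_check(expected_next: Equation, student_next: Equation) -> bool:
    """Accept only the exact step the authors anticipated."""
    return student_next == expected_next


def equivalence_check(current: Equation, student_next: Equation) -> bool:
    """Accept any step that preserves the equation's solution."""
    return solution(student_next) == solution(current)


F = Fraction
current = (F(3), F(4), F(19))         # 3x + 4 = 19
expected = (F(3), F(0), F(15))        # authors' path: subtract 4 -> 3x = 15
student = (F(1), F(4, 3), F(19, 3))   # student's path: divide by 3 -> x + 4/3 = 19/3

print(scripted_check(expected, student))    # False: flagged as the "wrong track"
print(equivalence_check(current, student))  # True: just a different track
```

Comparing solutions works here only because a single linear equation has one answer to compare; for richer algebra, deciding whether two expressions are equivalent is much harder, which is part of why accurate diagnosis remains difficult.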

That same difficulty makes it hard for software to offer appropriate remediation when a student gets a question wrong. When students miss a question in one of McGraw-Hill Education’s interactive textbooks, the textbook can direct them to a text, graphic, or video explanation of the concept in question. But that requires the textbook authors to anticipate the common mistakes or misunderstandings that might have led students to the wrong answer. McGraw-Hill Education’s own research found that the authors, despite being experts in their field, were amazingly bad at predicting where students’ misconceptions would lie. For every 10 “remediation comments” the authors wrote to address common mistakes, only one would turn out to be useful for any significant proportion of students.

The problem wasn’t that textbook authors were bad at their jobs, Christensen says. It’s that they had never had much feedback about how their textbooks were actually being used. In trying to compose explanations and practice problems that would effectively address students’ needs, they were essentially shooting in the dark.

If they achieve nothing else—if they never solve the hard problems of adaptation and personalization—the rise of interactive courseware should at least help textbook authors to write better textbooks.

All that collection and analysis of student data comes with risks and concerns of its own, however. In an in-depth 2013 story for Inside Higher Ed on Arizona State’s use of Knewton math software, Steve Kolowich examined the technology’s upsides and downsides. Among the upsides: ASU saw an 18 percent increase in pass rates and a 47 percent decrease in withdrawals from the math courses that used Knewton’s adaptive tools.

But the collaboration between a university and a for-profit startup raised some troubling questions about just who owned all the data that flowed from students’ use of the software, and what the owner might ultimately do with it. Knewton’s Ferreira says he envisions a future in which students’ educational records add up to much more than the series of letter grades that make up a traditional transcript. By tracking everything students read, every problem they solve, and every concept they master, Knewton could compile a “psychometric profile” of each student that includes not only what she learned but how readily she learned it, and which sorts of problems she struggled with or excelled at. That sort of data could be of great interest to admissions committees and employers. It could also, in theory, erode the privacy that has traditionally surrounded young people’s schoolwork.

This isn’t merely a theoretical concern. In 2014, an educational data management startup called inBloom folded amid a firestorm of criticism over the way it handled the data it was collecting from various sources on K-12 students’ educational performance. In the scandal’s wake, a handful of ed tech companies, including Knewton and Houghton Mifflin Harcourt, signed a privacy pledge in which they vowed not to sell students’ information, target them with advertising, or use their data for noneducational purposes. They also pledged to limit the data they collect and retain and to give students and parents access to their own records. “Our philosophy is: Data should only be used to improve learning outcomes,” Ferreira says.

It’s reassuring that the industry appears to have grasped early on just how damaging it could be for companies to abuse the data they’re collecting. At the same time, protecting sensitive data from breaches and hackers has proven to be a daunting task in recent years, even for some of the world’s most secure and high-tech organizations. Earnest promises alone aren’t likely to dispel those concerns.

There’s a broader worry about the adaptive learning trend: that all of the resources being expended on it will one day prove to have been misguided.

Let’s take for granted, for a moment, that the best of today’s adaptive software already works well in a developmental math classroom like Whelan’s, where the core challenge is to take a roomful of students with disparate backgrounds and teach them all a well-defined set of skills. Let’s stipulate that adaptive software will continue to catch on in other educational settings where the pedagogical goals involve the mastery of skills that can be assessed via multiple-choice or short-answer quizzes. Let’s further assume that the machine-learning algorithms will continue to evolve, the data will grow more robust, the content will continue to improve, and teachers will get better at integrating the software into their curricula. None of this is certain, but it’s all plausible, even in the absence of any great technological leaps.

Where critics are likely to grow queasy is at the point where the technology begins to usurp or substitute for the types of teaching and learning that can’t be measured in right or wrong answers. At a time when schools face tight budgets and intense pressure to boost students’ test scores, especially at the K-12 level, it’s easy to imagine some making big bets on adaptive software at the expense of human faculty. It’s equally easy to imagine the software’s publishers trumping up their claims of efficacy in pursuit of market share. (Ferreira told me he believes that’s exactly what some of Knewton’s rivals are already doing, although he declined to name them publicly.) Schools might also, intentionally or not, drive out teachers who resist the push to rebuild their classes and teaching styles around the courseware. If that happens, there is a real chance that the adaptive learning trend will do more harm than good.

To see how this is possible, consider an example that has become well known among education scholars. In a 1986 study, a researcher asked grade-schoolers the following question: “There are 125 sheep and five dogs in a flock. How old is the shepherd?”

The students’ math education had not trained them to stop and ask questions like: “What does this problem really mean?” or “Do I have enough evidence to answer it?” Rather, it had trained them by rote to apply mathematical operations to story problems, so that’s just what they did. Some subtracted five from 125; some divided, finding 25 a more plausible age for a shepherd than 120. Three-fourths gave numerical answers of one sort or another. The concern is that adaptive software might train generations of students to become ever more efficient at applying rote formulas without ever learning to think for themselves.

So perhaps adaptive software will turn out to be a false step on the path to a better education system. But there’s also a risk in marking a step as wrong before you’ve seen where it leads. Remember, that study was conducted long before ALEKS or Knewton existed. It was a critique of the very textbooks these products aim to replace. It would be a mistake, in criticizing today’s educational technology, to romanticize the status quo.

Our best hope may be that educators themselves heed the lesson of the shepherd problem: Before they start to apply solutions, they need to make sure they understand the problem. Ideally, they’ll find ways to make use of a promising new technology—without turning their students into sheep.