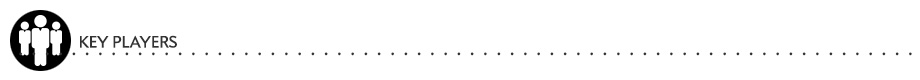

Pieter Abbeel: Abbeel is a robotics and artificial intelligence researcher at UC–Berkeley, where his lab is teaching a robot to think by allowing it to play in much the same manner as a human child.

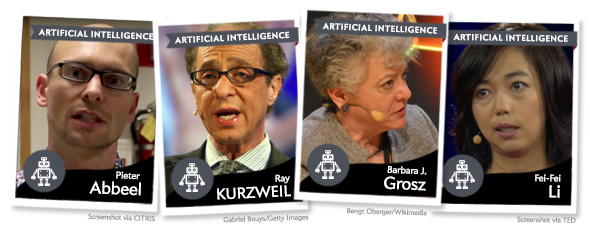

Nick Bostrom: A philosopher at the University of Oxford, Bostrom has written on the threat of A.I.

Ray Kurzweil: Currently serving as a director of engineering at Google, Kurzweil is a prominent futurist who helped popularize the concept of technological singularity.

Barbara J. Grosz: Grosz, a computer scientist based at Harvard, researches how we can turn computers into better collaborators and team members.

Fei-Fei Li: As director of the Stanford Artificial Intelligence and Vision labs, Li has contributed to research that tries to prevent car accidents without entirely removing humans from the equation.

Elon Musk: Musk, who is CEO of Tesla Motors and SpaceX, has both contributed to A.I. research and warned against its dangers.

Francesca Rossi: A University of Padova professor who publishes extensively on A.I., Rossi has argued for a future in which humans and A.I. complement one another.

Eliezer Yudkowsky: Yudkowsky, who works with the Machine Intelligence Research Institute, aims to ensure that we develop friendly A.I.

Photo illustration by Slate. Bethesda Softworks, © 1984 Metro-Goldwyn-Mayer Studios Inc. All Rights Reserved, and © Universal Pictures International.

Excession, by Iain M. Banks: Though it offers a vision of a civilization in which A.I. coexists with human-like beings, this novel also shows how a society can be disrupted by forces beyond its comprehension.

Exegesis, by Astro Teller: In this epistolary novel, a computer scientist exchanges emails with a newly awoken artificial intelligence as it works to discern its place in the world.

Ex Machina, directed by Alex Garland: This unnerving thriller revolves around the uncertain intentions of an artificial being.

Fallout 4, by Bethesda Softworks: In this post-apocalyptic video game, a group of sinister robots emerges from the ashes of a research institute based on MIT.

The Terminator, directed by James Cameron: This film, in which a robot travels back in time to kill the mother of humanity’s savior, is arguably the most canonical killer A.I. text.

Illustration edited by Slate. Illustration by Thinkstock.

Existential threat: A force capable of completely obviating human existence.

Autonomous weapons: The proverbial killer A.I., autonomous weapons would use artificial intelligence rather than human intelligence to select their targets.

Machine learning: Unlike conventional computer programs, machine-learning algorithms adjust their own parameters in response to data, improving at their assigned tasks without being explicitly reprogrammed.

Alignment problem: A situation in which the methods artificial intelligences use to complete their tasks fail to correspond to the needs of the humans who created them.

Theory of mind: A basic building block of social intelligence, a theory of mind involves the ability to anticipate and respond to the thoughts of others.

Singularity: Although the term has been used broadly, the singularity typically describes the moment at which computers become so adept at modifying their own programming that they transcend current human intellect.

Superintelligence: A superintelligence would exceed current human mental capacities in virtually every way and be capable of transformative cognitive feats.

“Inside the Artificial Intelligence Revolution: A Special Report,” by Jeff Goodell: In this two-part series, Goodell investigates the current state of A.I. and robotics, offering a clear, thoughtful, and non-alarmist look into where we might be headed.

In Our Own Image: Savior or Destroyer? The History and Future of Artificial Intelligence, by George Zarkadakis: With sweeping scope, Zarkadakis approaches artificial intelligence from historical, evolutionary, and philosophical angles. He ultimately argues that the real risk of A.I. may not be that it will kill us, but that it could take away what makes us human.

“The Singularity: A Philosophical Analysis,” by David Chalmers: Taking seriously the possibility of a coming “intelligence explosion,” Chalmers thinks through “the place of humans in a post-singularity world.”

“Autonomous Weapons: An Open Letter From A.I. and Robotics Researchers”: In this open letter, co-signed by numerous key players in the field, along with other concerned parties, the Future of Life Institute warns against the surprisingly human dangers of weaponized A.I.

“Inside the Surprisingly Sexist World of Artificial Intelligence,” by Sarah Todd: The attitudes that shape A.I. today may well inform the ways it relates to us in the future. With this possibility in mind, Todd interrogates many of the “outdated ideas about gender” that pervade research in the field.

Superintelligence: Paths, Dangers, Strategies, by Nick Bostrom: Working from a position grounded in academic philosophy, Bostrom considers the long-term consequences of creating thinking beings that would exceed us in almost every way.

Alienness: As artificial intelligence emerges, it will likely evolve along pathways that differ from those followed by biological life forms. Will A.I. be so different that it can’t have meaningful contact with humans?

Morality: There’s no guarantee that A.I. will share our sense of right and wrong. Can we incorporate ethical principles into computers before they outstrip us?

Roko’s Basilisk: According to this chilling thought experiment, merely thinking about how to stop a superintelligence from coming into being might condemn you to a lifetime of torment. Are we already doomed?

Technopanic: Worrying about the way computers will develop in the future may actually blind us to the ways that they’re shaping our lives today. Are we focusing on the wrong things when we worry about the future of A.I.?

Weaponization: A.I. promises to make the methods of war more precise, thereby reducing casualties and avoiding other catastrophes. But many worry that autonomous weapons could actually worsen conflicts. How much trouble will we get into if we give our weapons the power of thought?

This article is part of the A.I. installment of Futurography, a series in which Future Tense introduces readers to the technologies that will define tomorrow. Each month from January through June 2016, we’ll choose a new technology and break it down.

Future Tense is a collaboration among Arizona State University, New America, and Slate.