The Sensitive Robot: How Haptic Technology is Closing the Mechanical Gap

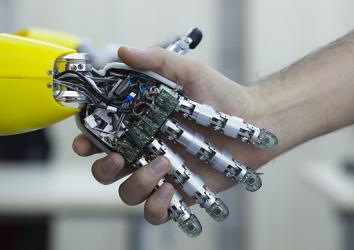

One thing that has always separated humans from robots is our ability to touch and feel, but advances in haptic technology are rapidly closing the mechanical gap.

There are surgeons operating on patients right now who can't feel their instruments. Similarly, there are workers in nuclear facilities around the world using remote manipulator arms to handle radioactive materials without a sense of what they're touching. It's an epidemic of numbness that afflicts virtually everyone who performs a manual job with robotic assistance—from bomb disposal experts in Afghanistan to astronauts aboard the International Space Station.

The problem isn't physiological, but mechanical. As robots become increasingly common, particularly in high-stakes and high-risk situations, the benefit of deploying remotely operated surrogates is hitting a functional wall. For the most part, robots can't feel what they touch, which forces their users to rewire their own instincts and replace simple tactile cues (the contours of a wire between two fingertips, for example) with constant visual confirmation. The result is what researchers call “increased cognitive load,” where the operator must actively think about countless minor tasks that should all be effortless. When the clock is ticking on those tasks, such as when a patient is losing blood or a roadside bomb is about to detonate, the weight of that additional responsibility can become nearly unbearable.

Now, researchers are hoping to lighten that cognitive burden, and possibly make an entire generation of robots more effective, by creating machines that can feel. It's called haptic technology, and it represents one of the most challenging research fields in robotics. But with new sensors and feedback systems finally making it out of the lab, it also happens to be one of the most promising.

Sensory feedback: the thin line between good vibrations and aggravating distractions

At its core, haptics is about machines communicating through touch, whether that means a joystick that grinds to a halt when the manipulator it’s commanding hits an obstacle, or a touch screen that buzzes with each tap on its virtual keyboard. Vibration is the most common form of haptic feedback, and the rattle of an incoming call on a silenced cell phone is its most common application.

Extending that concept to robotics seems straightforward. When a surgical robot, like Intuitive Surgical's da Vinci system, touches a patient's tissue with its manipulator, why not signal the operator with a mild buzz? “You don't want to put vibration on a surgeon's hand,” says Ken Steinberg, CEO of Massachusetts-based Cambridge Research & Development, which has tested its own haptic device for use with surgical robots. Since their hands already pinch and torque the machine's controls to direct the manipulators inside a patient's body, surgeons have told Steinberg that near-constant vibration would be, at best, a nuisance and, at worst, a confusing, finger-numbing distraction. “Vibration absolutely does not work with the human body,” says Steinberg. “The nerves lose track of which vibration is stronger and which one is weaker. All it does, over time, is aggravate you.”

Cambridge R&D's solution is less buzz, more nudge. The company recently demonstrated the Neo, a prototype linear actuator: a headband-mounted mechanism that moves up and down, rather than with the circular motion typical of most motors. When the da Vinci system's instruments or manipulators make contact with anything, the Neo’s small tactor (a tiny transducer that conveys pressure and vibrations to the user’s skin) pushes against the surgeon's head. The linear actuator allows the tactile feedback to be adjusted by minute degrees, from a feathery tickle that reflects a manipulator brushing against tissue to an unmistakable tap when a suture is pulled taut.
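To make the idea of graded feedback concrete, here is a minimal Python sketch of how a contact-force reading might be mapped to the stroke of a linear tactor. It is not Cambridge R&D's method; the function name, the deadband, the saturation force, and the 2 mm maximum stroke are all hypothetical values chosen for illustration.

```python
def tactor_displacement_mm(
    contact_force_n: float,
    threshold_n: float = 0.05,   # hypothetical deadband: ignore sensor noise below this force
    saturation_n: float = 5.0,   # hypothetical force at which the tactor reaches full stroke
    max_stroke_mm: float = 2.0,  # hypothetical maximum travel of the linear tactor
) -> float:
    """Map a measured contact force (N) to a tactor displacement (mm).

    The mapping is linear between the deadband and the saturation force,
    so a light brush produces a small push and a hard pull produces the
    full-stroke "tap". Forces above saturation are clamped to max stroke.
    """
    if contact_force_n <= threshold_n:
        return 0.0
    fraction = (contact_force_n - threshold_n) / (saturation_n - threshold_n)
    return max_stroke_mm * min(fraction, 1.0)


# Print the commanded stroke for a few sample contact forces.
for force in (0.02, 0.5, 2.525, 8.0):
    print(f"{force:5.3f} N -> {tactor_displacement_mm(force):.2f} mm")
```

A real device would likely use a nonlinear curve tuned to human perception; the linear ramp here simply shows that a position-controlled actuator can express many intermediate levels rather than a single on/off buzz.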

To test the feedback’s effectiveness, a surgeon outfitted with a Neo performed a simulated operation that required him to grasp a vein, something surgeons typically avoid doing with minimally invasive surgical robots because of the high risk of puncture or damage. “We decided to show how delicately we could grab a simulated vein to demonstrate the minimized level of deflection, or crushing,” says Steinberg. “We blindfolded the surgeon and had him use the robot to grab the vein, which was a straw in this case, and told him to stop the moment he could feel the straw.” When the surgeon felt the straw, the robot stopped almost immediately, which Steinberg took as confirmation that a real vein would not have suffered any tissue damage or punctures. Considering that the da Vinci's standard feedback is visual-only, delivered through a high-resolution 3D monitor, it's an impressive glimpse of what transmitted touch has to offer. “This capability costs hundreds of dollars to install, not hundreds of thousands. We think we have a solution for haptics for the next decade,” says Steinberg.

Although Cambridge R&D is hoping to license its technology to companies like Intuitive Surgical, a more traditional form of haptic feedback has already made it into the operating room. The RIO surgical robot, which is used exclusively for hip and knee procedures, is guided by an orthopedic surgeon, but the RIO starts each procedure with a plan already in place, drawn from its own knowledge of the patient based on CT scans conducted ahead of time. So if the human surgeon veers off target or applies too much pressure, the RIO applies what Florida-based Mako Surgical calls “tactile feedback.” It's another term for “force feedback,” in which the controls actively push back against the user.
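Force feedback of this kind is often described in robotics as a “virtual wall” or “virtual fixture”: while the tool stays inside the permitted region the controls move freely, and once it crosses the boundary the controls push back with a spring-like force proportional to how far it has strayed. The Python sketch below illustrates that general idea with a single flat boundary. It is not Mako Surgical's implementation; the class name, stiffness, and geometry are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VirtualWall:
    """Hypothetical planar boundary for penalty-based force feedback.

    Points p with dot(normal, p) <= offset lie in the allowed region.
    """
    normal: tuple[float, float, float]  # unit vector pointing out of the allowed region
    offset: float                       # position of the plane along `normal` (m)
    stiffness: float                    # spring constant k (N/m), illustrative value

    def penetration(self, tool_pos: tuple[float, float, float]) -> float:
        """Distance (m) the tool tip has moved past the boundary; <= 0 if inside."""
        return sum(n * p for n, p in zip(self.normal, tool_pos)) - self.offset

    def feedback_force(self, tool_pos: tuple[float, float, float]) -> tuple[float, float, float]:
        """Force (N) to render on the controls: zero inside, Hooke's law outside."""
        depth = self.penetration(tool_pos)
        if depth <= 0.0:
            return (0.0, 0.0, 0.0)
        # Push back along -normal, growing linearly with penetration depth.
        return tuple(-self.stiffness * depth * n for n in self.normal)


wall = VirtualWall(normal=(0.0, 0.0, 1.0), offset=0.05, stiffness=2000.0)
print(wall.feedback_force((0.0, 0.0, 0.04)))  # inside the planned region: no resistance
print(wall.feedback_force((0.0, 0.0, 0.06)))  # 1 cm past the boundary: pushed back
```

Haptic controllers commonly run a loop like this at high rates (often around 1 kHz) and add damping so the wall feels solid rather than springy; those details are left out of this sketch.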

Haptics may be more effective if robots are designed to react to tactile feedback autonomously

And yet, force feedback isn't a catch-all solution. While the RIO surgical robot can contain its user within a clearly defined area, robots like the ones that responded to the Deepwater Horizon oil spill in 2010, or to the Fukushima Daiichi nuclear power plant disaster in 2011, are navigating the chaos of unpredictable environments. Researchers must shift gears to provide better solutions for the operators of these types of systems, who are tasked with turning wrenches or doorknobs by peering through cameras that are murky with oil, or flickering due to the ionizing effects of elevated radiation levels.

Instead of obsessing over how to transmit the sensation of touch to the user in a meaningful, non-distracting way, companies like Los Angeles-based SynTouch are focusing on the machines themselves. If a robot knows what it's touching, why not program it to react accordingly? SynTouch's BioTac sensors are designed to mimic the human fingertip, detecting force as well as vibration and temperature. By combining all three kinds of inputs, a BioTac-equipped robotic hand might be able to discern glass from metal (based on temperature), and a button from its surrounding panel (based on texture). But as handy as that input would be to a human user, robots might make better use of the information.
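The idea of combining several tactile channels can be illustrated with a very small classifier. The Python sketch below assumes two hypothetical features per touch: how much a heated fingertip cools in the first second of contact (a good thermal conductor such as metal draws heat away faster than glass) and the vibration energy picked up while sliding across the surface (a stand-in for texture). Each touch is assigned to the nearest calibrated centroid. The feature definitions and every number in `CENTROIDS` are invented for illustration; this is not SynTouch's algorithm and not real BioTac data.

```python
import math

# Hypothetical calibration data: mean (temperature drop in deg C during the
# first second of contact, vibration RMS while sliding, arbitrary units)
# for each material. Invented values for illustration only.
CENTROIDS: dict[str, tuple[float, float]] = {
    "glass": (0.8, 0.05),
    "metal": (2.5, 0.05),
    "textured plastic": (0.4, 0.60),
}


def classify_touch(temp_drop_c: float, vibration_rms: float) -> str:
    """Return the material whose centroid is closest (Euclidean) to the reading.

    Temperature separates glass from metal, which feel equally smooth;
    vibration separates smooth surfaces from textured ones.
    """
    reading = (temp_drop_c, vibration_rms)
    return min(CENTROIDS, key=lambda name: math.dist(reading, CENTROIDS[name]))


print(classify_touch(2.3, 0.10))
print(classify_touch(0.9, 0.00))
print(classify_touch(0.5, 0.55))
```

In practice the features would need to be normalized to comparable scales, and a usable classifier would be fitted to many measured samples rather than three hand-picked centroids.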

According to SynTouch co-founder and head of business development Matt Borzage, it's a matter of speed. “With humans, our hands need to make thousands of adjustments per second when holding something. If it starts to slip, to send that information all the way up to the brain and all the way back down the arm takes too long. That's why the spinal cord is connected to the arm,” says Borzage. For a robot hand to achieve that level of human-like precision, and avoid constant fumbling, it has to act independently. Waiting for an operator to receive a haptic signal, process it, and send back a command is a recipe for halting, klutzy failure. “It's why we need to implement some low-level reflexes into the robotic arm that mimic that spinal cord level reflex,” says Borzage.
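Borzage's point about spinal-cord-level reflexes can be sketched as a local control loop that runs on the robot itself. The Python example below assumes a simple Coulomb friction model: an object held between fingertips stays put only while the tangential (sliding) force is at most the friction coefficient `mu` times the normal (grip) force. On every tick, the hypothetical reflex raises the grip force to stay a safety margin above that limit, without waiting for the operator. The friction coefficient, margin, and force cap are illustrative values, not SynTouch parameters.

```python
def reflex_grip_step(
    grip_n: float,
    tangential_n: float,
    mu: float = 0.5,           # assumed friction coefficient between fingertip and object
    margin: float = 1.3,       # assumed safety factor above the slip threshold
    max_grip_n: float = 20.0,  # assumed cap so the reflex cannot crush the object
) -> float:
    """One tick of a hypothetical slip reflex: return the new grip force (N).

    Under Coulomb friction the object holds while tangential <= mu * grip,
    so the reflex keeps grip at or above margin * tangential / mu (up to the cap).
    It never loosens the current grip; relaxing is left to higher-level control.
    """
    required = margin * abs(tangential_n) / mu
    return max(grip_n, min(required, max_grip_n))


def simulate(loads_n: list[float], grip_n: float = 1.0, reflex_on: bool = True,
             mu: float = 0.5) -> tuple[float, bool]:
    """Apply a sequence of tangential loads; return final grip and whether the object slipped."""
    slipped = False
    for load in loads_n:
        if reflex_on:
            grip_n = reflex_grip_step(grip_n, load, mu=mu)
        if abs(load) > mu * grip_n:
            slipped = True
    return grip_n, slipped


# A load that ramps from 0 N to 2 N over 200 ticks (e.g. 0.2 s of a 1 kHz loop).
ramp = [2.0 * i / 199 for i in range(200)]
print(simulate(ramp, reflex_on=False))  # no local reflex: the fixed grip is overwhelmed
print(simulate(ramp, reflex_on=True))   # reflex tightens each tick and holds on
```

The check itself is trivial, but it has to run on every tick, which is only practical if it lives on the robot rather than in a round trip through a remote operator.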

This reflex-based approach is being adopted by the makers of the Shadow Hand, one of the world's most dexterous robotic hands. “For remote manipulation, there's no great benefit yet for having complex sensing because you can't get that back to the operator,” says Shadow Robot Company's managing director Rich Walker. “You want that data to be interpreted by the robot, rather than being rendered back to the human.” The London-based company works closely with SynTouch, which created a Shadow Hand BioTac kit to help research-oriented customers integrate the fingertip sensors with that system. When it comes to widespread real-world adoption, however, such as in the robots used by material handlers in nuclear power facilities, a smart, nimble manipulator could be feasible long before a system with haptic feedback.

Haptic technology may ultimately give robots a stronger sense of “self”

It would be unfair to say that haptic technology, as it is traditionally defined, has been abandoned. If anything, it has evolved to encompass both machines that can feel and machines that can share that sensation with humans. Montreal-based Kinova Systems is currently developing a haptic-enabled upgrade to its existing, joystick-controlled JACO gripper arm. The arm can be attached to a wheelchair, enabling someone with upper-body disabilities to grab and manipulate objects with the gripper's three individually controlled, underactuated “fingers,” and the upgrade would give that person a refined sense of “touch” while doing so. The second phase of the research will apply feedback to the operator, possibly through an armband or headband. The first step, however, will be grippers that sense and identify the objects they touch and adjust accordingly: a glass of water, for example, might be held level, with just enough pressure to maintain a grip without shattering the glass.

The field of haptics, in other words, may wind up helping robots first. If companies like Cambridge R&D, SynTouch and Shadow Robot can successfully court the major players in robotics—the ones whose systems have become ubiquitous in hospitals, under oil rigs, or throughout the world's battlefields and disaster zones—those machines will need less monitoring. They'll catch the wrench before it tumbles into the plume of oil, or snip a single wire within the bomb's snarl of cables. Call it a happy accident: in the quest to make machines that can transmit touch, and that are easier to control, roboticists have made machines that better control themselves.


Disclaimer: All funds are subject to market risk, including possible loss of principal. Funds that invest in a single sector are subject to greater volatility than those with a broader investment mandate. Investing in small companies generally carries more risk than investing in larger companies. Funds investing overseas are subject to additional risks, including currency risk and geographic risk.

The following companies are not held by T. Rowe Price Health Sciences Fund, T. Rowe Price Blue Chip Growth Fund, T. Rowe Price Growth & Income Fund, T. Rowe Price Growth Stock Fund, T. Rowe Price Global Technology Fund, T. Rowe Price New Horizons Fund, or T. Rowe Price Science & Technology Fund as of December 31, 2012: Shadow Robot, Kinova Systems, SynTouch, Mako Surgical, Cambridge Research & Development.

The funds' portfolio holdings are historical and subject to change. This material should not be deemed a recommendation to buy or sell any of the securities mentioned.