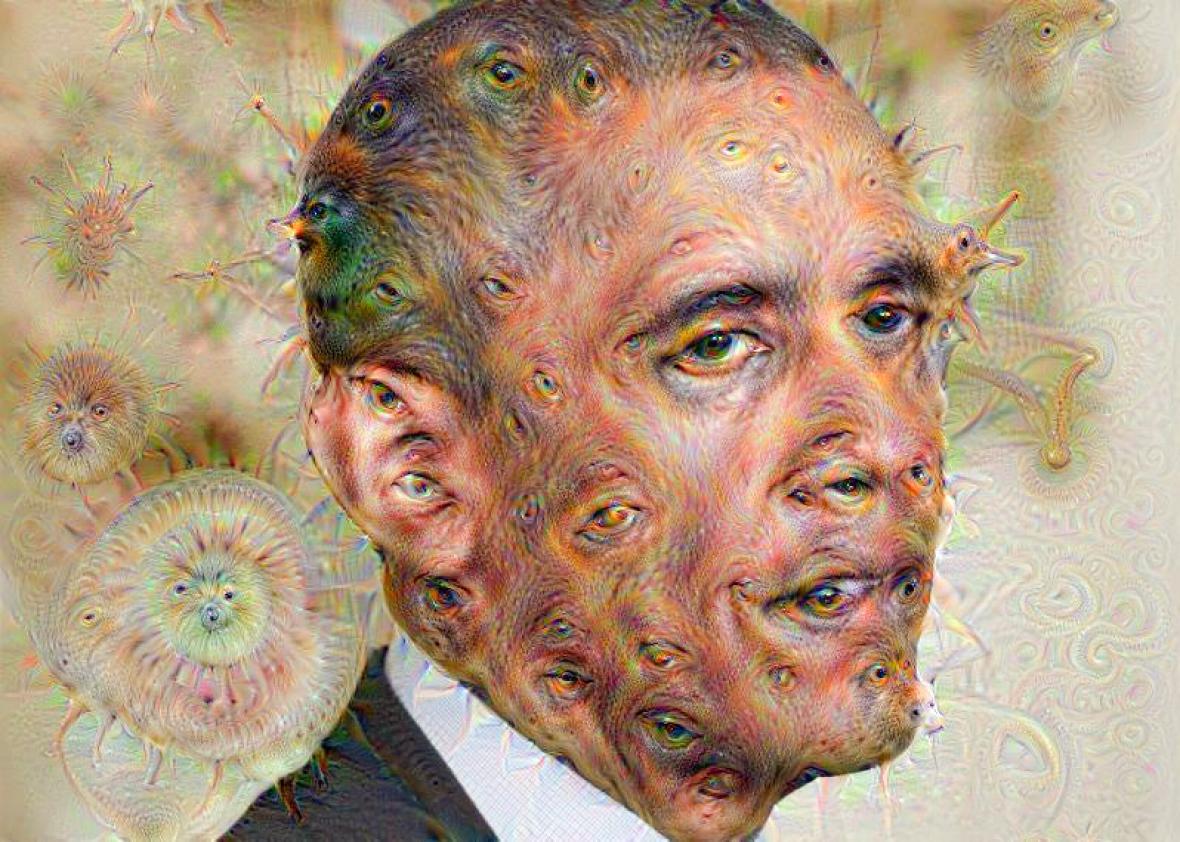

Last month Google introduced a trippy program called DeepDream, which early adopters have used to generate psychedelic variations of everything from Hieronymus Bosch to porn (NSFW!). By taking these objects and subjecting them to analysis using a “neural net”—or what’s known as “deep learning”—DeepDream produces images bubbling with eyes, birds, blinding contours, and other things that look like they belong on the cover of a King Crimson album. It’s dazzling and surreal—further evidence of Salvador Dalí’s discovery that eyeballs can only improve art—but also creepy. It suggests some sort of creativity in the mind of the machine doing the processing, and some sort of dreamer at the heart of the dream.

So what’s going on in the dreaming neural nets, and what does it portend for the future of artificial intelligence? The answer tells us a lot about where artificial intelligence is going—and why it might be more creative, more opaque, and a lot less predictable than we’d perhaps like it to be.

Most write-ups of DeepDream have stumbled over the very concept of a neural net, which doesn’t actually have all that much to do with biological neurons. Neural nets are one tool of machine learning, the field of computer science focused on building trainable systems for pattern recognition and predictive modeling—which, likewise, doesn’t have much to do with human learning. For example, take image recognition of porn images, used to classify whether an image should be filtered from an all-ages search. Programmers feed the network a “training set” of porn images and nonporn images, each classified as such by hand. Based on the training set, the neural network iteratively fine-tunes a large internal set of probabilities and conditions, using it to make a best guess as to whether an unspecified image is pornographic or not. This is very much an estimate, one based on a great number of interdependent probabilities. The predominance of certain skin tones, the presence of certain shapes, the absence of other features—all of these lend greater or lesser weight to the neural net’s ultimate decision. At the end of the training, the network performs far better than it did at the start—which is why it is called “learning.”
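The training process described above can be sketched in miniature. This is only an illustration under loud assumptions: each "image" is reduced to two invented feature scores, the labels are synthetic, and the model is a single-layer logistic classifier rather than a real deep network. The names `features`, `labels`, and `predict` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical hand-labeled training set: each row is one "image" reduced to
# two invented feature scores. Label 1 = "filter", label 0 = "allow".
n = 200
allowed = rng.normal(loc=[0.2, 0.3], scale=0.1, size=(n, 2))
filtered = rng.normal(loc=[0.7, 0.8], scale=0.1, size=(n, 2))
features = np.vstack([allowed, filtered])
labels = np.concatenate([np.zeros(n), np.ones(n)])

def predict(weights, bias, x):
    """Estimated probability that each row of x belongs to the 'filter' class."""
    return 1.0 / (1.0 + np.exp(-(x @ weights + bias)))

def accuracy(weights, bias):
    """Fraction of training rows whose best guess matches the hand label."""
    return float(np.mean((predict(weights, bias, features) > 0.5) == labels))

# Start from random weights: the first guesses are essentially arbitrary.
weights = rng.normal(size=2)
bias = 0.0
accuracy_before = accuracy(weights, bias)

# Iteratively fine-tune the weights to shrink the gap between guess and label.
learning_rate = 0.5
for step in range(2000):
    error = predict(weights, bias, features) - labels
    weights -= learning_rate * features.T @ error / len(labels)
    bias -= learning_rate * error.mean()

accuracy_after = accuracy(weights, bias)
print(accuracy_before, accuracy_after)
```

The output of `predict` is a probability, not a verdict, which is the sense in which the filter's decision is an estimate.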

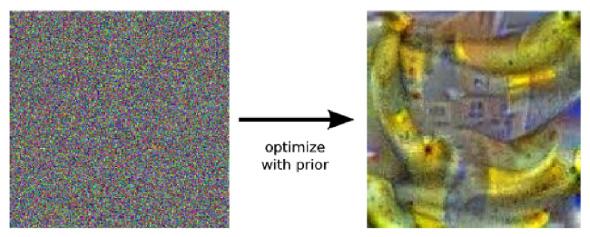

It is exactly this sort of probabilistic guesswork that underlies DeepDream, just in a very different application. Where the porn filter learns to distinguish between porn and nonporn images, DeepDream learns to identify parts of images that resemble other common parts of images (call them archetypes). DeepDream then adds a new wrinkle by altering the original image to look more like the archetype of what DeepDream associates with a particular word, which is why it produces, say, a naked woman festooned with eyeballs. DeepDream can, if asked, “find” an archetype in an image that is total garbage, as when Google researchers asked it to find bananas in random noise. DeepDream located bits and pieces of the noise that matched up the most (still not very much) with its banana archetype, then incrementally altered the image to bring the bananas into relief.

Asked to find bananas, DeepDream finds bananas in noise.

Courtesy of Google Research Blog
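The "find bananas in noise" step can be sketched as gradient ascent on the pixels themselves. This is a deliberately tiny stand-in, not Google's implementation: the `archetype` here is a made-up 8-by-8 diagonal stripe, and the "network" is a single linear detector, whereas DeepDream works with the activations of a trained, many-layered network.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical archetype: a fixed 8x8 pattern standing in for whatever a
# trained network associates with a word. Here it is just a diagonal stripe.
archetype = np.eye(8)
archetype -= archetype.mean()  # zero mean, so a flat image scores zero

def response(image):
    """How strongly the image matches the archetype (one linear detector)."""
    return float(np.sum(image * archetype))

image = rng.normal(scale=0.1, size=(8, 8))  # start from random noise
score_before = response(image)

# Gradient ascent on the image, not on the detector. For this linear detector
# the gradient of the response with respect to the pixels is the archetype
# itself, so each step nudges the pixels slightly toward it.
step_size = 0.05
for _ in range(20):
    gradient = archetype
    image += step_size * gradient

score_after = response(image)
similarity = float(np.corrcoef(image.ravel(), archetype.ravel())[0, 1])
print(score_before, score_after, similarity)
```

The detector never changes in this loop. Only the picture does, which is why the same archetype ends up stamped onto whatever image goes in.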

The imposition of these archetypes, however compelling or surreal they might be, accounts for some of the uniformity of DeepDream’s results. Because it is searching for patterns against an evolving but singular set of archetypes, DeepDream brings out not just the same features (dog, eyeball) in the images it’s given, but the same manifestations of those features (this dog, this eyeball). The homogenized gestalt of the Internet produces DeepDream’s best guess as to what a particular word or phrase “looks like,” and some of the resulting surrealism is simply because the guesses are rather far off. DeepDream doesn’t see any of this, of course; what’s being presented is just a visualization of the patterns that it identifies imposed onto the original image.

Humans and animals work with archetypes too, but theirs are far more elusive things. When we think of a “dog” or an “eye,” we have the ability to distinguish images of them immediately, yet our minds don’t appear to be comparing them against a fixed reference image, even a very good one. Something more mysterious is going on. Still, conditioning neural networks to recognize archetypes in images does take some primitive steps toward pattern recognition, one of the fundamental elements of human cognition. It just doesn’t do so in a particularly human way.

GitHub user iamtrask wrote a short, elegant tutorial on neural networks that contains an 11-line neural network written in Python with the NumPy library:

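    import numpy as np

    # Four training inputs; the third column is always 1 and acts as a bias term.
    X = np.array([ [0,0,1],[0,1,1],[1,0,1],[1,1,1] ])
    # Desired output for each input row (the XOR of the first two columns).
    y = np.array([[0,1,1,0]]).T
    # Random starting weights in [-1, 1) for the two layers of connections.
    syn0 = 2*np.random.random((3,4)) - 1
    syn1 = 2*np.random.random((4,1)) - 1
    for j in range(60000):
        # Forward pass: sigmoid activations of the hidden and output layers.
        l1 = 1/(1+np.exp(-(np.dot(X,syn0))))
        l2 = 1/(1+np.exp(-(np.dot(l1,syn1))))
        # Backward pass: the error at each layer, scaled by the sigmoid's slope.
        l2_delta = (y - l2)*(l2*(1-l2))
        l1_delta = l2_delta.dot(syn1.T) * (l1 * (1-l1))
        # Nudge both sets of weights in the direction that reduces the error.
        syn1 += l1.T.dot(l2_delta)
        syn0 += X.T.dot(l1_delta)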

Even if this code means nothing to you, I hope you’ll trust me when I say that it has very little to do with biological neurons or the brain. Neural nets contain a set of connected “nodes” (termed neurons) that “fire” probabilistically in response to a variety of inputs, analogous to how biological neurons fire when activated to a certain threshold. This, however, is simply an analogy. When Google researchers write, “Even a relatively simple neural network can be used to over-interpret an image, just like as children we enjoyed watching clouds and interpreting the random shapes,” they are not saying that the neural network is interpreting in the way a child’s brain does, but merely that the computer is picking out certain patterns in an image and reinforcing them. In her authoritative history of cognitive science Mind as Machine, Margaret Boden quotes neural network pioneer Frank Rosenblatt describing his perceptron neural network in 1958 as:

> a hypothetical nervous system, or machine … designed to illustrate some of the fundamental properties of intelligent systems in general, without becoming too deeply enmeshed in the special, and frequently unknown, conditions which hold for particular biological organisms.

While some research has attempted to draw neural networks closer to their biological analogues, most neural networks take Rosenblatt’s looser approach. Such networks are similar to the brain not in sharing a particular neuronal model, but in the sense of both using parallel distributed processing—a huge number of neurons (of one kind or another) working in parallel rather than in sequence, in which the actual distribution of labor can be difficult to determine.

Though the neural network executes on code, the code only provides the infrastructure for the learning, not the “knowledge” itself. An operational neural network is less coded than it is trained. The nodes are initially seeded with a random (or at least suboptimal) set of probabilities, and this first iteration of the network does not produce meaningful results. Neural networks are then trained on sample data, which is to say, they are given data sets (images, Web pages, you name it) in which they learn conditional probabilities and relationships between different “features” of the data—though defining what constitutes a “feature” can often be a great part of the difficulty itself. Google’s DeepDream allows a top-level feature to be as fine-grained as a line or as coarse-grained as an object (like an eye). Sometimes a neural network is given a set of “correct” outcomes; other times it’s let free, “unsupervised,” to find whatever patterns in the data it deems most prominent.
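To make "less coded than it is trained" concrete, the sketch below reuses the structure of iamtrask's small network and measures its error before and after training. The fixed random seed, the helper names `sigmoid` and `forward`, and the mean-absolute-error measure are assumptions added for illustration.

```python
import numpy as np

np.random.seed(1)  # assumption: fixed seed so the run is repeatable

X = np.array([[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]])
y = np.array([[0, 1, 1, 0]]).T  # the "correct" outcomes (supervised training)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def forward(syn0, syn1):
    """Run the inputs through both layers; return hidden and output activations."""
    l1 = sigmoid(X.dot(syn0))
    l2 = sigmoid(l1.dot(syn1))
    return l1, l2

# Random seeding: identical code, but no "knowledge" in the weights yet.
syn0 = 2 * np.random.random((3, 4)) - 1
syn1 = 2 * np.random.random((4, 1)) - 1

_, untrained_output = forward(syn0, syn1)
error_before = float(np.mean(np.abs(y - untrained_output)))

# Training: the same update rule as the 11-line network.
for _ in range(60000):
    l1, l2 = forward(syn0, syn1)
    l2_delta = (y - l2) * (l2 * (1 - l2))
    l1_delta = l2_delta.dot(syn1.T) * (l1 * (1 - l1))
    syn1 += l1.T.dot(l2_delta)
    syn0 += X.T.dot(l1_delta)

_, trained_output = forward(syn0, syn1)
error_after = float(np.mean(np.abs(y - trained_output)))
print(error_before, error_after)
```

Nothing in the code changes between the two measurements. Only the numbers stored in `syn0` and `syn1` do, and that is where whatever the network "knows" resides.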

The neural net then can take new data and, using what it “learned” from the training data, apply similar analysis to find the very features that were specified in the initial data. iamtrask’s neural net only contains two “layers” of computational neurons, and is too limited to be capable of “learning” very much. But as additional layers of neurons are piled on, we get to far more sophisticated possibilities and what is called “deep learning.”
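Piling on layers is mechanically simple, as this sketch suggests. It only shows the forward pass through an untrained stack. The layer widths, the count of 20 hidden layers, and the `forward` helper are hypothetical choices, not the architecture of any real system.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(inputs, layer_weights):
    """Pass the inputs through each layer in turn; keep every layer's output."""
    activations = [inputs]
    for weights in layer_weights:
        activations.append(sigmoid(activations[-1] @ weights))
    return activations

rng = np.random.default_rng(0)

# Hypothetical shape: 3 inputs, 20 hidden layers of 8 neurons each, 1 output.
layer_sizes = [3] + [8] * 20 + [1]
layer_weights = [
    rng.uniform(-1, 1, size=(n_in, n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

X = np.array([[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
activations = forward(X, layer_weights)
output = activations[-1]
print(len(layer_weights), output.shape)
```

Each layer sees only the previous layer's output, so by the last layer the link back to the original input runs through every intermediate set of weights. That indirection is part of why deeper networks are harder to inspect.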

By the time you get to the enormous deep learning architectures that Google (where I used to work, and where my wife still does) and others are creating, you have neural networks with 20-plus layers that are capable of achieving remarkable feats of “learning,” such as image recognition, but you have another phenomenon as well: The network’s functioning has become fairly opaque and its behavior has become emergent. The result is no longer algorithmic because an algorithm specifies concrete steps to accomplish a task. The steps here created the neural net itself, but once trained, it has become a system of unpredictable analysis and feedback, only loosely under our control. This is, in fact, why I preferred to avoid neural networks and other forms of machine learning in my own programming: If something went wrong, it was almost impossible to fix it without breaking something else. You had to settle for programs that were mostly approximately working, not perfect. And indeed, the most impressive accomplishments in artificial intelligence today are coming from networks that are increasingly opaque in their mechanism. Their inner workings do not appear “rational,” nor are they algorithmic.

The anti-computational backlash against DeepDream is predictable: Pacific Standard’s Kyle Chayka deems it kitsch. Yet in saying “the computer is undergoing something that humans experience during hallucinations,” he makes the mistake of imposing a clichéd pattern on a very different phenomenon—in other words, doing exactly the thing that DeepDream does. Quoting Walter Benjamin on the emptiness of kitsch is as mechanical and predictable a move as DeepDream’s analysis, evidence that we often do no better than a computer when it comes to originality and creativity. The importance of DeepDream does not lie in the resultant images, but in the computer’s grasp of similarity, the analogical process that lies at the heart of creativity, as well as its trained and stochastic nature, a far cry from the deterministic algorithms that form the fundament of computer science. Art historian Barbara Maria Stafford calls analogy “a metamorphic and metaphoric practice for weaving discordant particulars into a partial concordance,” the ability to understand that something can be like another thing without being identical to it. What DeepDream does is computationally intensive yet incredibly primitive next to the feats of the brain—in essence, it’s finding bits of a picture that vaguely resemble its archetypes of eyes and birds.

But “vaguely resemble” is significant. It is this sort of analogical processing that will lead to far more impressive accomplishments in artificial intelligence: robots with agency, story writing, natural language conversation, and creative problem solving. There is no mythical secret sauce required to generate “human” creativity, just ever-increasing layers of complexity and emergent behavior. We are still a long way from that point, but DeepDream shows how far we’ve come.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.