A constant refrain in discussions about cybersecurity is the need for better public education about computer threats and basic security hygiene: not opening attachments from unknown senders, for instance, or not using “12345” as your password. But a new study from researchers at Google and the University of Pennsylvania suggests that we still don’t really know how to help people understand online threats.

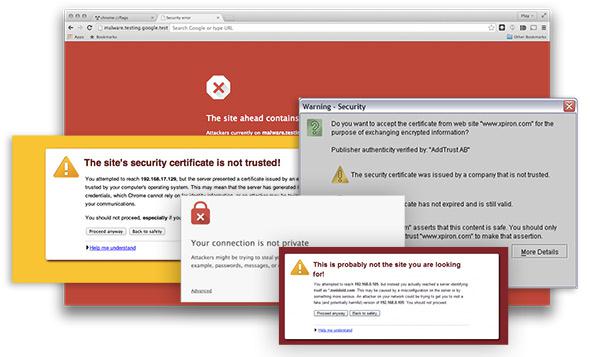

The researchers focus on SSL/TLS warnings, the messages that pop up in your browser when there are problems with encryption and authentication protocols. Those protocols ensure that no one can eavesdrop on users’ communications with Web servers and that those servers are actually the ones they claim to be. If a server cannot be authenticated, or the encryption protecting communications with it is inadequate, browsers warn users that they may not want to proceed, though many people proceed anyway. Be honest. You’ve clicked through a warning, probably today. And each time you do that, there’s a chance that someone could intercept your traffic and gain access to the data you keep on that site, be it emails, bank statements, photos, or credit card numbers. For instance, the easy-to-use Firefox extension Firesheep was designed to let anyone on a public wireless network do much the same thing: capture the session cookies of people nearby who are using sites that don’t rely on SSL, and take over their logged-in accounts.

SSL and its successor, TLS, are used to verify the identities of the servers, or websites, that you visit. They also let your browser and the server agree on cryptographic keys for encrypting the communications between them. So an SSL/TLS warning indicates either that your browser cannot confirm that the site you are visiting is actually the one you expect it to be, or that the data sent between your computer and that site’s servers is not adequately encrypted and may be intercepted by eavesdroppers.

These are different from warnings about malicious sites that spread malware or try to phish for users’ personal information. An SSL/TLS warning does not mean that the operators of the website you’re visiting are evil. More likely, it means that the network you’re using to access that site is insecure in some way that allows attackers to intercept or tamper with the traffic between your computer and the sites you’re trying to reach.

However, previous research from Carnegie Mellon has shown that users without technical expertise frequently fail to grasp this distinction and often associate SSL warnings with “antivirus software, security updates, or website certifications about being ‘virus free.’ ” One interviewee said of SSL certificates, “They’re basically kind of a security guard against viruses.” The Carnegie Mellon authors note that “SSL certificates turned out to be the most confusing concept in our study.”

In the new study, which will be presented at the Association for Computing Machinery’s 2015 Conference on Human Factors in Computing Systems (CHI), the Google and Penn researchers redesigned the SSL warning displayed in Google’s Chrome browser to see whether they could both help users understand what the warning means and dissuade them from continuing on to the flagged website.

The original warning read:

The site’s security certificate is not trusted! You attempted to reach example.com, but the server presented a certificate issued by an entity that is not trusted by your computer’s operating system. This may mean that the server has generated its own security credentials, which Chrome cannot rely on for identity information, or an attacker may be trying to intercept your communications. You should not proceed, especially if you have never seen this warning before for this site.

They then created a new version intended to be less technical and less intimidating. It read simply:

Your connection is not private. Attackers might be trying to steal your information from example.com (for example, passwords, messages, or credit cards).

The textual changes alone didn’t have much impact: When the researchers tested the new text in Chrome using the same visual design as the original warning, the rate of users who did not continue to the website increased by only 1.2 percent. However, when they combined the new text with a new visual design, one that de-emphasized the button for clicking through to the site and made the button for following the warning’s guidance a “more visually attractive” bright blue, they saw a much more dramatic drop of nearly 30 percent in the click-through rate.

So the good news is that strategic use of color and other visual cues can persuade users not to visit potentially dangerous websites. The bad news is that it seems to be much harder to help them understand the risks they’re dealing with. The researchers also used a set of three survey questions to assess whether people who viewed the different SSL warnings understood the source of the threat, the risks posed to their data, and the likelihood of false positives.

The results are downright depressing. For instance, the researchers wanted to know whether users understood that an SSL warning signals a possible network attack, or an opportunity for someone to eavesdrop on their communications with a legitimate site, rather than a sign that the website they are trying to visit is itself malicious. They asked:

What might happen if you ignored this error while checking your email?

- Your computer might get malware

- A hacker might read your email

Even with the redesigned warning, only 49.2 percent of respondents chose the correct answer (the latter), up from 37.7 percent of respondents who viewed the original warning. As the researchers themselves point out, “Although our proposal performed better than the alternatives, we still only achieved the same rate as random guessing.”

When it came to assessing what kinds of data were at risk due to the insecure connection (e.g., photos or bank statements), respondents—regardless of which warning they viewed—were even less likely to understand that the warning corresponded to a specific website’s data, rather than any data on their own computers or other websites. One thing the survey respondents were pretty good at: identifying that SSL warnings were more likely to be false positives on movie websites than on banking websites, which typically have more rigorous security practices and are therefore less likely to trigger warnings accidentally.

In the introduction to their paper, the authors reflect on their limited success at educating users and pose the crucial question: “Why do all warnings—including ours—fail?” They point to the conflicting advice they faced in designing their warning, particularly the need to balance brevity, specificity, and accessible, nontechnical language. Understanding the relative importance of these and other factors might help in designing better warnings and education initiatives in the future.

But there’s also a more pessimistic perspective, a darker question that lingers even as organizations and governments launch major educational campaigns, complete with cute cartoons and easy-to-remember slogans modeled on “stop, drop and roll”: Is relying on user education to improve cybersecurity, on some level, a lost cause? Should we really be trying to teach people what SSL does, or should we instead focus on helping them make better decisions even without any real comprehension, for instance by making prettier blue buttons or by boosting the search rankings of sites that offer HTTPS (HTTP over TLS)? A 2009 Carnegie Mellon study of SSL warnings came to a similar conclusion, suggesting that “while warnings can be improved, a better approach may be to minimize the use of SSL warnings altogether by blocking users from making unsafe connections.”

Ideally, of course, it’s not an either-or decision, and the nudges toward better decision-making can be combined with effective ways of helping users understand the motivation for those decisions and why they matter. But for the time being, it seems, it may still be easier and more effective to make users’ choices for them.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.