Whether it’s our location, contact lists, calendars, photo albums, or search requests, app developers, advertising companies, and other tech firms are scrambling to learn everything they can about us in order to sell us things. Data from smartphone apps, aggregated by third-party companies, can indeed paint an eerily accurate picture of us, and data miners are increasingly able to predict how we will behave tomorrow. For example, as Future Tense blogger Ryan Gallagher reported for the Guardian, Raytheon, the world’s fifth-largest defense contractor, has developed software called RIOT (Rapid Information Overlay Technology) that can synthesize a vast amount of data culled from social networks. By pulling, for instance, the invisible location metadata embedded in the pictures our cellphones take, RIOT tracks where we’ve been and accurately guesses where we will be, and it provides all of this information to whoever is running the software. Other companies are increasing the accuracy of such forecasts by comparing our travel habits against our friends’ locations.

Amid the growing popularity of data mining, governments around the world are taking action on perceived misdeeds, like the $7 million fine Google faces for collecting data from unsecured Wi-Fi networks. But the stakes are far higher than lawmakers realize. New consumer devices are emerging that, left unchecked, could enable violations of our personal privacy on a far more intimate level: our brains.

Brain-computer interfaces have been widely used in the medical and research communities for decades, but in the last few years, the technology has broken out of the lab and into the marketplace with surprising speed. (Will Oremus recently explored the potential of BCIs in Slate.) They work by recording brain activity and transmitting that information to a computer, which interprets it as various inputs or commands.

The most commonly used technique is electroencephalography, which is widely known as a medical diagnostic test (especially for detecting seizures) but now has more potential uses. An EEG device is typically a headset with a small number of electrodes placed on different parts of the scalp in order to detect the electrical signals produced by your brain, commonly called brainwaves. While EEG devices cannot read your mind in the traditional, Professor X-y sense, it turns out that your brainwaves can reveal a great deal about you, such as your attention level and emotional state, and possibly much more. For instance, the presence of beta waves correlates with excitement, focus, and stress. One brain signal, known as the P300 response, correlates with recognition, say of a familiar face or object. This response is so well documented that it is widely used by psychologists and researchers in clinical studies. The popularity of EEG devices over other brain scanning technologies, like fMRIs, stems from their low cost, their light weight, and their ability to collect real-time data.
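To make the idea of "the presence of beta waves" concrete, here is a minimal sketch of how software might estimate how much of a signal's power falls in the beta band. It is an illustration only, not how any particular headset works: the 13 to 30 Hz band edges are a common convention rather than something a specific vendor has confirmed, the 128 Hz sampling rate is assumed, and the "recordings" are synthetic sine waves rather than real EEG data.

```python
import math

def band_power_fraction(samples, sample_rate_hz, low_hz, high_hz):
    """Return the fraction of non-DC signal power between low_hz and high_hz."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    total = 0.0
    in_band = 0.0
    # Naive discrete Fourier transform over positive frequencies.
    # Slow, but adequate for a short illustrative window.
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate_hz / n
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        total += power
        if low_hz <= freq <= high_hz:
            in_band += power
    return in_band / total if total else 0.0

SAMPLE_RATE_HZ = 128      # assumed sampling rate
BETA_BAND = (13.0, 30.0)  # assumed band edges, by common convention

def synthetic_signal(components, n=256):
    """Sum of sine waves given as (frequency_hz, amplitude) pairs."""
    return [
        sum(a * math.sin(2 * math.pi * f * i / SAMPLE_RATE_HZ) for f, a in components)
        for i in range(n)
    ]

# Hypothetical "focused" window: strong 20 Hz (beta), weak 6 Hz.
focused = synthetic_signal([(20.0, 1.0), (6.0, 0.5)])
# Hypothetical "relaxed" window: strong 10 Hz, weak 20 Hz (beta).
relaxed = synthetic_signal([(10.0, 1.0), (20.0, 0.5)])

focused_beta = band_power_fraction(focused, SAMPLE_RATE_HZ, *BETA_BAND)
relaxed_beta = band_power_fraction(relaxed, SAMPLE_RATE_HZ, *BETA_BAND)
print(f"beta share, focused window: {focused_beta:.2f}")
print(f"beta share, relaxed window: {relaxed_beta:.2f}")
```

A real device would have to contend with noise, muscle artifacts, and differences between individuals, so a single number like this is at best a rough proxy for attention or stress.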

The medical research community has long been interested in BCI technology as a means to treat patients with paralysis, like those with “locked-in” syndrome (a neurological condition that results in total paralysis but leaves the brain itself unaffected). Through a simple, noninvasive EEG headset, scientists are able to interpret signals from the patients’ brains (for instance, “lift left arm” or “say hello”) and relay these messages to a peripheral device such as an artificial limb, wheelchair, or voice box.

Scientists are also researching the use of BCIs to treat psychiatric disorders like ADHD and depression. In fact, in the summer of 2012, OpenVibe2 released a new attention-training game for children with ADHD. Set in a virtual classroom, the game asks kids to perform various tasks, like focusing on a cartoon or finding an object, while it introduces various stimuli (a truck driving by outside or a dog barking) to try to distract them. An EEG headset measures their level of focus, and objects on screen become blurry as attention wanders, forcing them to refocus their attention.

In the last few years, the cost of EEG devices has dropped considerably, and consumer-grade headsets are becoming more affordable. A recreational headset capable of running a range of third-party applications can now be purchased for as little as $100. There is even an emerging app market for BCI devices, including games, self-monitoring tools, and touch-free keyboards. One company, OCZ Technology, has developed a hands-free PC game controller. NeuroSky, another EEG headset developer, recently produced a guide on innovative ways for game developers to incorporate BCIs for a better gaming experience. (Concentration level low—send more zombies!)

Likewise, auto manufacturers are currently exploring the integration of BCIs to detect drivers’ drowsiness levels and improve their reaction time. There is even a growing neuromarketing industry, where market researchers use data from these same BCI devices to measure the attention level and emotional responses of focus groups to various advertisements and products. Scientists remain quite skeptical of the efficacy of these tools, but companies are nevertheless rushing to bring them to consumers.

Courtesy of Grady Johnson and Sean Vitka

The information promised by these devices could offer new value to developers, advertisers, and users alike: Companies could detect whether you’re paying attention to ads, how you feel about them, and whether they are personally relevant to you. Imagine an app that can detect when you’re hungry and show you ads for restaurants or select music playlists according to your mood.

But, as with data collected during smartphone use, the consequences of data collected through BCIs reach far beyond mildly unsettling targeted ads. Health insurance companies could set your deductible based on EEG-recorded stress levels. After all, we live in a world in which banks are determining creditworthiness through data mining and insurance companies are utilizing GPS technology to adjust premiums. With these devices in place, especially with a large enough data set, companies will be able to identify risk indicators for things like suicide, depression, or emotional instability, all of which are deeply personal to us as individuals but dangerous to their bottom lines.

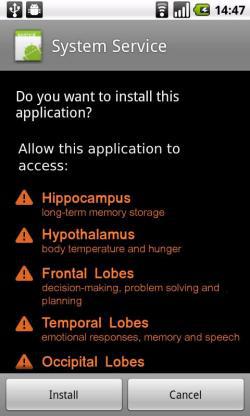

These problems aren’t entirely hypothetical. In August, researchers at the Usenix Security conference demonstrated that these early consumer-grade devices can be used to trick wearers into giving up their personal information. The researchers were able to significantly increase their odds of guessing the PINs, passwords, and birthdays of test subjects simply by measuring their responses to certain numbers, words, and dates.
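The general idea behind this kind of guessing can be sketched in a few lines. The toy example below is not the researchers' method. It simply illustrates how a recognition signal like the P300 could, in principle, be used to rank candidate digits: flash each digit repeatedly, average the recordings that follow each one, and see which digit produces the largest response roughly 300 milliseconds later. Everything here is an assumption made for illustration, including the sampling rate, the 250 to 450 ms window, the simulated wearer, and the deliberately exaggerated signal strength.

```python
import math
import random

SAMPLE_RATE_HZ = 128     # assumed headset sampling rate
EPOCH_SAMPLES = 102      # roughly 800 ms recorded after each stimulus
WINDOW_MS = (250, 450)   # assumed window in which a P300 would appear

def mean_window_amplitude(epochs):
    """Average the epochs for one stimulus, then average over the window."""
    start = int(WINDOW_MS[0] * SAMPLE_RATE_HZ / 1000)
    stop = int(WINDOW_MS[1] * SAMPLE_RATE_HZ / 1000)
    averaged = [sum(column) / len(epochs) for column in zip(*epochs)]
    window = averaged[start:stop]
    return sum(window) / len(window)

def rank_stimuli(epochs_by_stimulus):
    """Return stimuli sorted from strongest to weakest average response."""
    scores = {s: mean_window_amplitude(e) for s, e in epochs_by_stimulus.items()}
    return sorted(scores, key=scores.get, reverse=True)

def simulate_epoch(rng, familiar):
    """Synthetic epoch: Gaussian noise, plus a bump near 300 ms if familiar."""
    epoch = []
    for i in range(EPOCH_SAMPLES):
        t_ms = i * 1000 / SAMPLE_RATE_HZ
        bump = 5.0 * math.exp(-((t_ms - 300) ** 2) / (2 * 50 ** 2)) if familiar else 0.0
        epoch.append(bump + rng.gauss(0, 2.0))
    return epoch

rng = random.Random(0)   # fixed seed so the simulation is repeatable
secret_digit = 7         # hypothetical digit the simulated wearer recognizes
recordings = {
    digit: [simulate_epoch(rng, familiar=(digit == secret_digit)) for _ in range(20)]
    for digit in range(10)
}
ranking = rank_stimuli(recordings)
print("Most likely digit:", ranking[0])
```

Real recordings are far noisier than this simulation, which is consistent with the researchers reporting improved odds of guessing rather than certainty.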

BCIs raise serious law enforcement concerns as well. One company, Government Works Inc., is developing BCI headsets for lie detection and criminal investigations. By measuring a person’s responses to questions and images, the company claims to be able to determine whether that person has knowledge of certain information or events (leading to conclusions, for instance, about whether that person was at a crime scene). According to one BCI manufacturer, evidence collected from these devices has already been used in criminal trials. Although the jury is still out on the reliability of these devices, as psychics, predictive psychology, lie detectors, and unreliable forensics have taught us: Voodoo convicts.

We don’t want to delay or block innovation. We’re excited for the day when we can open doors like a Jedi or play Angry Birds entirely with our minds. Or even better, when double amputees can. But now is the time to ask serious questions about who ultimately controls our devices, who has access to the data stored and collected on them, and how those data are ultimately used. Real-time data that reveal one’s attention level and emotional state are incredibly valuable to buyers, prosecutors, and us data cattle. The question is whether such data will simply be the next grain of personal information bought, taken, volunteered, or stolen in the name of more accurate advertising and cheaper services.

And it’s evident that today’s privacy standards are woefully inadequate. You might assume health privacy laws would offer protection for this category of sensitive data. But you’d be wrong. Those rules apply only to a select group of people and companies—specifically health care providers, health insurers, and those who provide services on their behalf. Thus, most companies’ ability to use BCI data or sell it to one another—even real-time data from your brain—is essentially unchecked.

The Department of Justice’s legal opinion is that law enforcement can access any data a user provides to his or her cellphone company without a warrant, even if no person at the service provider would regularly see that information. This includes passive background data, like the location of the cell towers used to complete a call. Despite its loss on a similar issue at the Supreme Court last year, the DOJ continues to argue that it can access months of a user’s location data stored by phone companies and further that it can compel these companies to provide prospective location information. The deliriously old statute controlling these law enforcement tools was passed in 1986 (though thankfully, legislators like Sen. Patrick Leahy, D-Vt., are trying to update it).

Tomorrow’s Yelp may be interested in more than just your location. Your brainwaves might help it provide more appealing results, and we may volunteer that information without understanding the implications of the data collection. Prosecutors could turn around and use this information as circumstantial evidence: Johnny says he wasn’t angry at the victim, but his brainwave forensics say otherwise. Do you expect the jury to believe the robot lied, Johnny? If our laws remain outdated when these issues begin to come up, this new, incredibly intimate data will be guarded just like our current data: not at all.

This article arises from Future Tense, a collaboration among Arizona State University, the New America Foundation, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.