The most important difference between the world today and 150 years ago isn’t airplane flight or nuclear weapons or the Internet. It’s lifespan. We used to live 35 or 40 years on average in the United States, but now we live almost 80. We used to get one life. Now we get two.

You may well be living your second life already. Have you ever had some health problem that could have killed you if you’d been born in an earlier era? Leave aside for a minute the probabilistic ways you would have died in the past—the smallpox that didn’t kill you because it was eradicated by a massive global vaccine drive, the cholera you never contracted because you drink filtered and chemically treated water. Did some specific medical treatment save your life? It’s a fun conversation starter: Why are you not dead yet? It turns out almost everybody has a story, but we rarely hear them; life-saving treatments have become routine. I asked around, and here is a small sample of what would have killed my friends and acquaintances:

- Adrian’s lung spontaneously collapsed when he was 18.

- Becky had an ectopic pregnancy that caused massive internal bleeding.

- Carl had St. Anthony’s Fire, a strep infection of the skin that killed John Stuart Mill.*

- Dahlia would have died delivering a child (twice) or later of a ruptured gall bladder.

- David had an aortic valve replaced.

- Hanna acquired Type 1 diabetes during a pregnancy and would die without insulin.

- Julia had a burst appendix at age 14.

- Katherine was diagnosed with pernicious anemia in her 20s. She treats it with supplements of vitamin B-12, but in the past she would have withered away.

- Laura (that’s me) had scarlet fever when she was 2, which was once a leading cause of death among children but is now easily treatable with antibiotics.

- Mitch was bitten by a cat (filthy animals) and had to have emergency surgery and a month of antibiotics or he would have died of cat scratch fever.

After a while, these not-dead-yet stories start to sound sort of absurd, like a giddy, hooray-for-modernity response to The Gashleycrumb Tinies. Edward Gorey’s delightfully dark poem is an alphabetical list of children (fictional!) who died gruesome deaths: “A is for Amy who fell down the stairs/ B is for Basil assaulted by bears.” Here’s how modern science, medicine, and public health would amend it:

M is for Maud who was swept out to sea … then brought back to shore by a lifeguard and resuscitated by emergency medical technicians.

O is for Olive run through with an awl … but saved during a four-hour emergency surgery to repair her collapsed lung.

S is for Susan, who perished of fits … or who would have, anyway, if her epilepsy hadn’t been diagnosed promptly and treated with powerful anticonvulsant drugs.

Now we’d like to hear your stories. What would have killed you but didn’t? Which friend or relative is alive today thanks to some medical intervention, whether heroic or trivial? Please send your stories to survival_stories@yahoo.com or share them on Twitter using the hashtag #NotDeadYet. We’ll collect the best stories and publish them in Slate next week, at the end of a series of articles about why life expectancy has increased. And congratulations on being alive!

* * *

When I first started looking into why average lifespan has increased so much so rapidly, I assumed there would be a few simple answers, a stepwise series of advances that each added a few years: clean water, sewage treatment, vaccines, various medical procedures. But it turns out the question of who or what gets credit for the doubling of life expectancy in the past few centuries is surprisingly contentious. The data are sparse before 1900, and there are rivalries between biomedicine and public health, obstetricians and midwives, people who say life expectancy will rise indefinitely and those who say it’s starting to plateau.

It’s important to assign the credit correctly. In much of the developing world, average lifespan hasn’t increased nearly as dramatically as in the United States and the rest of the developed world. (And the United States has a lousy life expectancy compared to other wealthy nations.) Even within the United States, there are huge differences among races, geographic regions, and social classes. Even neighborhoods. These discrepancies are among the greatest injustices of the 21st century. How can we prevent needless premature deaths? What interventions are most likely to grant people in poorer countries extra years? And what’s the best way to prolong life and health even further in rich countries?

To understand why people live so long today, it helps to start with how people died in the past. (To take a step back in time, play our interactive game.) People died young, and they died painfully of consumption (tuberculosis), quinsy (tonsillitis), fever, childbirth, and worms. There’s nothing like looking back at the history of death and dying in the United States to dispel any romantic notions you may have that people used to live in harmony with the land or be more in touch with their bodies. Life was miserable—full of contagious disease, spoiled food, malnutrition, exposure, and injuries.

But disease was the worst. The vast majority of deaths before the mid-20th century were caused by microbes—bacteria, amoebas, protozoans, or viruses that ruled the Earth and to a lesser extent still do. It’s not always clear which microbes get the credit for which kills. Bills of mortality (lists of deaths by causes) were kept in London starting in the 1600s and in certain North American cities and parishes starting in the 1700s. At the time, people thought fevers were spread by miasmas (bad air) and the treatment of choice for pretty much everything was blood-letting. So we don’t necessarily know what caused “inflammatory fever” or what it meant to die of “dropsy” (swelling), or whether ague referred to typhoid fever, malaria, or some other disease. Interpreting these records has become a fascinating sub-field of history. But overall, death was mysterious, capricious, and ever-present.

The first European settlers to North America mostly died of starvation, with (according to some historians) a side order of stupidity. They picked unnecessary fights with Native Americans, sought gold and silver rather than planting food or fishing, and drank foul water. As Charles Mann points out in his fascinating book 1493: Uncovering the New World Columbus Created, one-third of the first three waves of colonists were gentlemen, meaning their status was defined by not having to perform manual labor. During the winter of 1609–10, aka “the starving time,” almost everyone died; those who survived engaged in cannibalism.

Deadly diseases infiltrated North America faster than Europeans did. Native Americans had no exposure and thus no resistance to the common European diseases of childhood, and unimaginable pandemics of smallpox, measles, typhus, and other diseases swept throughout the continent and ultimately reduced the population by as much as 95 percent.

The slave trade killed more than 1 million Africans who were kidnapped, shackled, and shipped across the Atlantic. Those who survived the journey were at risk of dying from European diseases, as well as starvation and abuse. The slave trade introduced African microbes to North America; malaria and yellow fever were the ones that killed the most.

Global trade introduced new diseases around the world and caused horrific epidemics until the 1700s or so, when pretty much every germ had made landfall on every continent. Within the United States, better transportation in the 1800s brought wave after wave of disease outbreaks to new cities and the interior. Urbanization brought people into ideal proximity from a germ’s point of view, as did factory work. Sadly, so did public schools: Children who might have toiled in relative epidemiological isolation on farms were suddenly coughing all over one another in enclosed schoolrooms.

One of the best tours of how people died in the past is The Deadly Truth: A History of Disease in America by Gerald Grob. It’s a great antidote to all the heroic pioneer narratives you learned in elementary school history class, and it makes the Little House on the Prairie books seem delusional in retrospect. Pioneers traveling west in wagon trains had barely enough food, and much of it spoiled; their water came from stagnant, larvae-infested ponds. They died in droves of dysentery. Did you ever play with Lincoln Logs or dream about living in a log cabin? What a fun fort for grown-ups, right? Wrong. The poorly sealed, damp, unventilated houses were teeming with mosquitoes and vermin. Because of settlement patterns along waterways and the way people cleared the land, some of the most notorious places for malaria in the mid-1800s were Ohio and Michigan. Everybody in the Midwest had the ague!

How did we go from the miseries of the past to our current expectation of long and healthy lives? “Most people credit medical advances,” says David Jones, a medical historian at Harvard—“but most historians would not.” One problem is the timing. Most of the effective medical treatments we recognize as saving our lives today have been available only since World War II: antibiotics, chemotherapy, drugs to treat high blood pressure. But the steepest increase in life expectancy occurred from the late 1800s to the mid-1900s. Even some dramatically successful medical treatments such as insulin for diabetics have kept individual people alive—send in those #NotDeadYet stories!—but haven’t necessarily had a population-level impact on average lifespan. We’ll examine the second half of the 20th century in a later story, but for now let’s look at the bigger early drivers of the doubled lifespan.

The credit largely goes to a wide range of public health advances, broadly defined, some of which were explicitly aimed at preventing disease, others of which did so only incidentally. “There was a whole suite of things that occurred simultaneously,” says S. Jay Olshansky, a longevity researcher at the University of Illinois, Chicago. Mathematically, the interventions that saved infants and children from dying of communicable disease had the greatest impact on lifespan. (During a particularly awful plague in Europe, James Riley points out in Rising Life Expectancy: A Global History, the average life expectancy could temporarily drop by five years.) And until the early 20th century, the most common age of death was in infancy.
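The arithmetic behind that claim can be sketched with a toy example. The cohort below is invented for illustration, not drawn from any historical record, and `life_expectancy_at_birth` is a hypothetical helper that simply averages ages at death. Under those assumptions, saving 100 infants raises the average far more than adding a decade to the lives of 100 people who had already reached 70.

```python
# Illustrative only: every number here is made up, not historical data.

def life_expectancy_at_birth(deaths_by_age):
    """Mean age at death for a cohort given {age_at_death: number_of_people}."""
    total_people = sum(deaths_by_age.values())
    total_years = sum(age * count for age, count in deaths_by_age.items())
    return total_years / total_people

# Hypothetical cohort of 1,000 births with heavy infant and child mortality.
baseline = {0: 200, 5: 100, 40: 200, 70: 500}

# Scenario A: 100 of the infants who died now survive to age 70.
save_infants = {0: 100, 5: 100, 40: 200, 70: 600}

# Scenario B: 100 of the people who died at 70 now live to 80 instead.
extend_old_age = {0: 200, 5: 100, 40: 200, 70: 400, 80: 100}

base = life_expectancy_at_birth(baseline)
gain_infants = life_expectancy_at_birth(save_infants) - base
gain_old_age = life_expectancy_at_birth(extend_old_age) - base

print(f"Baseline life expectancy: {base:.1f} years")         # 43.5
print(f"Gain from saving infants: {gain_infants:.1f} years")  # 7.0
print(f"Gain from longer old age: {gain_old_age:.1f} years")  # 1.0
```

Both scenarios help the same number of people, but each rescued infant adds 70 years to the cohort's total while each extended old age adds only 10, which is why the average moves seven times as far in the first case.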

Clean water may be the biggest lifesaver in history. Some historians attribute one-half of the overall reduction in mortality, two-thirds of the reduction in child mortality, and three-fourths of the reduction in infant mortality to clean water. In 1854, John Snow traced a cholera outbreak in London to a water pump next to a leaky sewer, and some of the big public works projects of the late 1800s involved separating clean water from dirty.* Cities ran water through sand and gravel to physically trap filth, and when that didn’t work (germs are awfully small) they started chlorinating water.

Closely related were technologies to move wastewater away from cities, but as Grob points out in The Deadly Truth, the first sewage systems made the transmission of fecal-borne diseases worse. Lacking an understanding of germs, people thought that dilution was the best solution and just piped their sewage into nearby waterways. Unfortunately, the sewage outlets were often near the water system inlets. Finally understanding that sewage and drinking water need to be completely separated, Chicago built a drainage canal that in 1900 reversed the flow of the Chicago River. The city thus sent its sewage into the greater Mississippi watershed and continued taking its drinking water from Lake Michigan.

The germ theory of disease didn’t catch on all that quickly, but once it did, people started washing their hands. Soap became cheaper and more widespread, and people suddenly had a logical reason to wash up before surgery, after defecating, before eating. Soap stops both deadly and lingering infections; even today, kids who don’t have access to soap and clean water have stunted growth.

Housing, especially in cities, was crowded, filthy, poorly ventilated, dank, stinky, hot in the summer, and cold in the winter. These were terrible conditions to live in as a human being, but a great place to be an infectious microbe. Pretty much everyone was infected with tuberculosis (the main cause of consumption), the leading killer for most of the 19th century. It still has a bit of a reputation as a disease of the young, beautiful, and poetic (it claimed Frederic Chopin and Henry David Thoreau, not to mention Mimì in La Bohème), but it was predominantly a disease of poverty, and there was nothing romantic about it. As economic conditions started improving in the 19th century, more housing was built, and it was airier, brighter (sunlight kills tuberculosis bacteria), more weather-resistant, and less hospitable to vermin and germs.

We live like kings today—we have upholstered chairs, clean beds, a feast’s worth of calories at any meal, all the nutmeg (people once killed for it) and salt we could ever want. But wealth and privilege didn’t save royalty from early deaths. Microbes do care about breeding—some people have evolved defenses against cholera, malaria, and possibly the plague—but microbes killed off people without regard to class distinctions through the 1600s in Europe. The longevity gap between the rich and the poor grew slowly with the introduction of effective health measures that only the rich could afford: Ipecac from the New World to stop bloody diarrhea, condoms made of animal intestines to prevent the transmission of syphilis, quinine from the bark of the cinchona tree to treat malaria. Once people realized citrus could prevent scurvy, the wealthy built orangeries—greenhouses where they grew the life-saving fruit.

Improving the standard of living is one important life-extending factor. The earliest European settlers in North America suffered from mass starvation initially, but once the Colonies were established, they had more food and better nutrition than people in England. During the Revolutionary War era, American soldiers were a few inches taller than their British foes. In Europe, the wealthy were taller than the poor, but there were no such class-related differences in America, which means most people had enough to eat. This changed during the 1800s, when the population expanded and immigrants moved to urban areas. Average height declined, but farmers were taller than laborers. People in rural areas outlived those in cities by about 10 years, largely due to less exposure to contagious disease but also because they had better nutrition. Diseases of malnutrition were common among the urban poor: scurvy (vitamin C deficiency), rickets (vitamin D deficiency), and pellagra (niacin deficiency). Improved nutrition at the end of the 1800s made people taller, healthier, and longer lived; fortified foods reduced the incidence of vitamin-deficiency disorders.

Contaminated food was one of the greatest killers, especially of infants; once they stopped breast-feeding, their food could expose them to typhoid fever, botulism, salmonella, and any number of microbes that caused deadly diarrhea in young children. (Death rates for infants were highest in the summer, evidence that they were dying of food contaminated by microbes that thrive in warm conditions.) Refrigeration, public health drives for pure and pasteurized milk, and an understanding of germ theory helped people keep their food safe. The Pure Food and Drug Act of 1906 made it a crime to sell adulterated food, introduced labeling laws, and led to government meat inspection and the creation of the Food and Drug Administration.

People had started finding ways to fight disease epidemics in the early 1700s, mostly by isolating the sick and inoculating the healthy. The United States suffered fewer massive epidemics than did Europe, where bubonic plague (the Black Death) periodically burned through the continent and killed one-third of the population. Low population density prevented most epidemics from becoming widespread early in U.S. history, but epidemics did cause mass deaths locally, especially as the population grew and more people lived in crowded cities. Yellow fever killed hundreds of people in Savannah in 1820 and 1854; the first devastating cholera epidemic hit the country in 1832. Port cities suffered some of the worst outbreaks because sailors brought new diseases and strains with them from all over the world. Port cities instituted quarantines starting in the 19th century, preventing sailors from disembarking if there was any evidence of disease, and on land, quarantines separated contagious people from the uninfected.

A smallpox epidemic in Boston in 1721 led to a huge debate about variolation, a technique that involved transferring pus from an infected person to a healthy one to cause a minor reaction that confers immunity. Rev. Cotton Mather was for it; he said it was a gift from God. Those opposed said that disease was God’s will. People continued to fight about variolation, then inoculation (with the related cowpox virus, introduced in the late 1700s), and finally vaccination. The fights over God’s will and the dangers of vaccinations (real in the past, imaginary today) are still echoing.

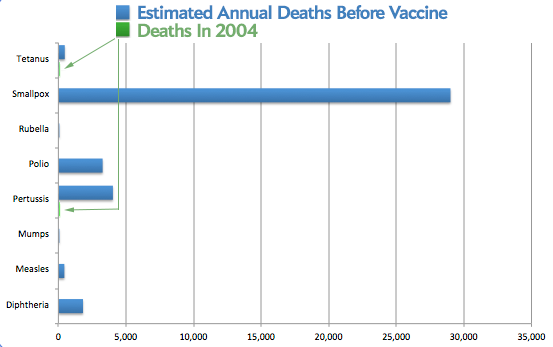

In the early 1900s, antitoxins to treat diphtheria and vaccines against diphtheria, tetanus, and pertussis helped stop these deadly diseases, followed by vaccines for mumps, measles, polio, and rubella.

Anne Schuchat, assistant surgeon general and the acting director of CDC’s Center for Global Health, says it wasn’t just the scientific invention of vaccines that saved lives: The “huge social effort to deliver them to people improved health, extended life, and kept children alive.” Vaccines have almost eliminated diseases that used to be common killers, but she points out that “they’re still circulating in other parts of the world, and if we don’t continue to vaccinate, they could come back.”

Courtesy of Sandra W. Roush, Trudy V. Murphy, and the Vaccine-Preventable Disease Table Working Group/Journal of the American Medical Association

Vaccines have been so effective that most people in the developed world don’t know what it’s like to watch a child die of pertussis or measles, but parents whose children have contracted these diseases because of anti-vaccine paranoia can tell them. “The mistake that we made was that we underestimated the diseases and we totally overestimated the adverse reactions [to vaccines],” says a father in New Zealand whose child almost died of an agonizing bout of tetanus.

Schuchat says the HPV vaccine is a huge priority now; only one-third of teenage girls have received the full series of three shots required to protect them against viruses that cause cervical cancer. The vaccines “are highly effective and very safe, but our uptake is horrible. Thousands of cases of cervical cancer will occur in a few decades in people who are girls now.”

Some credit for the historical decrease in deadly diseases may go to the disease agents themselves. The microbes that cause rheumatic fever, scarlet fever, and a few other diseases may have evolved to become less deadly. Evolutionarily, that makes sense—it’s no advantage to a parasite to kill its own host, and less-deadly strains may have spread more readily in the human population. Of course, sudden evolutionary change in microbes can go the other way, too: The pandemic influenza of 1918–19 was a new strain that killed more people than any disease outbreak in history—around 50 million. In any battle between microbes and mammals, the smart money is on the microbes.

Over the next week, we’ll take a closer look at deaths in childbirth and changes in life expectancy in adulthood. We’ll examine the evolution of old age and the social repercussions of having infants that survive and a robust population of old people, and we’ll list some of the oddball, underappreciated innovations that may have saved your life. In the meantime, please play the Wretched Fate game and send us your #NotDeadYet survival stories on Twitter or survival_stories@yahoo.com.

Please read the rest of Laura Helmuth’s series on longevity, and play our “Wretched Fate” interactive game to learn how you would have died in 1890.

Correction, Sept. 5, 2013: This article originally misspelled John Stuart Mill’s middle name.

Correction, Jan. 6, 2020: This article originally stated that some of the big public works projects of the late 1900s involved separating clean water from dirty. These projects occurred in the late 1800s.