As fake news and political hoaxes proliferated on Facebook during the presidential campaign, the company did little to stop them. Facing a backlash in the days following the election, CEO Mark Zuckerberg downplayed the problem, while making vague assurances that Facebook would look into it further. That only intensified the criticism.

Now, it seems, Facebook is taking it seriously. The company announced on Thursday several new features designed to identify, flag, and slow the spread of false news stories on its platform, including a partnership with third-party fact-checkers such as Snopes and PolitiFact. It is also taking steps to prevent spammers and publishers from profiting from fake news.

The new features are relatively cautious and somewhat experimental, which means they may not immediately have the intended effects. But they signal a new direction for a company that has been extremely reluctant to take on any editorial oversight of the content posted on its platform. And they are likely to evolve over time as the company tests and refines them.

The company detailed the changes in a post on its News Feed FYI blog Thursday.

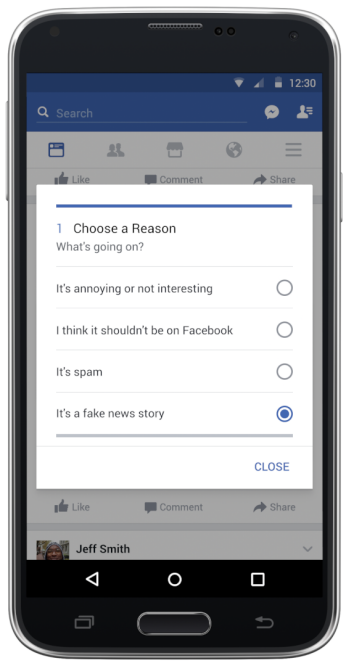

First, it’s trying to make it easier for users to report fake news stories. The drop-down menu at the top right of each post in your feed will now include an explicit option to report it as a “fake news story,” after which you’ll be prompted to choose among several follow-up actions, including notifying Facebook and messaging the person who shared it. (This approach is similar to the one Slate itself has taken with its fake-news-fighting tool, a Chrome browser extension called This Is Fake, which launched on Monday.)

Screenshot courtesy of Facebook

Facebook’s approach to adjudicating the thorny question of what constitutes fake news is a cautious and probably prudent one: It will partner with established fact-checking organizations to assess the accuracy of individual news stories. At launch, the company said the partners will be Snopes, FactCheck.org, ABC News, and PolitiFact, with more to be added later. They’ll all be drawn from the nonprofit Poynter’s International Fact-Checking Network.

This careful, manual approach to fact-checking is likely to be slow, because such organizations generally have limited resources. Facebook will try to aid them, a company representative told me, by providing them with a virtual dashboard that uses an algorithm to surface the viral stories that are being most widely flagged as fake by Facebook users, among other signals.

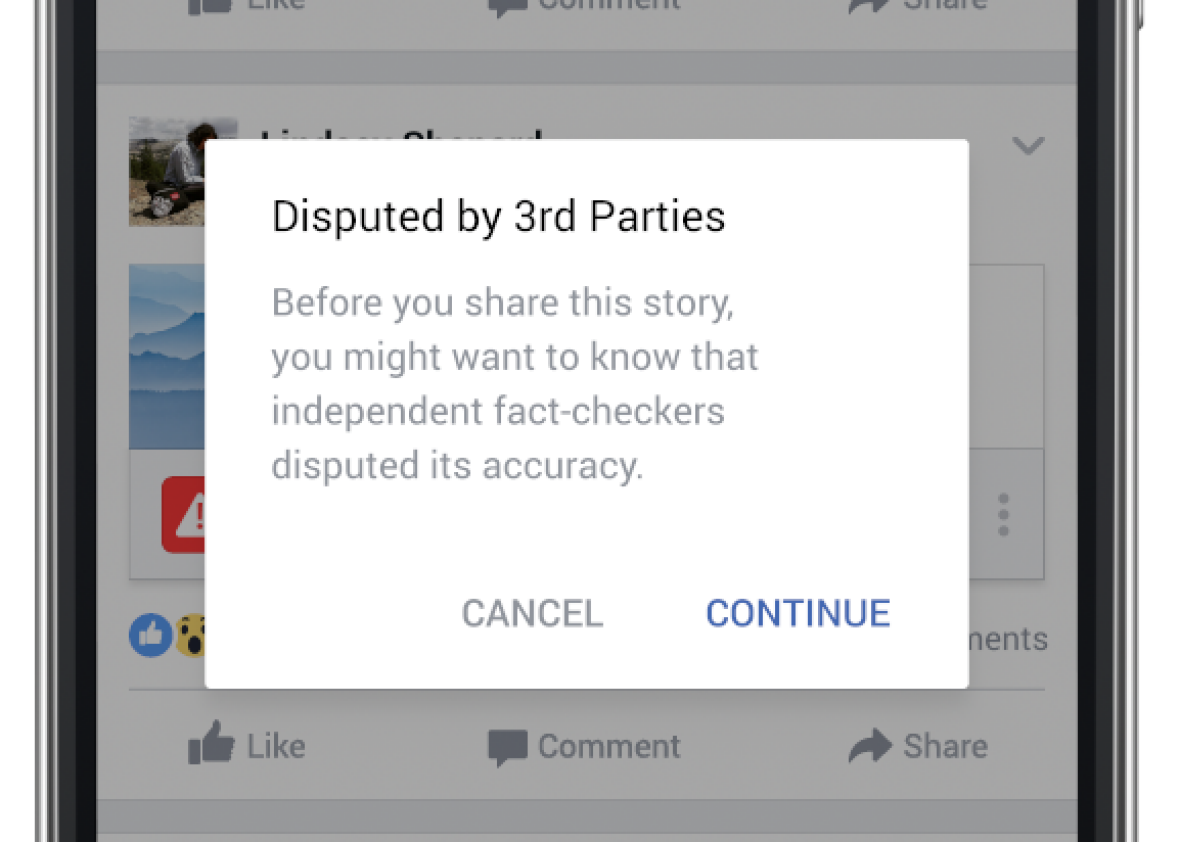

When one of the fact-checking organizations identifies a story as fake, it will appear on Facebook with a notice that the story has been disputed. Its ranking in the news feed will also take a hit, inhibiting its viral spread. And while users will still be allowed to share such stories, they’ll be given a warning that the story has been disputed before they post it. Additionally, Facebook will ban disputed stories from being promoted as ads in the news feed.

Screenshot courtesy of Facebook

At the same time, but separately, Facebook is adding a new signal to its news feed algorithm that will look at whether users are sharing a post before or after they’ve taken the time to read it. This is similar to, but distinct from, another feature that Facebook already uses to assess whether a story might be clickbait. Stories that are being shared mostly by people who haven’t taken time to read them will be ranked lower in the news feed, on the theory that the content itself might not be credible or worthwhile.
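
Facebook has not said how this signal is actually computed, so what follows is only a rough sketch of the idea, not the company's method. It assumes a hypothetical log of share events that records how long each sharer spent on the article before sharing, an arbitrary 10-second cutoff for "read," and an arbitrary maximum penalty of 50 percent. All of the names and numbers here are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ShareEvent:
    """Hypothetical record of one share; not a real Facebook data structure."""
    story_id: str
    read_seconds: float  # assumed: time the sharer spent on the article before sharing


def unread_share_fraction(events: List[ShareEvent],
                          min_read_seconds: float = 10.0) -> float:
    """Return the fraction of shares made after less than min_read_seconds of reading."""
    if not events:
        return 0.0
    unread = sum(1 for e in events if e.read_seconds < min_read_seconds)
    return unread / len(events)


def adjusted_score(base_score: float,
                   events: List[ShareEvent],
                   max_penalty: float = 0.5) -> float:
    """Scale a story's ranking score down as the unread-share fraction rises.

    A story shared only by people who did not read it loses max_penalty
    of its score; a story shared only by readers keeps all of it.
    """
    return base_score * (1.0 - max_penalty * unread_share_fraction(events))


if __name__ == "__main__":
    shares = [
        ShareEvent("story-1", 2.0),
        ShareEvent("story-1", 3.0),
        ShareEvent("story-1", 40.0),
        ShareEvent("story-1", 1.0),
    ]
    print(unread_share_fraction(shares))
    print(adjusted_score(100.0, shares))
```

In this toy version, a story shared mostly by people who never opened it drops in the ranking, while one shared mostly by people who read it is left alone. A real system would presumably combine a signal like this with many others.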

Finally, Facebook is stepping up its efforts to prevent spammers and fake-news sites from promoting stories on its platform, including a mechanism to prevent domain-spoofing, a common tactic of hoax websites.

What’s cautious about this effort is that Facebook is avoiding the responsibility of deciding which news stories are genuine and which are fake. The company is highly sensitive to claims of editorial bias, because its value lies in its ability to appeal to users across the political spectrum. It also avoids using data, algorithms, or artificial intelligence to try to discern truth from falsehood, which is wise given the difficulty of doing so.

I do have one important criticism, however: The company has declined to define its criteria for fake news. A spokesperson did tell me the company will set a high bar for what counts as fake, which I’ve argued is crucial. But the company was unable to say exactly how it would handle stories that its fact-checking partners identify as containing a mixture of truth and falsehood.