Last week a team of 72 scientists released the preprint of an article attempting to address one aspect of the reproducibility crisis, the crisis of conscience in which scientists are increasingly skeptical about the rigor of our current methods of conducting scientific research.

Their suggestion? Change the threshold for what is considered statistically significant. The team, led by Daniel Benjamin, a behavioral economist from the University of Southern California, is advocating that the “probability value” (p-value) threshold for statistical significance be lowered from the current standard of 0.05 to a much stricter threshold of 0.005.

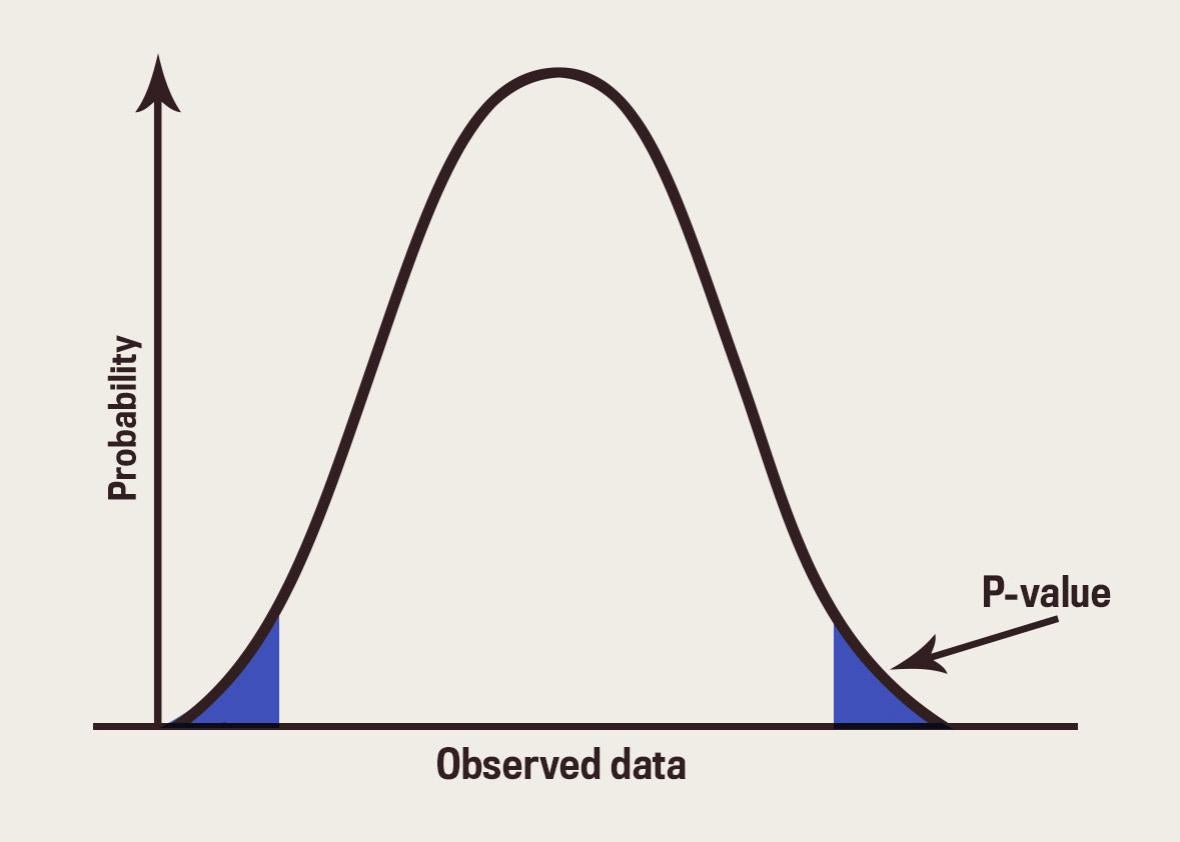

P-values are tricky business, but here’s the basics on how they work: Let’s say I’m conducting a drug trial, and I want to know if people who take drug A are more likely to go deaf than if they take drug B. I’ll state that my hypothesis is “drugs A and B are equally likely to make someone go deaf,” administer the drugs, and collect the data. The data will show me the number of people who went deaf on drugs A and B, and the p-value will give me an indication of how likely it is that the difference in deafness was due to random chance rather than the drugs. If the p-value is lower than 0.05, it means that the chance this happened randomly is very small—it’s a 5 percent chance of happening, meaning it would only occur 1 out of 20 times if there wasn’t a difference between the drugs. If the threshold is lowered to 0.005 for something to be considered significant, it would mean that the chances of it happening without a meaningful difference between the treatments would be just 1 in 200.

On its face, this doesn’t seem like a bad idea. If this change requires scientists to have more robust evidence before they can come to conclusions, it’s easy to think it’s a step in the right direction. But one of the issues at the heart of making this change is that it seems to assume there’s currently a consensus around how p-value ought to be used and this consensus could just be tweaked to be stronger.

P-value use already varies by scientific field and by journal policies within those fields. Several journals in epidemiology, where the stakes of bad science are perhaps higher than in, say, psychology (if they mess up, people die), have discouraged the use of p-values for years. And even psychology journals are following suit: In 2015, Basic and Applied Social Psychology, a journal that has been accused of bad statistical (and experimental) practice, banned the use of p-values. Many other journals, including PLOS Medicine and Journal of Allergy and Clinical Immunology, actively discourage the use of p-values and significance testing already.

On the other hand, the New England Journal of Medicine, one of the most respected journals in that field, codes the 0.05 threshold for significance into its author guidelines, saying “significant differences between or among groups (i.e P<.05) should be identified in a table.” That may not be an explicit instruction to treat p-values less than 0.05 as significant, but an author could be forgiven for reading it that way. Other journals, like the Journal of Neuroscience and the Journal of Urology, do the same.

Another group of journals—including Science, Nature, and Cell—avoid giving specific advice on exactly how to use p-values; rather, they caution against common mistakes and emphasize the importance of scientific assumptions, trusting the authors to respect the nuance of any statistics tools. Deborah Mayo, award-wining philosopher of statistics and professor at Virginia Tech, thinks this approach to statistical significance, where various fields have different standards, is the most appropriate. Strict cutoffs, regardless of where they fall, are generally bad science.

Mayo was skeptical that it would have the kind of widespread benefit the authors assumed. Their assessment suggested tightening the threshold would reduce the rate of false positives—results that look true but aren’t—by a factor of two. But she questioned the assumption they had used to assess the reduction of false positives—that only 1 in 10 hypotheses a scientist tests is true. (Mayo said that if that were true, perhaps researchers should spend more time on their hypotheses.)

But more broadly, she was skeptical of the idea that lowering the informal p-value threshold will help fix the problem, because she’s doubtful such a move will address “what almost everyone knows is the real cause of nonreproducibility”: the cherry-picking of subjects, testing hypothesis after hypothesis until one of them is proven correct, and selective reporting of results and methodology.

There are plenty of other ways that scientists are testing to help address the replication crisis. There’s the move toward pre-registration of studies before analyzing data, in order to avoid fishing for significance. Researchers are also now encouraged to make data and code public so a third party can rerun analyses efficiently and check for discrepancies. More negative results are being published. And, perhaps most importantly, researchers are actually conducting studies to replicate research that has already been published. Tightening standards around p-values might help, but the debate about reproducibility is more than just a referendum on the p-value. The solution will need to be more than that as well.