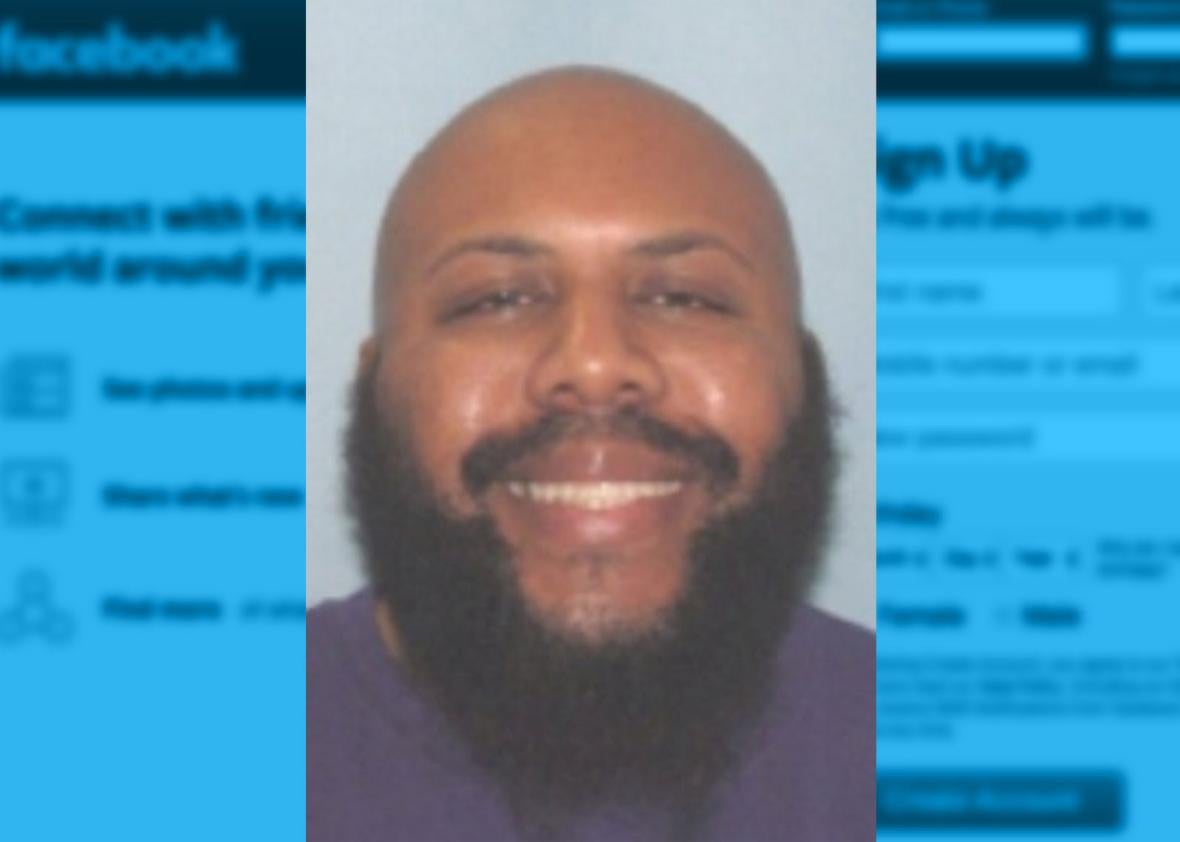

On Sunday in Cleveland, a man appears to have shot and killed a 74-year-old stranger point-blank, recorded it on his phone, and uploaded the video to Facebook. He then broadcast himself on Facebook Live as he drove around the city telling viewers he was searching for his next victims in what he said would be an “Easter Day slaughter.” While there is no evidence the perpetrator has killed anyone else, he remained at large Monday afternoon.

The brutal slaying was the latest in a string of violent acts that have found their way onto Facebook since the company launched its live video feature a year ago. But you can’t really blame a social media platform for the appalling uses to which disturbed people apply its tools—or can you?

In this case, Facebook has been criticized for failing to promptly take down the video, which remained on suspected shooter Steve Stephens’ page for several hours, according to the Verge. It has since removed both the video and Stephens’ account, offering the following statement:

This is a horrific crime and we do not allow this kind of content on Facebook. We work hard to keep a safe environment on Facebook, and are in touch with law enforcement in emergencies when there are direct threats to physical safety.

It’s well and good for Facebook to say it doesn’t “allow” people to broadcast cold-blooded killings on the site, in the sense that it’s against the rules. The fact is, its service allowed just that, at least for long enough for large numbers of people to watch the video and even interact with the suspect before the company took it down.

Now that shooting people live on Facebook (or in this case, on a slight tape delay) is apparently a thing—along with assaulting people live on Facebook, getting shot by police live on Facebook, and committing suicide live on Facebook—it stands to reason that the company bears some responsibility. But it’s worth taking a closer look at exactly what that responsibility is and what actions Facebook could take to fulfill it.

One extreme view would be that Facebook bears full responsibility for what its users publish, and deserves to be criticized or potentially even punished for whatever role its product plays in incentivizing or amplifying heinous behavior. In this view, Facebook Live would be essentially untenable as a product, because there’s no way Facebook could possibly police every video around the world in real time with perfect accuracy.

At the other extreme would be the claim that Facebook Live and Facebook video are merely tools, and it’s not up to the company to control how people use them. Internet startups have a rich history of pleading ignorance, helplessness, or neutrality with respect to their users’ behavior. Stephens, in this view, was going to kill one way or another and find a way to publicize it. That he opted to post his misdeeds to Facebook instead of YouTube or Snapchat is not Facebook’s fault.

That’s a little too convenient, however. Any number of different broadcast platforms can be used to amplify objectionable messages. But they lend themselves to different balances between editorial oversight and publisher autonomy. A TV station can exert relatively tight control of what it broadcasts, albeit not full control, as we saw when a pair of reporters were shot to death live on a local newscast in Virginia in 2015. (The shooter posted his own graphic video to Twitter soon afterward.) By contrast, content moderation is a much thornier problem if you run, say, a real-time, global, anonymous microblogging service. Twitter says it took down some 377,000 terror-related accounts in just the second half of 2016, yet it is still routinely accused of failing to adequately police its network.

Facebook Live is attracting scrutiny because it lies near the far end of this spectrum. It’s real-time; anyone can use it at any moment; the medium of personal video is as intimate as it gets; and the potential audience for a popular stream is tremendous thanks to Facebook’s algorithms. Its one advantage over Twitter, when it comes to moderation, is that accounts aren’t anonymous, which means that you can’t use it with impunity. By broadcasting on Facebook, Stephens made it highly likely that he’ll be caught, and in the meantime Facebook can deny him his page.

That aside, it’s hard to imagine a platform more ripe for user abuse or more difficult to effectively monitor.

So while it may be wrong to blame Facebook for the shooting itself, it’s fair to expect that the company would have thought hard about the ways its live video features could be misused and devoted itself to finding ways to mitigate the damage before releasing them.

It’s safe to say at this point that Facebook did not do that. If anything, the company seems to have welcomed the potential for drama as a way to stay relevant with young people in the face of challenges from Snapchat and other rivals. In an exclusive interview with BuzzFeed’s Mat Honan to announce Facebook Live in April 2016, CEO Mark Zuckerberg repeatedly and excitedly used the words “raw” and “visceral” to describe the product’s potential. His quotes from that piece take on a rather grisly dimension in light of Sunday’s killing:

We built this big technology platform so we can go and support whatever the most personal and emotional and raw and visceral ways people want to communicate are as time goes on. …

Because it’s live, there is no way it can be curated. And because of that it frees people up to be themselves. It’s live; it can’t possibly be perfectly planned out ahead of time. Somewhat counterintuitively, it’s a great medium for sharing raw and visceral content.

Nowhere in the interview does he express serious concern about the possibility that someone might broadcast a murder, a suicide, a police shooting, a sexual assault, or an armed standoff. It’s no surprise, then, that as each of these scenarios has transpired in just the first year of the product’s existence, Facebook has appeared woefully unprepared to deal with them.

It isn’t just a matter of being slow to take down the offending content. As this rundown by Fortune’s Mathew Ingram makes clear, the company has appeared to adjust its policies on an ad hoc basis, stumbling over each episode in new and different ways.

Zuckerberg said in a recent Fast Company interview, “I believe more strongly than ever that giving the most voice to the most people will be this positive force in society.” He evinced no awareness that the bloody abuses of Facebook Live have challenged that notion repeatedly and, yes, viscerally.

That it would be extremely hard for Facebook to prevent people from abusing its hot new product is not an excuse. It’s a feature of the system that Facebook built. And if the company’s reputation suffers because it can’t find better ways to handle it, that’s no one’s fault but its own.

Update, April 18, 2017, 2:30 p.m. EST: This post has been updated with additional information.