Anyone who’s followed the debates surrounding autonomous vehicles knows that moral quandaries inevitably arise. As Jesse Kirkpatrick has written in Slate, those questions most often come down to how the vehicles should perform when they’re about to crash. What do they do if they have to choose between killing a passenger and harming a pedestrian? How should they behave if they have to decide between slamming into a child and running over an elderly man?

It’s hard to figure out how a car should make such decisions in part because it’s difficult to get humans to agree on how we should make them. By way of evidence, look to Moral Machine, a website created by a group of researchers at the MIT Media Lab. As the Verge’s Russell Brandon notes, the site effectively gamifies the classic trolley problem, folding in a variety of complicated variations along the way. Some scenarios ask you to decide whether a vehicle should spare its passengers or the people in an intersection. Others present two differently composed groups of pedestrians, say a handful of female doctors or a collection of besuited men, and ask which an empty car should slam into. Additional complications, including the presence of animals and details about whether the pedestrians have the right of way, sometimes muddle the question further.

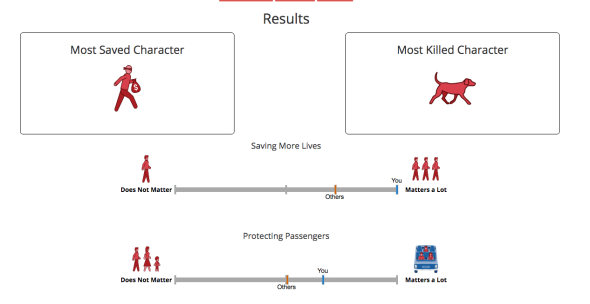

Once you’ve made your way through 13 of these binaristic scenarios, Moral Machine presents you with an explanation of how you stack up against the average visitor. I, for example, discovered that I apparently favor the lives of criminals over those of dogs, but treat young and old humans as relatively equal. Below all this data, a note informs visitors, “Our goal here is not to judge anyone, but to help the public reflect on important and difficult decisions.”

Moral Machine

Slate writers who took the quiz reported that those dilemmas sent them in a variety of directions. L.V. Anderson, for example, told me that she “tended to kill off the passengers, on the theory that they assumed the risks of riding in a self-driving car.” I, by contrast, found that, all other things being equal, I gave slight preference to car passengers over pedestrians, assuming (arguably against the intentions of the site) that the latter might be able to dive out of the way, while the former had no agency or control whatsoever. Science writer Matt Miller largely agreed with Anderson on the choice to protect pedestrians over passengers, since “pedestrians didn’t ask for this.” The two diverged, however, on the relevance of traffic lights. Miller claimed that he “showed pretty little regard for upholding the law, since the law in this case is just ‘don’t jaywalk,’ ” while Anderson admitted that she killed off people who were walking on red.

Though these results obviously aren’t scientific, and likely don’t reflect how we’d make such choices under real circumstances, they nevertheless speak to the difficulties of automating morality. As Adam Elkus has argued in Slate, the trouble with imparting “human” values to computers is that different humans value competing things under varied circumstances. In that sense, the true lesson of Moral Machine may be that there’s no such thing as a moral machine, at least not under the circumstances that the site invites its visitors to explore.

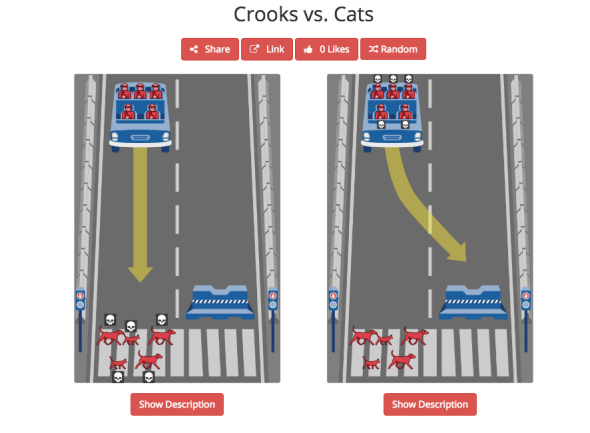

Still, there’s fun to be had here: Moral Machine’s most compelling feature may also be its silliest, a built-in system that allows visitors to create their own dilemmas, letting them fiddle with an array of variables. While it’s possible to engineer highly specific scenarios (say, one in which a car has to choose between taking out three homeless men and taking out an elderly woman and a baby), the easiest dilemmas to build may be the goofiest. In a nod to my own troubling preference for outlaws, I created the scenario “Crooks vs. Cats,” in which you have to decide between killing off a car full of hardened criminals and a gang of assorted pets. Before you decide, know this: The pets are jaywalking.


Tinkering with Moral Machine at this level ultimately points to an odd underlying assumption. Here, we’re assuming that computers will be able to autonomously evaluate and classify human beings. Separating cats and people is simple enough, even with existing machine vision technology. But how, one wonders, would a car “know” whether someone is homeless? And if it can identify criminals, would it do so by referencing their tattoos? Looking up their mug shots? Regardless, if a car’s sensors can single out individuals in a crowd as belonging to one class or another—or, for that matter, form conclusions about the identity of its passengers—it’s already making moral claims.