Everyone knows it’s in Facebook’s interest to get us all to overshare, right? The more we post, the looser our privacy settings, the more data the company gleans, and the better it can target us with personalized advertisements. Not only that, but the more we post publicly, the more useful Facebook becomes as a search engine, a news service, a virtual water cooler, and a recommendation service. (To understand why, just imagine how worthless Yelp would be if a majority of its users turned their reviews to “private” or “friends-only.”)

The baseline assumption for many people these days is that Facebook will take all the data it can get and will offer privacy options only when it absolutely has to.

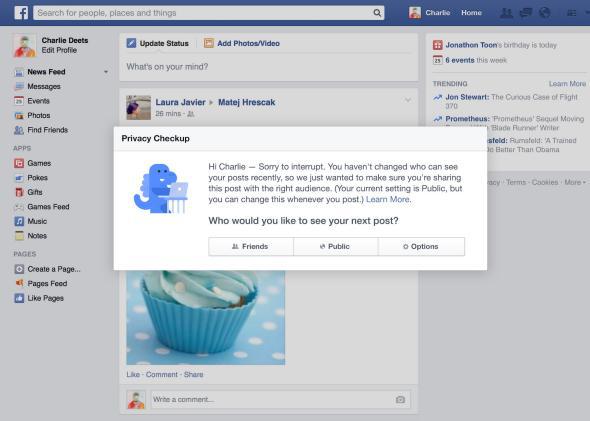

And yet a new privacy measure, which Facebook is testing this week on a subset of users, suggests the company might not be quite as shameless or rapacious as many of its users, and the media, tend to assume. It’s called “Privacy Checkup,” and it takes the form of a pop-up box that appears once per user, just as you’re about to post something publicly on your timeline.

In case you’re unable to believe your eyes, I’ll confirm that this is indeed an instance of Facebook checking in to make sure you’re not accidentally sharing things with a wider audience than you realized. That little blue dinosaur, it turns out, is a friendly privacy dinosaur.

You might suspect this is actually a clumsy attempt on Facebook’s part to get people to change their settings from “friends” to “public.” But it isn’t, at least for now: A Facebook spokesman told me the only people seeing the pop-up box in the current test are those who are already posting publicly on Facebook. From Facebook’s perspective, then, this feature can only lead to one thing: Fewer people sharing publicly.

Why, then, would Facebook do this? I can think of two plausible motivations.

The first is that, believe it or not, Facebook understands more than ever that maintaining its users’ trust, or at least a modicum of it, is crucial to its long-term survival. Keeping access to users’ data matters too, of course. But I think Facebook recognizes that no one wins when people accidentally share things with an audience they never intended.

In Mark Zuckerberg’s ideal, “open and connected” world, people would probably be far more comfortable sharing things publicly than they are today. But that’s not the world we live in, and he knows it’s better for Facebook to keep its shyer users sharing privately than to lose them altogether. And remember: Facebook also just spent a cool $19 billion on a messaging service that is inherently private. Clearly the company is well aware of the value and importance of non-public communications.

The second motivation for Facebook to run a test like this is simple: It’s good PR. Remember, the people seeing these Privacy Checkups are those who are already sharing publicly—which means that the test group probably includes a disproportionate number of celebrities, public figures, and media-and-advertising types. Some of them, no doubt, will write articles like this one—or this one, or this one. And here’s something you don’t see every day: an international privacy group congratulating Facebook on looking out for its users.

The fact is, Facebook has never been pure evil, and it will never be purely good either. It’s a company whose interests are sometimes in tension with those of its users, but only to a certain extent. In the end, Facebook only wins if its users feel safe using it. Zuckerberg and company probably could have spared themselves an awful lot of backlash and mistrust if they had fully embraced that lesson a little earlier on.

Previously in Slate: