In 2005, a group of MIT graduate students decided to goof off in a very MIT graduate student way: They created a program called SCIgen that randomly generated fake scientific papers. Thanks to SCIgen, for the last several years, computer-written gobbledygook has been routinely published in scientific journals and conference proceedings.

According to Nature News, Cyril Labbé, a French computer scientist, recently informed Springer and the IEEE, two major scientific publishers, that between them they had published more than 120 algorithmically generated articles. In 2012, Labbé had told the IEEE of another batch of 85 fake articles. He’s been playing with SCIgen for a few years: in 2010, a fake researcher he created, Ike Antkare, briefly became the 21st most highly cited scientist in Google Scholar’s database.

On the one hand, it’s impressive that computer programs are now good enough to create passable gibberish. (You can entertain yourself by trying to distinguish real science from nonsense on online quiz sites.) But the wide acceptance of these papers by respected journals is symptomatic of a deeper dysfunction in scientific publishing, in which quantitative measures of citation have acquired an importance that is distorting the practice of science.

The first scientific journal article, “An Account of the improvement of Optick Glasses,” was published on March 6, 1665 in Philosophical Transactions, the journal of the Royal Society of London. The purpose of the journal, according to Henry Oldenburg, the society’s first secretary, was “to spread abroad Encouragements, Inquiries, Directions, and Patterns, that may animate.” The editors continued, explaining their purpose: “there is nothing more necessary for promoting the improvement of Philosophical Matters, than the communicating to such, as apply their Studies and Endeavours that way, such things as are discovered or put in practice by others.” They hoped communication would help scientists to share “ingenious Endeavours and Undertakings” in pursuit of “the Universal Good of Mankind.”

As the spate of nonsense papers shows, scientific publishing has strayed from these lofty goals. How did this happen?

Over the course of the second half of the 20th century, two things took place. First, academic publishing became an enormously lucrative business. And second, because administrators erroneously believed it to be a means of objective measurement, the advancement of academic careers became conditional on contributions to the business of academic publishing.

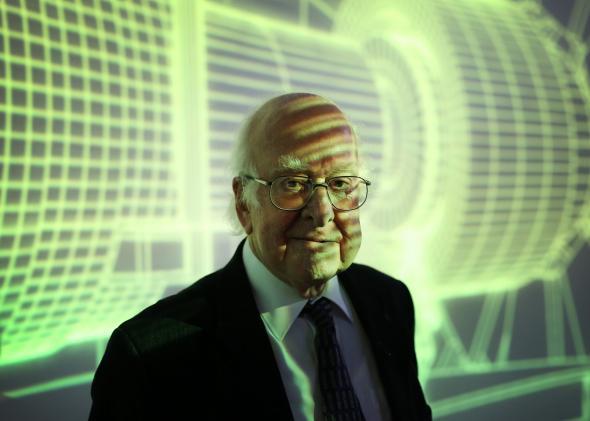

As Peter Higgs said after he won last year’s Nobel Prize in physics, “Today I wouldn’t get an academic job. It’s as simple as that. I don’t think I would be regarded as productive enough.” Jens Skou, a 1997 Nobel Laureate, put it this way in his Nobel biographical statement: today’s system puts pressure on scientists for “too fast publication, and to publish too short papers, and the evaluation process use[s] a lot of manpower. It does not give time to become absorbed in a problem as the previous system [did].”

Today, the most critical measure of an academic article’s importance is the “impact factor” of the journal it is published in. The impact factor, which was created by a librarian named Eugene Garfield in the early 1950s, measures how often articles published in a journal are cited. Creating the impact factor helped make Garfield a multimillionaire—not a normal occurrence for librarians.
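The article does not spell out the arithmetic, so what follows is only a sketch of the commonly described two-year form of the calculation: citations received in a given year to items a journal published in the two preceding years, divided by the number of citable items it published in those two years. The function name and the sample journal figures are hypothetical and chosen purely for illustration.

```python
def two_year_impact_factor(citations_by_pub_year, items_by_pub_year, year):
    """Sketch of a two-year impact factor for `year`.

    citations_by_pub_year: citations received during `year`, keyed by the
        publication year of the cited item.
    items_by_pub_year: number of citable items the journal published,
        keyed by publication year.
    """
    window = (year - 1, year - 2)  # the two preceding publication years
    cites = sum(citations_by_pub_year.get(y, 0) for y in window)
    items = sum(items_by_pub_year.get(y, 0) for y in window)
    if items == 0:
        raise ValueError("no citable items in the two-year window")
    return cites / items


# Hypothetical journal with made-up numbers (not real data).
citations_in_2012 = {2011: 300, 2010: 340}
citable_items = {2011: 150, 2010: 170}

impact = two_year_impact_factor(citations_in_2012, citable_items, 2012)
print(impact)
```

Because the result is a journal-wide average, a handful of heavily cited papers can lift the score for every article the journal prints, which is part of why the number says little about any individual paper.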

In 2006, the editors of PLoS Medicine, then a new journal, were miffed at the capriciousness with which Thomson Scientific (which had bought Garfield’s company in 1992) calculated their impact factor. The PLoS editors argued for “better ways of assessing papers and journals,” meaning new quantitative methods. The blossoming field of scientometrics (with its own eponymous journal, 2012 impact factor: 2.133) aims to come up with more elaborate versions of the impact factor that do a better job of assessing individual articles rather than journals as a whole.

There is an analogy here to the way Google and other search engines index Web pages. So-called search-engine optimization aims to boost the rankings of websites. To fight this, Google, Microsoft, and others employ armies of programmers to steadily tweak their algorithms. The arms race between the link spammers and the search-algorithm authors never ends. But no one at Thomson Reuters (or its competitors) can really formulate an idea of scientific merit on par with Google’s idea of search quality.

Link spam is forced upon even reputable authors of scientific papers. Scientists routinely cite papers from the journals they are submitting to, in the hope of boosting their chances of acceptance. They also publish more papers, as Skou said, in the hope of being more widely cited themselves. This creates a self-defeating cycle, which tweaked algorithms cannot address. The only solution, as Colin Macilwain wrote in Nature last summer, is to “Halt the avalanche of performance metrics.”

There is some momentum behind this idea. In the past year, more than 10,000 researchers have signed the San Francisco Declaration on Research Assessment, which argues for the “need to assess research on its own merits.” This comes up most consequentially in academic hiring and tenure decisions. As Sandra Schmid, the chair of the Department of Cell Biology at the University of Texas Southwestern Medical Center, a signatory of the San Francisco Declaration, wrote, “our signatures are meaningless unless we change our hiring practices. … Our goal is to identify future colleagues who might otherwise have failed to pass through the singular artificial CV filter of high-impact journals, awards, and pedigree.”

Unless academic departments around the world follow Schmid’s example, in another couple of years, no doubt Labbé will find another few hundred fake papers haunting the databases of scientific publication. The gibberish papers (“TIC: a methodology for the construction of e-commerce”) are only the absurdist culmination of an academic evaluation and publication process set up to encourage them.