Theoretical physicist Michio Kaku* rekindled a perennial discussion this week by saying Moore’s Law, the pacesetter of technological advancement over the last half-century, will hold true for only about 10 more years. As Kaku describes, we’re reaching the physical limits of silicon technology, and at a certain point computing power won’t improve at the rate we’ve come to expect.

This may sound familiar. Every few years, it seems, technology reaches the limits of what’s physically possible, and Moore’s Law is given a decade to get its affairs in order.

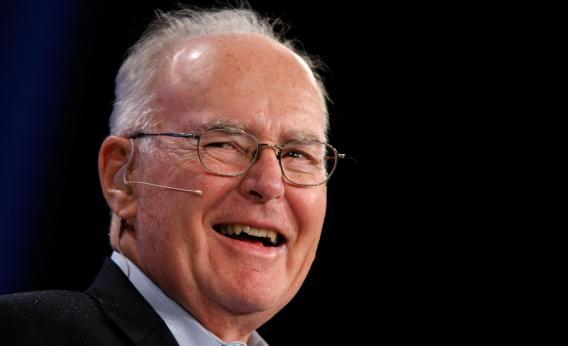

Moore’s Law was first laid out in 1965 by Intel co-founder Gordon Moore. It’s not the kind of specific, immutable law that explains science—it’s more a rule of thumb or a guide for the tech industry. It says the number of transistors that can fit on a single microchip will double every 18 to 24 months. Because transistors carry bits of information inside computers, the maxim is often extended to say computing power doubles as well.
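To get a feel for what that doubling rate implies, here is a minimal Python sketch of the arithmetic. The function name `growth_factor` and the 10-year horizon are illustrative choices, not something taken from Moore or Kaku; the only inputs from the rule of thumb itself are the 18- and 24-month doubling periods.

```python
def growth_factor(years: float, months_per_doubling: float) -> float:
    """Return how many times the transistor count multiplies after `years`,
    assuming one doubling every `months_per_doubling` months."""
    doublings = years * 12 / months_per_doubling
    return 2 ** doublings


# Compare the two ends of the commonly quoted 18-to-24-month range.
for months in (18, 24):
    factor = growth_factor(10, months)
    print(f"{months}-month doubling over 10 years: about {factor:.0f}x")
```

Under these assumptions, a decade of doubling every 24 months yields 32 times as many transistors, while doubling every 18 months yields roughly 100 times as many. The gap between the two ends of the range is one reason the "law" works better as a guide than as a precise forecast.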

Run this model into the future and there is a clear end point. Objects can only get so small, and as Kaku notes, we’re already making transistors just 20 atoms wide. By the time you get down to five atoms, he says, things start to break down. And even then, how could a transistor get smaller than a single atom?
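A back-of-the-envelope sketch shows why a limit measured in atoms translates into a limit measured in years. It rests on a simplifying assumption that is not in Kaku's remarks: that the number of transistors on a chip scales with the inverse square of transistor width, so halving the width quadruples the count. The function names are hypothetical, and the result is a rough illustration rather than Kaku's own calculation.

```python
import math


def doublings_to_shrink(start_width: float, end_width: float) -> float:
    """Number of transistor-count doublings needed to shrink from
    start_width to end_width (same units, e.g. atoms).

    Assumption: transistor count scales as 1 / width**2.
    """
    linear_shrink = start_width / end_width
    return math.log2(linear_shrink ** 2)


def years_to_shrink(start_width: float, end_width: float,
                    months_per_doubling: float) -> float:
    """Years needed for that shrink at a fixed doubling period."""
    return doublings_to_shrink(start_width, end_width) * months_per_doubling / 12


# Widths from the article: 20 atoms today, trouble at about 5 atoms.
for months in (18, 24):
    print(f"{months}-month doubling: {years_to_shrink(20, 5, months):.0f} years")
```

Going from 20 atoms to five is a fourfold shrink in width, or four doublings under this assumption, which works out to six to eight years at the usual 18-to-24-month pace. That is in the same ballpark as the roughly 10 years Kaku gives.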

This isn’t the first time we’ve worried that physical limitations could bring an end to Moore’s Law. Here are a few examples, in reverse chronological order:

- By 2008, there was no way to manufacture anything much smaller than the transistors already in production. Typical transistors were about 65 nanometers wide, and only labs could make them as small as 35 nanometers. The manufacturing process was reaching its physical limits, but as the New Scientist explained, researchers at MIT discovered a way to cut lines as small as 25 nanometers in computer chips. (To understand the scale we’re talking about here, there are 1 million nanometers in a millimeter, and the average human hair is about 100,000 nanometers wide. And just last week Intel started selling chips with 22-nanometer transistors.)

- In 2005, Adam L. Penenberg wrote a Slate article titled “The End of Moore’s Law” that documented the insurmountable challenge of improving transistors as they entered the realm of nanotechnology. Penenberg described how Intel pushed microchips to their physical limit, and the result was an underperforming chip with 90-nanometer transistors. “Unless chip manufacturers figure out some new techniques,” Penenberg wrote, “the march to miniaturization could stall.” Replace the numbers, and it’s fundamentally the same as the argument Kaku made recently: the need to go smaller, the supposed impossibility of doing so, and the question of whether some innovation can keep Moore’s Law from falling apart.

- In 2003, Moore’s Law was under threat, but not only because of speculation about technological limits. The New York Times wrote about “an influential cadre of heretics” who argued that “an end to the slavish demands of Moore’s Law could be the best thing to happen to the culture of Silicon Valley and maybe even to the future of technological innovation that is affected by that culture.” That group included Silicon Valley journalist and investor Michael S. Malone, who called Moore’s Law an unhealthy fixation that was responsible for the dot-com bust a few years earlier. Similarly, the Economist suggested that same year that the pursuit of Moore’s Law was coming to an end not just because of physical constraints, but because microchips were more powerful than anyone really needed them to be. (Take a moment to remember how powerful your cell phone was in 2003 and be glad the gurus in Silicon Valley raced forward.) But warnings about the law’s unavoidable demise continued: According to the Times, in February 2003 Moore told an audience at the International Solid State Circuits Conference that his law would hold up for only another 10 years.

- 1997 was a rollercoaster for Moore’s Law. In February, the New York Times ran a story headlined “Incredible Shrinking Transistor Nears Its Ultimate Limit: The Laws of Physics,” which noted that “the question keeps arising of whether Moore’s law will lapse in the next decade or so, ending the era of explosive growth.” At the time, transistors were as small as 300 nanometers wide. But then, in mid-September, Intel announced a new chip with 250-nanometer transistors that would double computing memory. The next week, IBM announced its own breakthrough to make smaller microchips. And by the first week in October, Steve Lohr at the Times said Moore’s Law was obsolete—because the maxim couldn’t keep up with technology. Look back to 1990 and you’ll find Dean Morton, then-CEO of Hewlett-Packard, saying the same thing.

Though Moore’s Law has held generally true since the first transistor was created in 1947, it has also been tweaked. When Moore first presented the idea in 1965, he said the number of transistors on a chip would double every year. That was later revised to two years, and then it became a range of 18 to 24 months. But even when explaining it for the first time, Moore made one familiar prediction: “Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years.”

Correction, May 3, 2012: This post originally misspelled Michio Kaku’s last name.