Now we’re talking. After three weeks of frustrating math dealing with dull statistics problems, we’re on to frustrating math dealing with “neural networks”—models of how the cells in your brain pass information to one another. These are networks in the most traditional sense of the word: a bunch of circles (representing variables) connected by lines.

So far, my fellow students and I (at least those of us who are still in the game) have become pretty good at training computers. Perhaps our machines couldn’t rival Watson on Jeopardy!, but they’d at least be in the running for a Letterman segment on stupid computer tricks. Unfortunately, training a computer to perform a task, like predicting housing prices, is different from teaching it to learn. We’re getting closer to the heart of that distinction, which begins with a highly simplified model of the way we ourselves adapt to unforeseen problems.

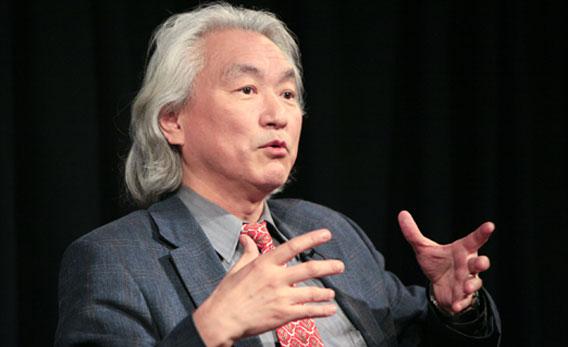

In the science fiction of Arthur C. Clarke and Isaac Asimov, the truly intelligent computer is one that is able to revise its own programming, changing to the point that its creators no longer understand how it works. In reality, a computer learns by repeatedly updating a series of matrices to home in on the best coefficients for calculations that turn one value into another. At face value, this feels disappointing. But in some sense, the truly beautiful and astounding realities of science are always disappointing at the level of calculation. In high school, I feasted on books by physics mavens like Brian Greene and Michio Kaku, who possess the rare ability to make even mind-bending portions of quantum physics and general relativity accessible to a lay audience. (Well, maybe they just make the theories seem within reach, but given the way most people’s eyes glaze over at the word physics, that is not to be underestimated.)

But when I tried to major in physics in college, I found the classes uninspiring, as tedious differential-equation assignments kept me up until 4 a.m. My failure to complete the major, which I still regret, tuned me in to this peculiar divergence: You’re meant to be either a scientist or a fan of science, and you discover which one you are when the math gets rough.

The math is getting rough in this class. Matrix multiplication still twists my mind around, but it’s manageable with enough trial and error in the programming assignments. The larger lesson of the past week in Stanford’s machine learning course is that networks themselves possess a supreme intelligence, and developing one to treat your information well is not so different from allowing a computer to code itself. This is true even of very simple social networks in which no math need be applied. As I wrote in 2010, it was a handmade network of Saddam Hussein’s associates and their brethren that led the 4th Infantry Division and its Special Operations Forces partners to the spider hole where the dictator was hiding. (One of the officers in the 4th ID later devoted his Ph.D. dissertation to calculating the weights in that network in a more rigorous manner.)

This part’s for my fellow geeks: On the simplest level, a neural network can accept two binary values and, by weighting them against a constant “bias” input, determine whether one or both of them are equal to 1. Those are the logical OR and AND operations, basic functions of computer programming. The examples this week got as complex as translating handwritten numerals into digital figures, but at a core level we’re still using the network to do the heavy lifting. How far this can take us isn’t clear. I remain more competent at reading numerals than a computer. Barely.
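For fellow geeks who want to see the idea in action, here is a minimal sketch in Python of a single artificial neuron computing AND and OR. It assumes a sigmoid (logistic) activation thresholded at 0.5, and the weights are hand-picked illustrative values, not ones taken from the course assignments: the bias weight decides how many inputs must be “on” before the weighted sum turns positive.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(bias_weight: float, w1: float, w2: float):
    """Return a function computing one sigmoid unit over two binary inputs.

    The bias unit is a constant input of 1, multiplied by bias_weight.
    """
    def fire(x1: int, x2: int) -> int:
        z = bias_weight * 1 + w1 * x1 + w2 * x2
        # Threshold the activation at 0.5 (equivalently, z >= 0) to get 0 or 1.
        return 1 if sigmoid(z) >= 0.5 else 0
    return fire


# Illustrative, hand-picked weights (an assumption, not learned values).
AND = neuron(-30, 20, 20)  # needs both inputs: -30 + 20 + 20 = 10 > 0
OR = neuron(-10, 20, 20)   # needs at least one: -10 + 20 = 10 > 0

if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, "AND:", AND(x1, x2), "OR:", OR(x1, x2))
```

Nothing here is learned; training a network amounts to finding weights like these automatically, by nudging them until the outputs match the examples.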

Grades:

Review questions: 3.5/5

Programming assignment: 81/100