Chris Rodley doesn’t consider himself an artist or a computer scientist. But when he tweeted an image he created using a machine-learning algorithm that crossed a page of dinosaur illustrations with a page of flower prints, many assumed he was both. The cleverly merged image, which looks like a horticulturist’s take on Jurassic Park, went viral. Soon after, he found himself inundated with messages asking to purchase high-resolution copies.

“It was a highly successful procrastination attempt,” says Rodley, a Ph.D. student in digital cultures at the University of Sydney. He explained that he generated the mashup on DeepArt.io, an online program powered by deep neural networks (a kind of advanced machine-learning algorithm) that identify the stylistic elements of one image and apply them to another. No artistic or coding experience required.

The technique Rodley used with DeepArt is known as style transfer, and it is just one method in a new wave of algorithmically aided art. In recent years, these kinds of programs have seen a resurgence as developers improve them to create increasingly sophisticated and beautiful visual and sonic amalgams, as well as original works, at ever-faster speeds.

As the complexity and capability of these algorithms grow, however, their users are beginning to cede more artistic control over their outputs to machines. In doing so, they may be ceding another type of control, too: ownership over the creative product. And the consequences could transform the way mainstream art is produced, how we consume it, and how we value the artists behind it.

Algorithmic art is nothing new, but in its early days, it didn’t hold a candle to more human art forms. The earliest known generative computergraphik, created by German mathematician and “father of computer art” Georg Nees in the mid-1960s, mostly consisted of black-and-white ink drawings of lines and polygons. The first computer-generated musical composition, Lejaren Hiller and Leonard Isaacson’s Illiac Suite for String Quartet, came even earlier, in 1957. Both efforts were highly experimental and aimed at academic audiences. And both artistic outputs ring with the rigidity of the strict, rudimentary rules encoded in their algorithms. Even later works like Brian Eno’s 1996 album Generative Music 1 (released on floppy disk) still relied on simple, straightforward algorithmic instructions. The end result is, as Eno once described ambient music, “as ignorable as it is interesting.”

Algorithmic art has vastly improved in the past decade thanks in large part to the resurgence of artificial neural networks, a machine-learning method that loosely imitates how neurons in the brain activate one another. Neural networks are particularly good at picking up complicated, hidden patterns in the materials a programmer trains them on. Instead of having to painstakingly code every single rule for transforming an input to an artistic output—as Nees, Hiller, Isaacson, and Eno did—neural nets are set up to automatically derive rules from the materials they are fed.

For example, deepjazz, a program created by Princeton University Ph.D. student Ji-Sung Kim, used artificial neural networks to pick up musical patterns in jazz songs and generate entirely new songs based on them. The software reads a MIDI file and tries to find patterns in the notes and chords of the song as if they were words and phrases in an unknown language. Once deepjazz is convinced it has learned the “grammar” of a song, it outputs random musical sentences that follow the rules of that grammar. One journalist even trained deepjazz to output one of these algorithmic jam sessions based on the theme from Friends. The result was … confusing.
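The idea of learning a “grammar” from note sequences and then generating new sequences that obey it can be illustrated with something far simpler than deepjazz. The sketch below is not deepjazz’s method: it swaps in a first-order Markov chain, which only records which note has followed which. The melody is a hypothetical list of note names rather than a parsed MIDI file, and the function names are illustrative.

```python
import random
from collections import defaultdict

def learn_transitions(notes):
    """Record every note that followed each note: a toy stand-in for a learned 'grammar'."""
    transitions = defaultdict(list)
    for current, nxt in zip(notes, notes[1:]):
        transitions[current].append(nxt)
    return dict(transitions)

def generate(transitions, start, length, seed=None):
    """Random walk that only takes note-to-note steps seen in the training phrase."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this note was never followed by anything
            break
        melody.append(rng.choice(options))
    return melody

# Hypothetical training phrase written as note names (not read from a real MIDI file).
phrase = ["C4", "E4", "G4", "E4", "C4", "D4", "F4", "A4", "F4", "D4", "C4"]
rules = learn_transitions(phrase)
print(generate(rules, "C4", 12, seed=1))
```

Because repeated transitions stay in each list, common note pairs are chosen more often, so the output is random but statistically shaped by the training phrase. A neural network can capture much longer-range patterns than this one-note memory.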

The program that Rodley used to create his floralized prehistoric reptiles also relied on a neural algorithm, specifically the one developed by Leon Gatys and his colleagues at the University of Tübingen in 2015, to pick up on elements of the artistic content and style in images. Since the release of their paper, this kind of style transfer has been used in photo filters from Facebook and Prisma, and on moving images, like the viral video 2001: A Picasso Odyssey. Kristen Stewart, too, used style transfer in her short film and directorial debut Come Swim to redraw a brief dream sequence in the style of an impressionistic painting she had done. (She also got her name on a machine-learning paper to boot.)
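The Gatys paper’s key move is to describe an image’s “style” as the correlations between the feature maps a pretrained convolutional network produces for it (a Gram matrix), and its “content” as the feature maps themselves. The sketch below, assuming NumPy, computes those two losses for a single layer, using random arrays as stand-ins for real network activations; the helper names are hypothetical. The full method sums the style loss over several layers and adjusts the generated image’s pixels by gradient descent to minimize the combined loss, steps this sketch leaves out.

```python
import numpy as np

def gram_matrix(features):
    """features: (channels, height, width) activations from one network layer.
    Returns channel-by-channel correlations, discarding where features occur."""
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T

def style_loss(gen_feats, style_feats):
    """Squared Gram-matrix difference, normalized by 4 * N^2 * M^2 as in Gatys et al."""
    c, h, w = gen_feats.shape
    diff = gram_matrix(gen_feats) - gram_matrix(style_feats)
    return np.sum(diff ** 2) / (4.0 * c ** 2 * (h * w) ** 2)

def content_loss(gen_feats, content_feats):
    """Half the squared error between feature maps: preserves the content's layout."""
    return 0.5 * np.sum((gen_feats - content_feats) ** 2)

def total_loss(gen, content, style, alpha=1.0, beta=1e3):
    # alpha/beta trades content fidelity against style; these values are illustrative.
    return alpha * content_loss(gen, content) + beta * style_loss(gen, style)

rng = np.random.default_rng(0)
content = rng.standard_normal((8, 16, 16))  # stand-in for real CNN activations
# Shuffle spatial positions: same features, scrambled layout.
perm = rng.permutation(16 * 16)
shuffled = content.reshape(8, -1)[:, perm].reshape(8, 16, 16)
print(style_loss(shuffled, content))         # near zero: Gram matrix unchanged
print(content_loss(shuffled, content) > 0)   # layout changed, so content loss grows
```

The demo shows why the Gram matrix works as a style measure: shuffling pixel positions scrambles the layout, so the content loss grows, yet the record of which features occur together stays the same.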

Algorithmic art isn’t limited to abstract or combinatorial art, either. The same general machine-learning techniques have been used to generate high-resolution, photorealistic images of things that don’t exist. Just this week, graphics processing unit manufacturer Nvidia published a paper documenting how researchers did just that, with incredibly convincing results. Among them is a system that takes images of a winter street scene and predicts what they would look like on a leafy, sunny summer day.

The output terrified some on the internet, who feared such increasingly plausible creations would erode trust in media. (For those looking for comfort, Nvidia also crossed house cats and big cats, and, in previous research, created headshots of fake celebrities.) Gene Kogan, a generative artist and author of Machine Learning for Artists, used similar methods to make nonexistent yet realistic images of places, like boathouses and buttes. Though Gatys, Nvidia, and Kogan each use distinct varieties of neural networks, all three systems train on existing works to come up with artistic rules that are then used to create new images.

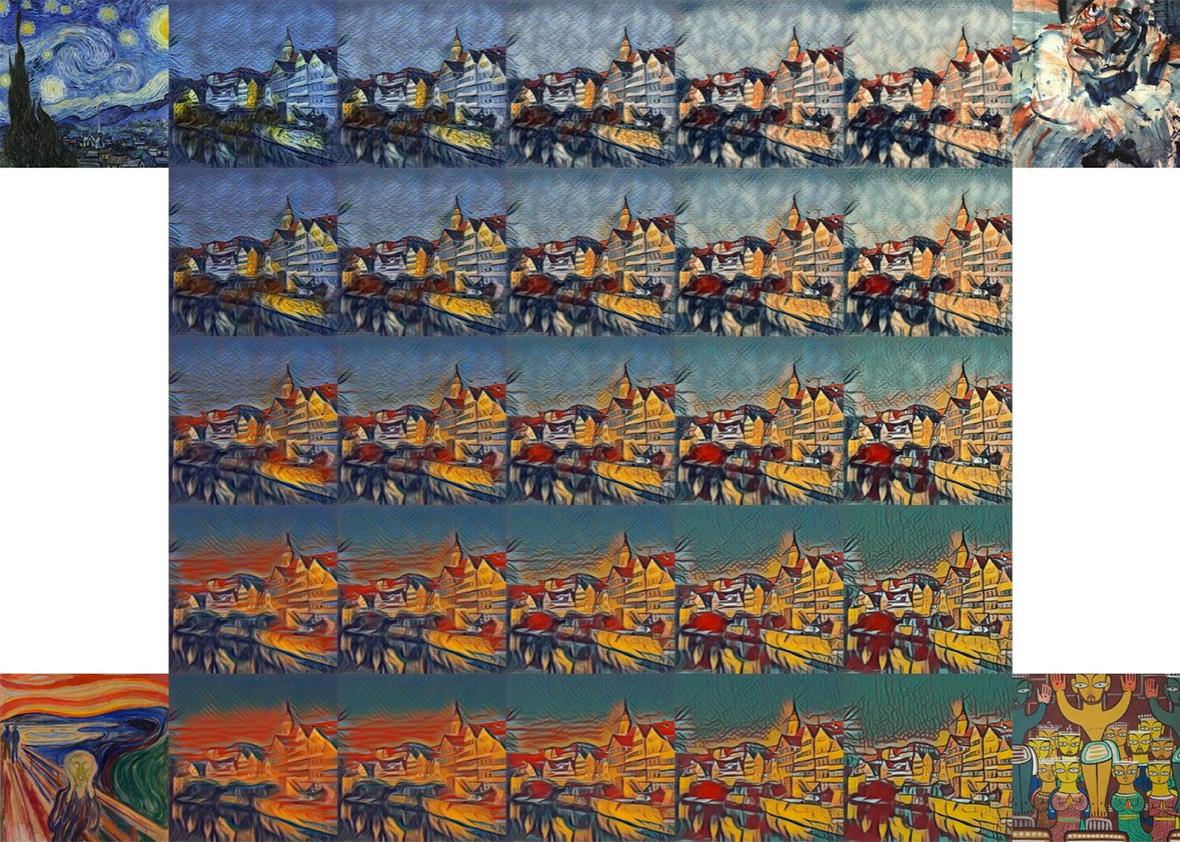

As the artistic products of algorithms continue to enter the mainstream, we’re also starting to see companies and academic institutions pour money and man-hours into improving the speed and capabilities of the production methods behind them. Cornell University and Adobe researchers have been working on a sophisticated version of style transfer for photos. The process they’re developing can, among other tricks, take the sunset lighting of one photo and apply it to a daytime photo of another location. Google, too, has been working on “supercharging style transfer.” Researchers there found a way to get machines to learn how to combine multiple styles into a single image, mixing them as if they were paints. Gatys and his colleagues at Tübingen have continued to improve their system too, including finding a way to let users fine-tune the output by maintaining certain colors or only styling particular areas of an image.

With this surge of interest in these art-generating algorithms, we appear to be near a breakout point for this technology. Yet we still haven’t reckoned with the question of what these tools mean for the creatives who use them. Sure, artists have always been beholden to the capabilities and limitations of media available to them. The history of art is also the history of technology. Premixed paints, artificial colors, printing presses, cameras, phonographs, CDs, digital production tools, the internet, and more have all revolutionized how and what artists produce and how and what their patrons consume. Creators have always been borrowing, lifting, sampling, and remixing the work of other artists, and using tools to augment their own abilities, too.

What’s different is that as the complexity and capability of these advanced algorithms grow, it’s becoming harder for artists to control what the machines will generate from their inputs. Though users can control what data an artificial neural network is trained on, the rules the system derives from the data can be a bit of a black box. Even the programmers who build neural networks can struggle to explain how the networks arrive at those rules. This opacity makes it difficult or impossible for artists to tinker with a machine’s stylistic output. Often, the only way to affect it is through trial and error at the input stage, which shifts the digital artist’s role to that of algorithm wrangler.

Essentially, this kind of sophisticated software shifts more of the creative legwork to machines. Rodley, of floral dinosaur fame, likened making style transfer pieces to being a director trying to get a good performance out of an actor. “You don’t press a button and something looks amazing,” he said. “I’ve learned the quirks and the personality traits of the algorithm.” Recently, he said, he spent hours in Photoshop tweaking the colors of Botticelli’s Birth of Venus to try to convince the algorithm to recreate the painting in golden retriever fur. (Eventually he got results that are hairy but recognizable.)

These programs may change the way we value the artists behind them, too. As these systems improve and companies like Adobe and Google start rolling them into client products, we’re likely to see a lot more algorithmic art penetrate mainstream culture. The party line is that these tools will take some of the burden off artists (graphic designers, studio musicians, film editors), allowing them more time to focus on “real work.” But as with other forms of automation, leaving more time for “real work” may translate into fewer job opportunities for artists in the digital economy.

In the future, as algorithms get better at picking up on the underlying aesthetic rules that expert artists often spend years learning, a company could create an entire advertising campaign with only a stylesheet, some wireframes, and a few designers to wrangle the two together. Like Rodley, those wranglers may not even need a background in art.

It’s already starting to happen. Taobao, the Chinese shopping website owned by the Alibaba Group, created banner ads for its mega-shopping holiday Singles’ Day by training algorithms to recognize the design patterns of successful e-commerce ads and generate new ones based on them. Just a few weeks ago, Airbnb showed off an internal tool that uses algorithmic art techniques to convert rough, hand-drawn sketches into fully designed and functional web prototypes.

Optimists may argue that nebulous, innately human qualities like innovation, emotion, and intent will protect artists from being replaced by algorithms. And it’s true that, at least for the near future, these qualities will protect some parts of the creative process and some types of artists (notably fine artists, whose field places a high value on originality). But this does little to reassure artists in more commercial disciplines like graphic design, jingle writing, or stock photography, who may find aspects of their jobs replaced by suboptimal but vastly cheaper algorithms.

It’s also unclear how much creative control artists can give to algorithms while retaining legal ownership over their works. In some cases, software owners can use end-user license agreements—that inscrutable fine print we blindly click “I agree” on in order to use many digital products—to claim or waive ownership, or something in between.

According to one 2001 district court ruling, Torah Soft Ltd. v. Drosnin, when an end user (in this context, an artist) makes something with the aid of software, the software company may be able to claim ownership of the work if the software is deemed responsible for “the lion’s share of the creativity.”

It’s too early to know whether other courts will follow or diverge from that reasoning, but the question of what constitutes a “lion’s share” recently resurfaced in a dramatic and potentially landmark case between the elite computer graphics company Mova and the major film studios Disney, Fox, and Paramount.

The studios admit to knowingly using stolen Mova technology to turn actors’ facial expressions into photorealistic computer graphics in movies like Guardians of the Galaxy and The Curious Case of Benjamin Button. Mova claims that because its tools do the lion’s share of the creative work in generating the faces, any of the animojilike characters that appear in the films are unlawful derivative works (read: Mova is entitled to a lot more money). The studios counter that the actors and directors do the lion’s share of the work, and that if the court sides with Mova, it would set a dangerous precedent for digital artists.

“If [Mova’s] authorship-ownership theory were law, then Adobe or Microsoft would be deemed to be the author-owner of whatever expressive works the users of Photoshop or Word generate by using those programs,” wrote Kelly Klaus, the attorney for the studio defendants.

Today, the pervasive open-source culture in the algorithmic art community protects artists from some of these worries. But as tools shift into the private sphere, artists may find themselves in trouble, depending on how the courts rule.

Ceding creativity to software may also shift a final element of control for artists: how audiences perceive their work. Art lovers may have to transform their analysis of art—spending hours interpreting a stylistic choice or psychoanalyzing a creator’s intent—when such questions of intent are put to the black box of an artificial neural network. There may also be confusion, embarrassment, or distrust in a world where human authorship becomes increasingly unclear.

This will likely make art consumption less about criticism and more about taste and aesthetics. That may disappoint art critics, Rodley acknowledges, but he believes it makes art more accessible. “It threatens the current model of art, but not human creativity,” he says. “We should challenge the idea that we should only listen to artists.”

It’s true that the technologies that have radically changed the ways art is produced and consumed have all yielded new and profound forms of creative expression. As Renoir said, “Without colors in tubes, there would be no Cézanne, no Monet, no Pissarro, and no Impressionism.”

However, these tools can come at the expense of artists. With the printing press came mass reproductions. With MP3s came piracy. With computers came a shift to digital media. With Photoshop came distrust. Algorithmic art will be no exception to these historic forces. The vibrant world of artistic potential that algorithms open up will be darkened by the risk that artists lose control.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.