The statistical theory of traditional polling is amazing: In theory, a random sample of 1,000 people is enough to estimate public opinion within a margin of error of +/- 3 percentage points.
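For readers who want to check the arithmetic, here is a minimal sketch of where the 3-point figure comes from. It assumes a simple random sample, a 95 percent confidence level, and the usual normal approximation for a proportion near 50 percent. The function name `margin_of_error` is ours, chosen for illustration.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Half-width of an approximate 95% confidence interval for a proportion
    estimated from a simple random sample of n people.
    p=0.5 is the worst case; z=1.96 is the 95% normal quantile."""
    return z * math.sqrt(p * (1 - p) / n)

moe = margin_of_error(1000)
print(f"{100 * moe:.1f} percentage points")  # prints "3.1 percentage points"
```

Because the sample size sits under a square root, quadrupling the sample only halves the margin of error.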

But this theory does not hold in practice. We cannot really get a random sample of American voters: Fewer than 10 percent of people respond to pollsters who call them on the phone, and it is not easy to separate voters from nonvoters. The resulting samples are unrepresentative of the general population of voters. Thus, even though pollsters can and do adjust their samples for the consistent overrepresentation of women, older people, whites, etc., in surveys, we have shown that the actual margin of error is closer to 7 percentage points. Aggregating polls within a given contest does improve accuracy, but it does not solve all of polling’s problems.
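One way to see why aggregation helps only up to a point is to split each poll's error into a bias shared by every poll in the contest and independent sampling noise. The sketch below is a simplifying assumption of ours, not the analysis behind the 7-point figure, and the numbers in it are hypothetical.

```python
import math

def rmse_of_average(k, shared_bias, independent_sd):
    """Root-mean-square error of the average of k polls, assuming every poll
    carries the same bias plus independent noise with the given standard
    deviation. Averaging shrinks the noise term but not the bias term."""
    return math.sqrt(shared_bias ** 2 + independent_sd ** 2 / k)

# Hypothetical values, in percentage points.
SHARED_BIAS = 2.0
INDEPENDENT_SD = 3.0

for k in (1, 10, 100):
    error = rmse_of_average(k, SHARED_BIAS, INDEPENDENT_SD)
    print(f"{k:>3} polls: {error:.2f} points")
```

Under these assumptions, the error of the average falls as polls are added but never drops below the shared bias of 2 points.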

In the 2016 presidential election, the averages of traditional polls had Hillary Clinton leading Donald Trump by between 3 and 4 percentage points. This was actually very close to her 2.1 percentage point win in the popular vote. The polls did less well with the Electoral College: State-level assessments underestimated Trump’s chance of winning. Reconsidering those polls suggests that the corrections performed for nonresponse in state polls were not as thorough and effective as those done for national surveys. We heard a lot about the poll misses in Wisconsin and Michigan, but in fact the largest discrepancies between state polls and actual votes came in strongly Republican states such as North Dakota and West Virginia, where it seems that rural, less-educated whites were disproportionately less likely to respond to surveys, and pollsters did not do a good enough job adjusting for these factors.

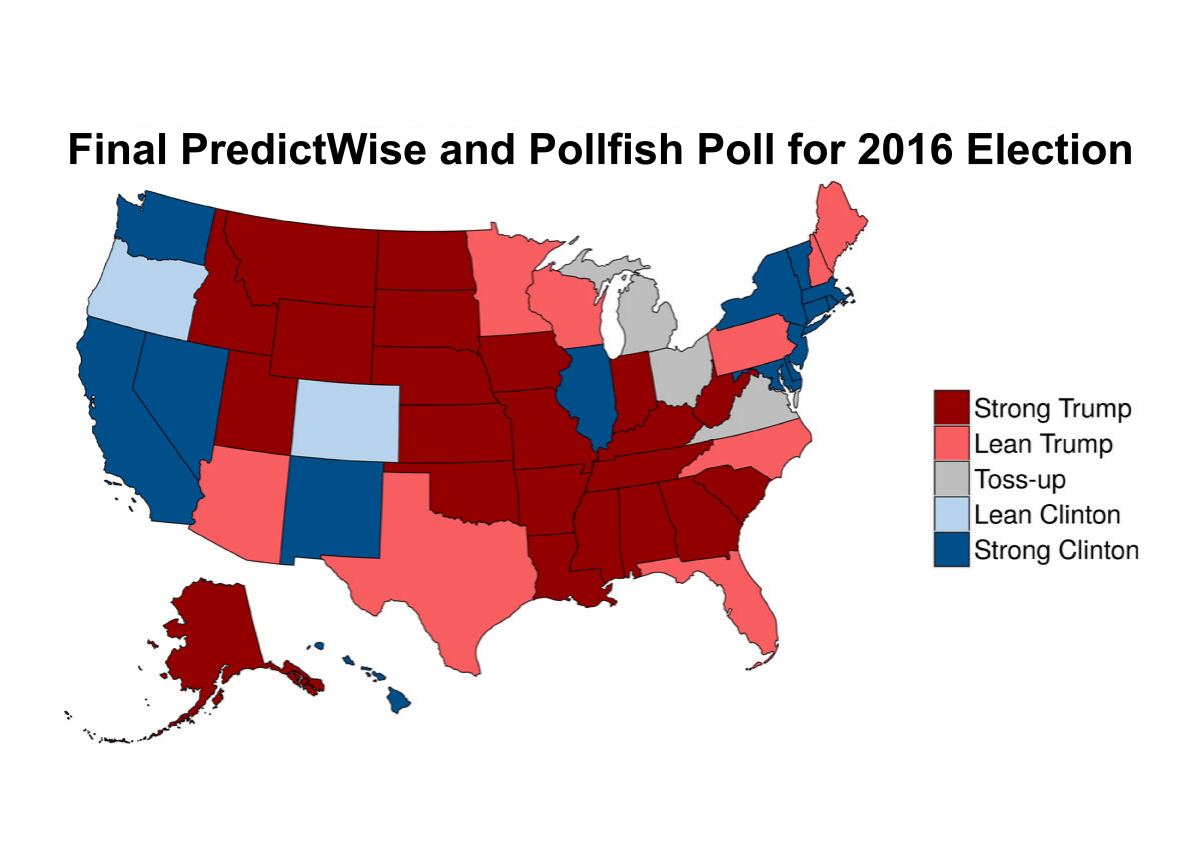

In the wake of the 2016 election, which was not the massive polling failure that many imagine but rather a misstep that exemplifies the current flaws of the system, many are asking, “What is the future of polling?” Traditional polls cost a lot of money, take a lot of time, and are very rigid in their question design; all of this is done in pursuit of the impossible hope of random and representative samples. The good news is that it is possible to correct for differences between sample and population. No, we can never get all the way there, but in the recent U.K. general election, the pollster YouGov used the statistical technique of multilevel regression and poststratification, or MRP, to come close to the final result in a race where conventional pollsters were far off. And, building on our work with Xbox in 2012, two separate studies, both of which published their results during the 2016 presidential cycle, showed that this approach can work with nonrepresentative samples: First, using the opt-in polling on the front page of MSN, an MRP correction got 46 of the 50 states and D.C. right, basically matching the aggregation of the traditional polls. Second, using an ad-based mobile collection, Pollfish, the accuracy was even better. (Full disclosure: We have academic and business ties to YouGov and Pollfish.)
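To make the idea of correcting a sample concrete, here is a minimal sketch of the poststratification half of MRP: estimate support within each demographic group, then weight the groups by their share of the population rather than their share of the sample. The multilevel regression step, which stabilizes estimates for small groups, is left out, and the groups and numbers are hypothetical, not taken from any of the studies mentioned above.

```python
def poststratify(cell_support, cell_weight):
    """Weighted average of group-level support, with each group weighted
    by cell_weight (sample counts or population shares)."""
    total = sum(cell_weight.values())
    return sum(cell_support[g] * cell_weight[g] for g in cell_weight) / total

# Hypothetical opt-in sample that overrepresents college graduates.
sample_count = {"college": 70, "non_college": 30}
cell_support = {"college": 0.60, "non_college": 0.40}

# Hypothetical population shares, e.g. from census data.
population_share = {"college": 0.35, "non_college": 0.65}

raw = poststratify(cell_support, sample_count)           # weights by who answered
adjusted = poststratify(cell_support, population_share)  # weights by who exists
print(f"raw: {raw:.2f}, adjusted: {adjusted:.2f}")  # prints "raw: 0.54, adjusted: 0.47"
```

In this toy example the unadjusted sample suggests majority support, while the reweighted estimate does not. The adjustment can only correct for characteristics that are measured in both the sample and the population.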

PredictWise and Pollfish

The most interesting developments in polling, however, go far beyond mere tweaks to the standard paradigm. Traditional polls are atomistic, focusing nearly entirely on characteristics and opinions of the individual respondent and aiming for a single target: Election Day. It’s when we move beyond these narrow focus points that we will start to see gains.

Instead of asking people just about their own views, let’s also ask about their families, friends, and neighbors. In addition to vastly increasing the effective sample size of a survey, this sort of network question has the potential to give insights into the sorts of political enthusiasms and dissatisfactions people feel strongly enough about to convey to others. Further, why ask the same people the same questions? Online and mobile surveys make branching or adaptive polling easy and effective. The system can ask each respondent whichever question is most needed next.

And instead of zeroing in on elections, we should think of polling and public opinion as a more continuous process for understanding policy. The Affordable Care Act, or Obamacare, was hampered by polls showing low public support, with Republicans seeing it as vulnerable. But because traditional polling is expensive, the pollsters were limited to asking a single question about overall support. At the same time, every single component (except the individual mandate) had strong bipartisan support. Cheaper and more flexible polling methods will allow researchers to study the nuances of public opinion more regularly, providing better insight into what Americans want politicians to do once they are elected.

So what is the future of polling? First, a move away from telephone surveys toward online and mobile polling and the gathering of opinion in less traditional ways, including data from searches and purchases. Second, a correspondingly larger need for sophisticated adjustments as pollsters work with data that are less immediately representative of the population. Third, network polling, in which we learn not just about individuals’ views but also what they are hearing from family, friends, and neighbors. And, finally, continuous polling that expands past the horse race, reflecting the continually contested nature of politics in our polarized era.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.