Poetry allows us to explore the depths of what it means to be human. It evokes emotion, imagination, and connection between author and reader.

Perhaps because these compositions represent the most human of pursuits, it seems like one of the last talents humans would ever cede to machines. Yet inventors have been endeavoring to build exactly such devices—and the history of attempts to create these artificial bards is longer than you might think.

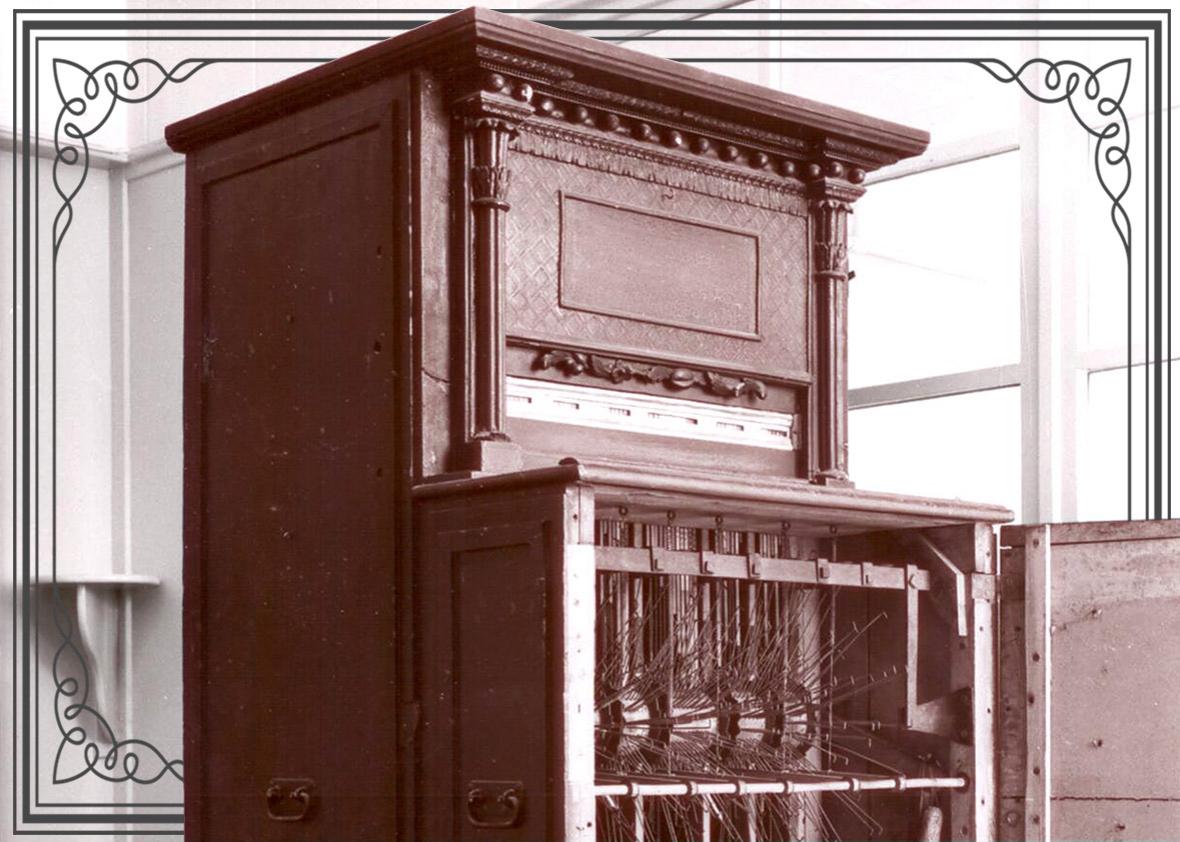

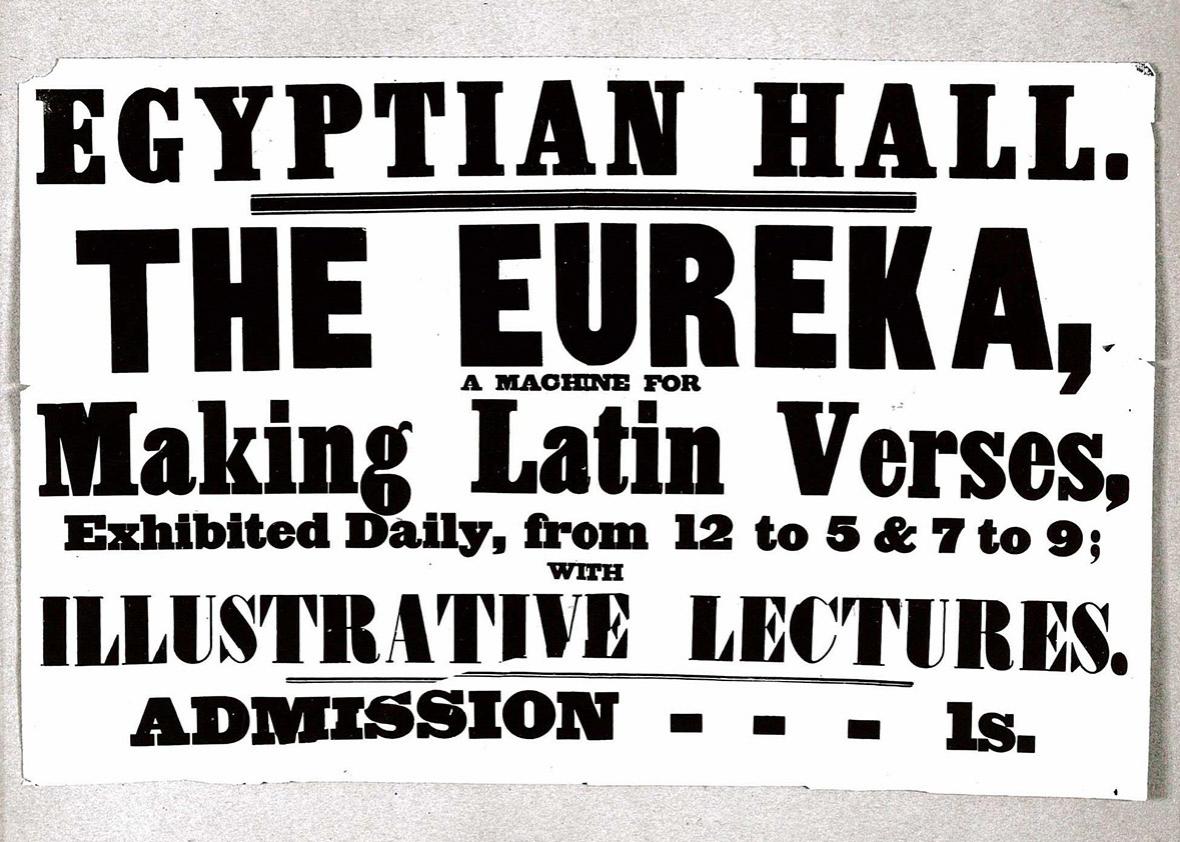

One of the earliest known poetry generators was born from the Victorian enthusiasm for automata. In 1845, inventor John Clark debuted his Eureka machine at the Egyptian Hall in Piccadilly, London. For the price of one shilling, visitors to the exhibition space could wind up the wooden, bureau-like contraption and watch the mechanized spectacle of wooden staves, metal wires, and revolving drums grinding out a line of Latin verse that would appear in the machine’s front window. More specifically, the machine produced a grammatically and rhythmically correct line of dactylic hexameter, the meter used by Virgil and Ovid. It did so by filling a fixed six-word pattern that never varied in scansion or syntax: always adjective-noun-adverb-verb-noun-adjective, as in “martia castra foris prænarrant proelia multa” (“martial encampments foreshow many battles abroad”). Despite these constraints, the system was capable of churning out an estimated 26 million permutations.
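The arithmetic behind a figure like 26 million is simple multiplication: a fixed six-slot pattern yields as many lines as the product of the six slot sizes. The Python below is a minimal sketch of that slot-filling idea under stated assumptions. The two-word lists per slot are hypothetical (only the first word in each list comes from the sample line above), and, unlike the real machine, the sketch does not check that every choice scans.

```python
import itertools
import math
import random

# Hypothetical, illustrative word lists: NOT the Eureka's actual vocabulary.
# Only the first word in each slot comes from the sample line; scansion is not checked.
SLOTS = [
    ("adjective", ["martia", "horrida"]),
    ("noun", ["castra", "arma"]),
    ("adverb", ["foris", "semper"]),
    ("verb", ["prænarrant", "nuntiant"]),
    ("noun", ["proelia", "bella"]),
    ("adjective", ["multa", "dira"]),
]


def random_line(rng: random.Random) -> str:
    """Fill the fixed six-slot pattern with one randomly chosen word per slot."""
    return " ".join(rng.choice(words) for _, words in SLOTS)


def count_lines() -> int:
    """The number of distinct lines is the product of the slot sizes."""
    return math.prod(len(words) for _, words in SLOTS)


def all_lines():
    """Enumerate every possible line, one word per slot, in slot order."""
    for combo in itertools.product(*(words for _, words in SLOTS)):
        yield " ".join(combo)


if __name__ == "__main__":
    print(random_line(random.Random(1845)))
    print(count_lines())  # 2 words in each of 6 slots: 2**6 = 64
```

With two words per slot the pattern gives only 2^6 = 64 lines. For comparison, six slots of about 17 words each would give 17^6 = 24,137,569 lines, in the same range as the Eureka's estimated output; that is illustrative arithmetic, not a claim about the machine's actual word lists.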

Critics of the era were skeptical. One reviewer called it a “useless toy.” Another deemed it “little better than a mere puzzle, which any school-boy might perform by a simpler process.”

Modern scholars, however, have demonstrated a deeper appreciation. “The Eureka machine was much more than a show-place diversion,” Jason David Hall wrote in a 2007 paper. Instead, he said, it was “situated at the intersection of popular culture and scholarly specialization,” and represented “at once the technological embodiment of and a parody of Victorian prosodic science [the study of verse and meter].” The Victorians loved a show, especially one that drew upon both science and illusion for an unforgettable performance. The Eureka offered both. The machine was a feat of industrial progress, but sensational enough to be entertaining. And it can still wow. The unveiling of a recently restored version of Clark’s invention drew crowds of excited visitors who, like spectators past, reveled in the show.

Around the same time as the Eureka’s original debut, the now-defunct Sunday Mercury newspaper in New York also ran with the idea of an automaton bard, in a feature it called “machine poetry.” It became a sensational running joke in the paper, which printed clumsy, robotic lines purportedly written by a hand-cranked machine powered by a staff drunkard named Bill. One poem, “Love’s Victim,” reads:

Oh, list to me Lizzy,

You sweet little booger!

Love makes me feel dizzy,

Like brandy and sugar!

My vision is reeling –

My brains are all burning,

And the sweet cream of feeling

Is curdled by churning;

For my heart ’neath my jacket

Is up and down jumping;

And it keeps up a racket

With its thumping and bumping.

Other publications quickly jumped on the machine poetry bandwagon. The Poughkeepsie Journal in New York announced that it had purchased a water-powered model that would soon be generating “hydraulic poetry.” Though the Eureka and the Mercury’s “mechanical” poet were mostly treated as oddities, both the real device and the fake one encouraged audiences to consider what machines were really capable of.

Even the Eureka wasn’t really an example of early artificial intelligence, though. It took the advent of computers to usher in a new wave of machine verse.

In 1959, German mathematician Theo Lutz created what is commonly considered the first computer poetry by writing a program that recombined words drawn from Franz Kafka’s unfinished novel Das Schloss (The Castle). The result was a series of nonsensical sentences that read as though they had been written by the Mercury’s resident alcoholic, Bill:

EIN TURM IST WUETEND. JEDER TISCH IST FREI.

EIN FREMDER IST LEISE UND NICHT JEDES SCHLOSS IST FREI.

EIN TISCH IST STARK UND EIN KNECHT IST STILL.

NICHT JEDES AUGE IST ALT. JEDER TAG IST GROSS.

A TOWER IS ANGRY. EVERY TABLE IS FREE.

A STRANGER IS QUIET AND NOT EVERY CASTLE IS FREE.

A TABLE IS STRONG AND A LABOURER IS SILENT.

NOT EVERY EYE IS OLD. EVERY DAY IS LARGE.

This output doesn’t make for great reading, but Lutz’s program opened the floodgates for computer-generated text. It proved that language could be mathematized so that computer programs could combine words in ways the Eureka never could. Nevertheless, such computer poetry remained largely confined to academic communities with the necessary equipment and expertise. The general public may have read about Lutz’s program in the newspaper, but they couldn’t pay a shilling to try it out for themselves.
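One way to picture what “mathematizing” language means is a generator that picks words at random from small lists and slots them into a fixed grammatical frame. The Python below is a hedged sketch of that general idea, not a reconstruction of Lutz’s program: its nouns, adjectives, and determiners are limited to ones that appear in the sample output above, and the gender table (which keeps JEDER and JEDES matched to the right nouns) is an assumption of the sketch.

```python
import random

# Nouns taken from the sample output above, tagged with grammatical gender
# (m = masculine, n = neuter). The gender table is this sketch's assumption.
NOUNS = {
    "TURM": "m", "TISCH": "m", "KNECHT": "m", "TAG": "m",
    "SCHLOSS": "n", "AUGE": "n",
}
ADJECTIVES = ["WUETEND", "FREI", "LEISE", "STARK", "STILL", "ALT", "GROSS"]
# Determiners seen in the sample, split by gender so each clause agrees.
DETERMINERS = {
    "m": ["EIN", "JEDER", "NICHT JEDER"],
    "n": ["EIN", "JEDES", "NICHT JEDES"],
}


def clause(rng: random.Random) -> str:
    """Build one 'DETERMINER NOUN IST ADJECTIVE' clause."""
    noun = rng.choice(list(NOUNS))
    det = rng.choice(DETERMINERS[NOUNS[noun]])
    return f"{det} {noun} IST {rng.choice(ADJECTIVES)}"


def sentence(rng: random.Random) -> str:
    """Join one or two clauses with UND and end with a full stop."""
    clauses = [clause(rng) for _ in range(rng.choice([1, 2]))]
    return " UND ".join(clauses) + "."


def poem(rng: random.Random, n: int = 4) -> str:
    """Produce n random sentences, one per line."""
    return "\n".join(sentence(rng) for _ in range(n))


if __name__ == "__main__":
    print(poem(random.Random(1959)))
```

Every line is grammatical and every line is meaningless, which is roughly the effect of the sample above: the program controls the form, and chance supplies the content.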

Personal computers, unsurprisingly, greatly expanded the development of computer poetry. In 1984, William Chamberlain and Thomas Etter (little is publicly known about either) unveiled the text generator Racter with the release of The Policeman’s Beard Is Half Constructed, a collection of poetry, prose, and dialogue assembled by the program. Among the poems:

More than iron, more than lead, more than gold I need electricity.

I need it more than I need lamb or pork or lettuce or cucumber.

I need it for my dreams.

In the opening of the compendium, Chamberlain declares that this is “the first book ever written by a computer” and that, with the exception of his introduction, all contents were composed by the program. But some have questioned this claim.

To make Racter run, Chamberlain and Etter developed a programming language called INRAC. The system relied on tags, identifiers attached to each word to ensure it was used in a syntactically and semantically appropriate way, which worked in conjunction with templates that placed the words properly in a sentence.
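The tag-and-template idea can be sketched in a few lines of Python. This is not INRAC, whose actual syntax isn’t shown here: the lexicon, the tag names, and the `<tag+tag>` slot notation below are all hypothetical, with vocabulary borrowed partly from the Racter poem quoted above.

```python
import random
import re

# Hypothetical tagged lexicon: each word carries tags describing how it may be used.
LEXICON = [
    ("lettuce", {"noun", "food"}),
    ("cucumber", {"noun", "food"}),
    ("lamb", {"noun", "food"}),
    ("electricity", {"noun", "abstract"}),
    ("dreams", {"noun", "abstract"}),
    ("ruminate", {"verb", "intransitive"}),
    ("inexorable", {"adjective"}),
]

# Hypothetical template syntax: <tag+tag> marks a slot that must be filled
# by a word carrying ALL of the listed tags.
TEMPLATES = [
    "I need <noun+abstract> more than I need <noun+food> or <noun+food>.",
    "I <verb+intransitive> about <adjective> <noun+food>.",
]

SLOT = re.compile(r"<([a-z+]+)>")


def words_with(tags: set) -> list:
    """Return every word whose tag set includes all of the requested tags."""
    return [word for word, word_tags in LEXICON if tags <= word_tags]


def fill(template: str, rng: random.Random) -> str:
    """Replace each slot in the template with a randomly chosen matching word."""
    def pick(match: re.Match) -> str:
        tags = set(match.group(1).split("+"))
        return rng.choice(words_with(tags))
    return SLOT.sub(pick, template)


if __name__ == "__main__":
    rng = random.Random(1984)
    for template in TEMPLATES:
        print(fill(template, rng))
```

Because every slot can be filled only from a handful of pre-tagged words, the output varies on the surface but is tightly controlled underneath, which is the kind of constraint critics point to when they question how much of the book the program wrote on its own.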


When Chamberlain and Etter released commercial versions of INRAC and Racter in 1985, they received almost unanimously negative reviews. One critic found the INRAC language “as idiosyncratic as the authors of Racter or Racter himself (itself?).” Other users, including modern Racter enthusiast Jorn Barger, concluded that the commercial version of Racter would have been incapable of writing much of The Policeman’s Beard without relying on boilerplate patterns and strict constraints imposed by its human creators.

While the words and constructions used in The Policeman’s Beard seemed to be intentionally wacky, they were repeated enough to give the book a sense of continuity. For example, Racter regularly referenced certain foods: lettuce, tenderloins, cucumber, and lamb. Coupled with particularly bombastic verbs and descriptors (like ruminate, inexorable, and inflame), the book makes for a surprisingly entertaining read, simply because it’s so outrageous. According to Barger, though, the extreme limitations on potential words and constructions made the templates closer to text degeneration than text generation. In other words, the program functioned as a sort of digital game of Mad Libs, not an independent scribe.

The original iteration of Racter that allegedly wrote The Policeman’s Beard hasn’t survived, so there’s no way of verifying these claims. However, nearly everyone, including Chamberlain himself, agrees that the program can’t be considered an artificial intelligence. You can still access the commercial chatbot version of Racter online, though prepare to have your patience tested.

In the decades following Racter, computing power took off, and programmers brought increasingly advanced poetry-generation systems to life. Among others, there’s Ranjit Bhatnagar’s Pentametron, a Twitter bot that pairs public tweets written in iambic pentameter into rhyming couplets. There’s Ray Kurzweil’s cybernetic poet, which generates short poems in the styles of various human poets. And there’s Jack Hopkins’ system, which learned to produce verse that adheres to particular rhythms, styles, and themes after being fed 7.56 million words of mostly 20th-century poetry. You can even generate your own text with a free online tool that demonstrates how Markov chains work.
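A word-level Markov chain records, for each word (or run of words) in a source text, which words followed it, then generates new text by repeatedly sampling a follower of the most recent word. The Python below is a generic sketch of that technique, not the code of the online tool mentioned or of any system named above; the corpus is simply the Racter poem quoted earlier.

```python
import random
from collections import defaultdict

CORPUS = (
    "More than iron, more than lead, more than gold I need electricity. "
    "I need it more than I need lamb or pork or lettuce or cucumber. "
    "I need it for my dreams."
)


def build_chain(text: str, order: int = 1) -> dict:
    """Map each run of `order` consecutive words to the list of words that follow it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i:i + order])
        chain[key].append(words[i + order])
    return chain


def generate(chain: dict, rng: random.Random, length: int = 12) -> str:
    """Random walk: start from a random context, then keep sampling a successor."""
    key = rng.choice(list(chain))
    out = list(key)
    while len(out) < length:
        followers = chain.get(tuple(out[-len(key):]))
        if not followers:  # dead end: nothing ever followed this context
            break
        out.append(rng.choice(followers))
    return " ".join(out)


if __name__ == "__main__":
    chain = build_chain(CORPUS)
    print(generate(chain, random.Random(2024)))
```

Because the follower lists keep duplicates, common continuations (like “need” after “I” in this corpus) are sampled in proportion to how often they occur. Raising `order` to 2 or 3 makes the output hew closer to the source text, at the cost of variety.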

The programs are also getting far better at mimicking humans. As writer-programmer duo Oscar Schwartz and Benjamin Laird demonstrate with their project Bot or Not, a quiz branded as “a Turing test for poetry,” language-simulating algorithms have improved to the point that it can be difficult to distinguish computer-generated verse from human-authored verse. In a 2015 talk about the quiz, for example, Schwartz noted that most players judged a particular poem by Gertrude Stein to be the work of a bot, while they took one written by an algorithm fed on Emily Dickinson’s poetry to be human.

This development may be unsettling to some. After all, there’s a certain strangeness to the idea of computers entering the art form Allen Ginsberg deemed “the one place where people can speak their original human mind.” Poetry-generation programs have come a long way since the Eureka churned out stilted Latin hexameter, or even since Racter offered up preposterous lines about a nasty, dull, rumrunning pig.

And yet, in the nearly two centuries that humans have been building such machines, we’ve only managed to develop instruments that imitate. They manufacture poetry based on our rigid constraints: always following, emulating, or reworking writings from a human hand. The devices don’t assign meaning to the phrases they emit. Nor do they compose with emotion, memory, or intent. At least for now.

Schwartz admits that his Turing test for poetry—like the original test itself—was meant more as a philosophical provocation than a definitive assessment of who or what passes for a real person. Instead, he says, the project has led him to understand that humanness is not a scientific fact, but an ever-shifting notion that we construct.

“More than any other bit of technology, the computer is a mirror that reflects any idea of the human we teach it,” he said. We can question whether we’re really building human-like artificial intelligence, he added, but we should really be asking what idea of the human we want to have mirrored back.

For now, that reflection can be a bit rough. Hopkins’ system, for example, still produces some texts of questionable quality. But every once in a while, it produces something profound:

A thrilling flash of wind waiting to fall,

with summer sun and vapor and worn rock.

A violent landscape for this world and all,

slipped from the hill or by a ticking clock.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.