Emerging technologies are curious things. With their aura of risk and disruption, they seem to come out of nowhere—but their patina of novelty typically camouflages a much longer history. Cloud computing descended from 1960s-era computational time-sharing; Nikola Tesla wrote about the possibilities of self-driving cars decades ago. Indeed, promoters’ obscuration of emerging technologies’ past is essential to their future promise. Their seeming newness, coupled to their vulnerability to market forces and public reaction, helps generate hype. In turn, the hyperbole—so the model goes—drives interest and investment.

Since the 21st century began, few emerging technologies have been so heavily promoted, funded, and debated as nanotechnology. Defined (currently) by the U.S. government as “the understanding and control of matter at the nanoscale” (a nanometer is one-billionth of a meter), nanotechnology as a field and research community has received billions of dollars in funding, making it one of the nation’s largest technology investments since the space race.

Yet the idea that manipulation of the material world at the near-atomic scale was possible, even within grasp, possesses a historical arc stretching far back to the height of the Cold War. From the 1950s onward, investments in physics, chemistry, and the relatively new field of materials science provided a research foundation for the eventual emergence of nanotechnology. In fact, architects of a post–Cold War national nano-research program often traced its origins back to a discrete point in time and space.

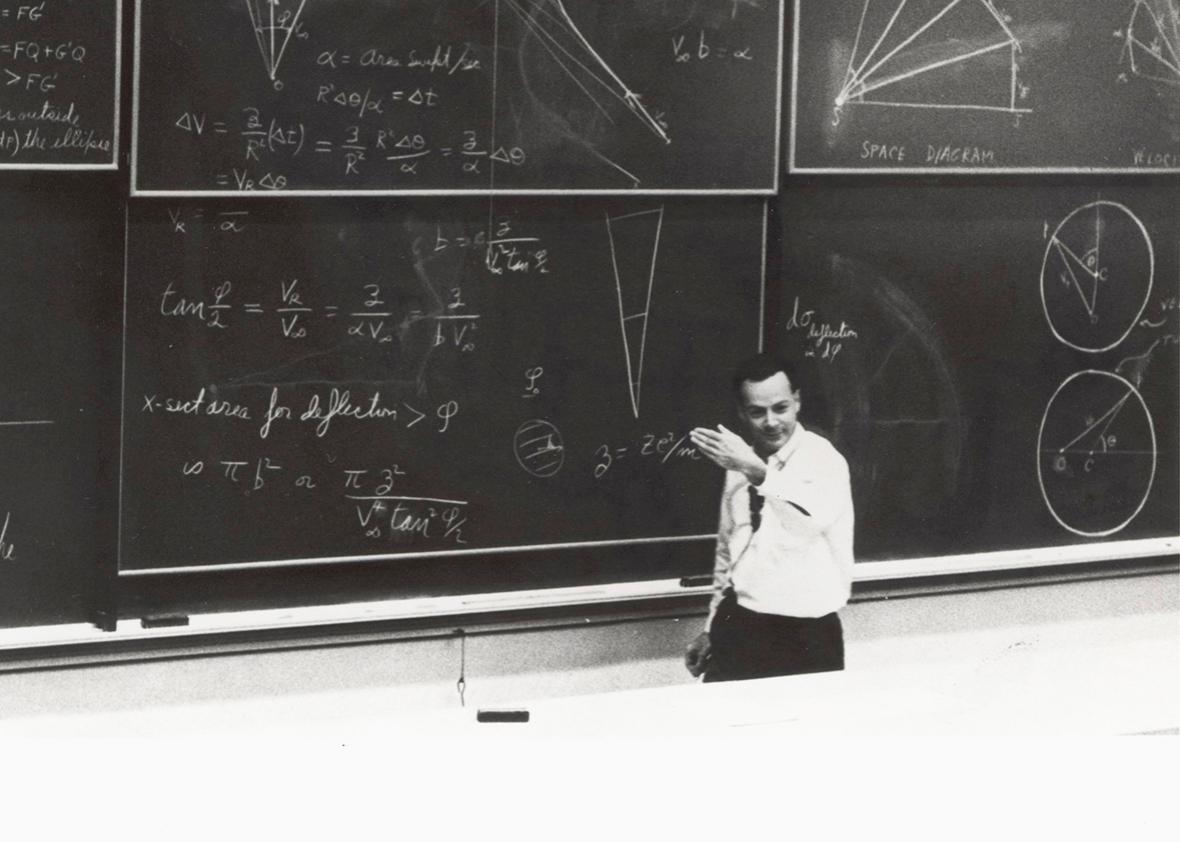

On Dec. 29, 1959, Caltech physicist and future Nobel winner Richard P. Feynman gave an after-dinner address in Pasadena, California, to members of the American Physical Society. His talk, whimsically titled “There’s Plenty of Room at the Bottom,” predicted that scientists would build microscopic machines that could manipulate matter at a very small scale with engineering done at the “bottom,” i.e., the molecular level.

In “Plenty of Room,” Feynman blended speculative extrapolations of existing technological trends with ideas freely borrowed from recent developments in biology and science fiction. (Robert Heinlein’s 1942 novella Waldo was one source of inspiration.) However, Feynman himself did no research that built on his over-the-horizon speculations. The 1965 Nobel Prize he shared was for theoretical physics research on how light and matter interact at the most fundamental levels. Although popular magazines like Time and the Saturday Review reported on ideas Feynman outlined, his talk soon sank out of sight.

The talk remained nearly invisible for more than three decades, until scientists and policymakers rediscovered “Plenty of Room” and interpreted it as a visionary portent of what had become known as nanotechnology. When President Bill Clinton visited Pasadena in January 2000, he gave a speech at Caltech and announced what evolved into the National Nanotechnology Initiative. Feynman-esque visions and ideas informed his address. But Clinton, of course, didn’t happen upon nanotechnology by himself.

Richard E. Smalley—the name invites puns—was one of a small cadre of mainstream scientists and policy experts who had put nanotechnology on the Clinton administration’s radar screen in the 1990s. A chemistry professor at Rice University, Smalley shared the Nobel Prize in 1996 for the discovery of carbon-60 molecules (better known as “buckyballs”). He realized that nanotechnology was a broad umbrella under which researchers from many disciplines could gather. Smalley was soon telling his university’s leaders, public audiences, and congressional committees that nanotechnology was the technological future—a vast new scientific frontier for American researchers and students to explore.

A lot was happening in the mainstream world of science and engineering to buttress Smalley’s advocacy. For instance, in the early 1980s, Heinrich Rohrer and Gerd Binnig, working at an IBM lab in Zurich, pioneered new instrumentation such as the scanning tunneling microscope (and won the 1986 Nobel Prize in Physics). This instrument and later variants gave scientists and engineers the ability to not only see but also move individual atoms and place them where they wanted.

So far, this narrative of nanotechnology’s history is full of the actors one might expect: corporate laboratories, research engineers, university professors, Nobel winners, and science policy managers. But other people had read Feynman’s original 1959 address and were eagerly tracking developments in nanotechnology. One of these people was a self-described “exploratory engineer” named K. Eric Drexler. In fact, it was Drexler who, almost single-handedly, introduced and popularized the word nanotechnology in the first place.

In the 1970s, as an undergraduate at MIT, Drexler was deeply influenced by Gerard O’Neill, a Princeton professor who achieved considerable public recognition for promoting large-scale space colonies. As the excitement of the space program waned, Drexler was transfixed by the potential of new technological frontiers that lay not beyond our planet but in the manipulation of matter at the smallest scales. In this, he was inspired by that era’s emerging technologies: biotechnology and genetic manipulation, as well as the continuing shrinkage of microelectronics.

In 1981, Drexler presented his ideas, which he initially called “molecular engineering,” in the Proceedings of the National Academy of Sciences. His short article claimed that the ability to design biomolecules could lead to the manufacturing of molecular-scale devices that, in turn, could make “second-generation machines” and then eventually lead to “construction of devices and materials to complex atomic specifications.” Long on ideas but short on specific scientific details, Drexler’s early writings nonetheless offered an enthusiastic view of a technological future in which engineers had precise control over the material world.

After moving to Silicon Valley in 1985, Drexler completed Engines of Creation, a book summarizing his vision of the (nano)technological future. With Marvin Minsky, MIT’s artificial intelligence guru who later supervised Drexler’s Ph.D. work, providing an introduction, Engines became the canonical text for what Drexler and others had begun to commonly refer to as nanotechnology; Time called it the “nanotechnologist’s bible.”

Drexler’s visionary ideas received considerable attention among the techno-literati in the San Francisco Bay Area, particularly in Silicon Valley communities immersed in computer hardware, software, and biotechnology. Cybernetics principles, artificial intelligence, biotechnological analogies, and computer control all figured prominently in the Drexlerian view of nanotechnology. Why were his ideas so popular among software coders and computer engineers? One explanation is that Drexler’s predictions about nanotechnology seemed to follow the success California’s computer and biotechnology industries had already enjoyed. In this framework, technologies improved continuously at a rate dictated by Moore’s law and, every so often, a new idea (nanotechnology, perhaps) would appear to shatter prevailing technological paradigms.

However, the publicity and popularization of his ideas, compounded by the fact that Drexler wasn’t doing traditional laboratory research, made him a controversial figure. In the late 1990s, when mainstream scientists and policymakers began to assemble the framework for a national investment in nanotechnology, they largely ignored Drexler’s intellectual and popularizing contributions. In fact, they actively worked to marginalize Drexlerian ideas and to demarcate their own plans for nanotechnology from more radical visions.

The “establishment’s” push for nanotech came at an important time for many American scientists. In the late 1980s and throughout the 1990s, economic competitiveness replaced the twilight military struggle of the Cold War, and science advocates could no longer claim national defense as the prime rationale for funding basic research. Smalley and other mainstream advocates of nanotechnology proposed it at a propitious time—between the end of the Cold War and a renewed preoccupation with national security after Sept. 11, 2001—when lawmakers were trying to reshape national science policy.

Their policy push happened just as the larger public’s perceptions about nanotechnology—was it Drexlerian nanobots or a more incremental blend of physics and chemistry?—were still in a nascent state. Smalley and some policymakers had fears that negative perceptions might impede their plans for a national research initiative. This concern was heightened when, in April 2000, Wired magazine published Bill Joy’s article “Why the Future Doesn’t Need Us.” Joy, one of the co-founders of Sun Microsystems, warned about future technological dystopias. He singled out nanotechnology—especially the Drexlerian visions of autonomous and self-replicating nano-assemblers—as a potential threat to humanity. Two years later, novelist Michael Crichton published his best-selling techno-thriller Prey. Central to its plot was the deliberate release of autonomous, self-replicating nanobots. Crichton’s book hit every button that might stoke public alarm about nanotechnology: a greedy, high-tech firm; lack of government regulation; new technologies turned into military applications. Crichton even provided his readers with an epigraph from Drexler and a multipage bibliography that listed Drexler but neglected “traditional” chemists like Smalley.

Ultimately, the battle between Drexler’s and Smalley’s versions of nanotechnology—a conflict that took place primarily in the pages of magazines, technical journals, and policy hearings—was hardly a fair one. On one side: an articulate and charismatic person regularly described as a futurist and popularizer, with a relatively small number of technical publications, and no major funding or institutional affiliation. On the other side: an articulate and charismatic Nobel laureate who had secured millions of dollars in grants and authored hundreds of peer-reviewed articles.

Their clashing viewpoints were about making definitions and marking several boundaries: present-day science versus exploratory engineering; whether the point of view of chemistry or computer science was more representative of the nanoscale world; and, finally, who had the authority to speak on behalf of “nanotechnology.”

The denouement took place on a cold, sunny afternoon in early December 2003, when George W. Bush signed the 21st Century Nanotechnology Research and Development Act into law. Richard Smalley was there, looking over the president’s shoulder. But Eric Drexler, once dubbed the “father of nanotechnology” and the person who had popularized the word in the first place, was nowhere in sight.

Emerging technologies exist, at least in part, in the subjunctive realm of the future and the possible. The clash over what nanotechnology is (and who should speak for it) reminds us that the future is not a neutral space that we, either as individuals or as a society, move into. Rather, the future is a contested arena of speculation where diverse interests meet, debate, argue, and compromise. The future exists as an unstable entity, which different individuals and groups vie to construct and claim through their writings, their designs, and their activities while they marginalize alternative futures.

By the time Barack Obama leaves the Oval Office, the U.S. government will have spent close to $25 billion on nano-related research. Whether future generations will look at predictions about nanotechnology’s ability to reshape the world with the same bemusement that the phrase “energy too cheap to meter” draws remains to be seen. Nanotechnology remains as much a futuristic vision as an active area of real-life research and development. With the inevitable disappointments that almost always accompany emerging technologies, one may wonder why they remain so attractive to scientists, lawmakers, and the public. One answer is that visions of technological utopias, despite their dubious claims to success and the darker visions that accompany them, still sell and compel.

This article is part of the nanotechnology installment of Futurography, a series in which Future Tense introduces readers to the technologies that will define tomorrow. Each month, we’ll choose a new technology and break it down. Future Tense is a collaboration among Arizona State University, New America, and Slate.