It seems Facebook’s human news editors weren’t quite as expendable as the company thought.

On Monday, the social network’s latest move to automate its “Trending” news section backfired when it promoted a false story by a dubious right-wing propaganda site. The story, which claimed that Fox News had fired anchor Megyn Kelly for being a “traitor,” racked up thousands of Facebook shares and was likely viewed by millions before Facebook removed it for inaccuracy.

The blunder came just three days after Facebook fired the entire New York–based team of contractors that had been curating and editing the trending news section, as Quartz first reported on Friday and Slate has confirmed. That same day, Facebook announced an “update” to its trending section—a feature that highlights news topics popular on the site—that would make it “more automated.”

Facebook’s move away from human editors was supposed to extinguish the (farcically overblown) controversy over allegations of liberal bias in the trending news section. But in its haste to mollify conservatives, the company appears to have rolled out a new product that members of its own trending news team viewed as seriously flawed.

Three of the trending team members who were recently fired told Slate they understood from the start that Facebook’s ultimate goal was to automate the process of selecting stories for the trending news section. Their team was clearly a stopgap. But all three said independently that they were shocked to have been let go so soon, because the software that was meant to supplant them was nowhere near ready. “It’s half-baked quiche,” one told me.

Before we poke and prod that quiche, it’s worth clearing up a popular misunderstanding. Facebook has not entirely eliminated humans from its trending news product. Rather, the company replaced the New York–based team of contractors, most of whom were professional journalists, with a new team of overseers. Apparently it was this new team that failed to realize the Kelly story was bogus when Facebook’s trending algorithm suggested it. Here’s how a company spokeswoman explained the mishap to me Monday afternoon:

“The Trending review team accepted this topic over the weekend. Based on their review guidelines, the topic met the conditions for acceptance at the time because there was a sufficient number of relevant articles and posts. On re-review, the topic was deemed as inaccurate and does no longer appear in trending. We’re working to make our detection of hoax and satirical stories more accurate as part of our continued effort to make the product better.”

So: Blame the people, not the algorithm, which is apparently the same one Facebook was using before it fired the original trending team. Who are these new gatekeepers, and why can’t they tell the difference between a reliable news source and Endingthefed.com, the publisher of the Kelly piece? Facebook wouldn’t say, but it offered the following statement: “In this new version of Trending we no longer need to draft topic descriptions or summaries, and as a result we are shifting to a team with an emphasis on operations and technical skillsets, which helps us better support the new direction of the product.”

That helps clarify the blog post Facebook published Friday, in which it explained the move to simplify its trending section as part of a push to scale it globally and personalize it to each user. “This is something we always hoped to do but we are making these changes sooner given the feedback we got from the Facebook community earlier this year,” the company said.

That all made sense to the three former trending news contractors who spoke with Slate. (They spoke separately and on condition of anonymity, citing a nondisclosure agreement, but they agreed on multiple key points and details.) The former contractors said they weren’t told much about their role or the future of the product they were working on, but the companies that hired them—one an Indiana-based consultancy called BCforward, the other a Texas firm called MMC—did indicate their jobs were not permanent. They also understood that the internal software that identified topics for trending news was meant to improve over time, so it could eventually take on more of the work itself.

The strange thing, they told me, was that the algorithm didn’t seem to be getting much better at selecting relevant stories or reliable news sources. “I didn’t notice a change at all,” said one, who had worked on the team for close to a year. The system was constantly being refined, the former contractor added, by Facebook engineers with whom the trending contractors had no direct contact. But the improvements focused on the content management system and the curation guidelines the humans worked with. The feed of trending stories surfaced by the algorithm, meanwhile, was “not ready for human consumption—you really needed someone to sift through the junk.”

The second former contractor, who joined the team more recently, actually liked the idea of helping to train software to curate a personalized feed of trending news stories for readers around the world. “When I entered into it, I thought, ‘Well, the algorithm’s basic right now, so that’s not going to be [autonomous] for a couple years.’ The volume of topics we would get, it would be hundreds and hundreds. It was just this raw feed,” full of clickbait headlines and topics that bore no relation to actual news stories. The contractor estimated that, for every topic surfaced by the algorithm that the team accepted and published, there were “four or five” that the curators rejected as spurious.

The third contractor, who agreed that the algorithm remained sorely in need of human editing and fact-checking, estimated that out of every 50 topics it suggested, about 20 corresponded to real, verifiable news events. But when the news was real, the top sources suggested by the algorithm often were not credible news outlets.

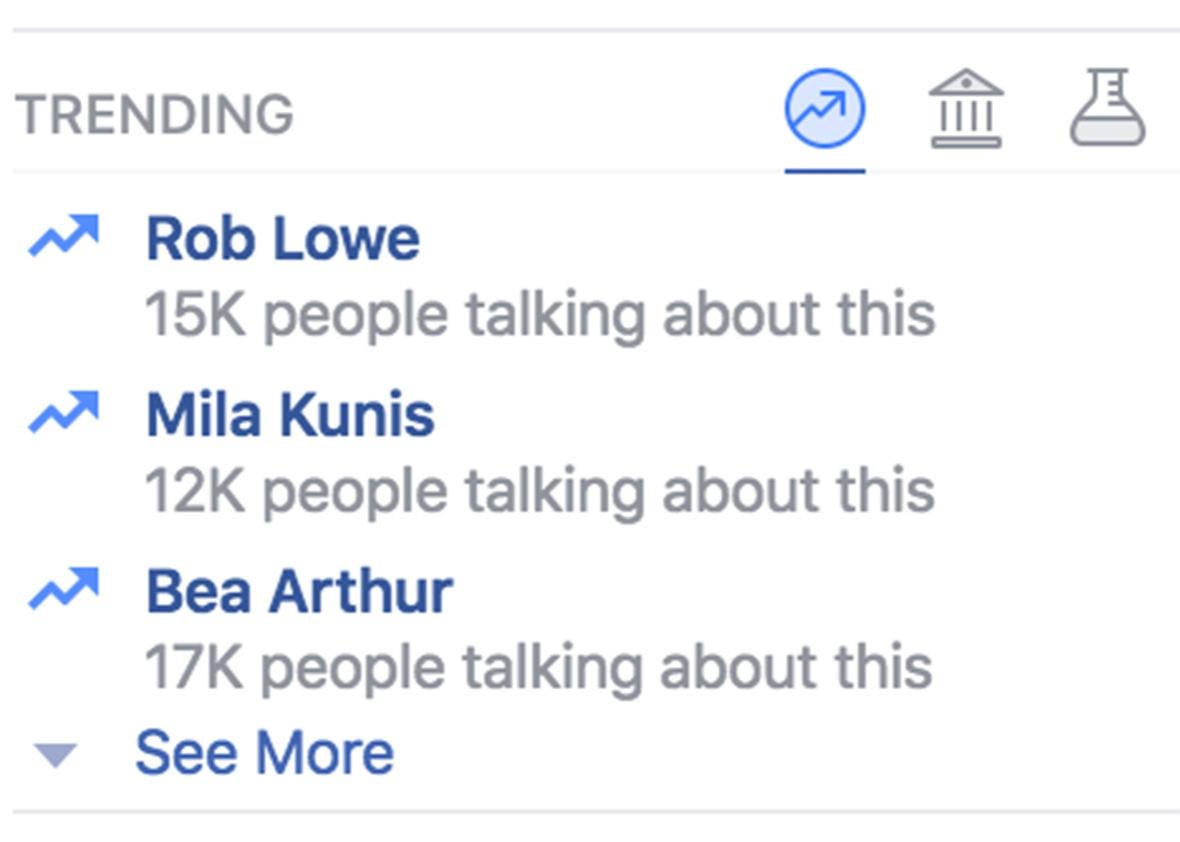

The contractors’ perception that their jobs were secure, at least for the medium term, was reinforced when Facebook recently began testing a new trending news feature—a stripped-down version that replaced summaries of each topic with the number of Facebook users talking about it. This new version, two contractors believed, gave the human curators a greatly diminished role in story and source selection. Said one: “You’ll get Endingthefed.com as your news source (suggested by the algorithm), and you won’t be able to go out and say, ‘Oh, there’s a CNN source, or there’s a Fox News source, let’s use that instead.’ You just have a binary choice to approve it or not.”

The results were not pretty. “They were running these tests with subsets of users, and the feedback they got internally was overwhelmingly negative. People would say, ‘I don’t understand why I’m looking at this. I don’t see the context anymore.’ There were spelling mistakes in the headlines. And the number of people talking about a topic would just be wildly off.” The negative feedback came from both Facebook employees participating in internal tests and external Facebook users randomly selected for small public tests.

The contractor assumed Facebook’s engineers and product managers would go back to the drawing board. Instead, on Friday, the company dumped the journalists and released the new, poorly reviewed version of trending news to the public.

Why was Facebook so eager to make this move? The company may well have deemed journalists more trouble than they’re worth after several of them set off a firestorm by criticizing the product in the press. Others complained about the “toxic” working conditions or dished dirt on Twitter after being let go. Journalists are a cantankerous lot, and in many ways a poor fit for Silicon Valley tech companies like Facebook that thrive on opacity and cultivate the perception of neutrality.

But Facebook appears to have thrown out the babies and kept the bathwater. What’s left of the trending section, even after the removal of the Kelly story, looks a lot like the context-free, clickbait-y mess the contractor described sifting through each day. “You click around, and it’s a garbage fire,” one said of the new version.

Ironically, the decline in quality of the trending section comes at the same time that Facebook is touting values such as authenticity and accuracy in its news feed, where it continues to fight its never-ending battle against clickbait and preach the gospel of “high-quality” news content.

No doubt Facebook cares about the quality of the stories it presents to its users, whose loyalty to the site is the backbone of its multi-hundred-billion-dollar business. But it also insists it won’t become a media company, despite plenty of evidence that it already is. That’s because being a media company means making judgments you have to defend. It also means, as Facebook is surely aware, being deeply unpopular.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.