Apple is taking a stand. It’s a strong stand, and an inescapably political one. It’s standing up to the FBI, a federal magistrate judge, and, by extension, the United States government.

Apple’s stand is this: Citizens, and iPhone owners in particular, have a legitimate interest in keeping the data on their phones private and secure—even those who are suspected of heinous crimes. And Apple has a right to provide citizens the tools to do that. The government, meanwhile, does not have the right to compel Apple to weaken its own security tools—even if it has a search warrant and a court order demanding just that.

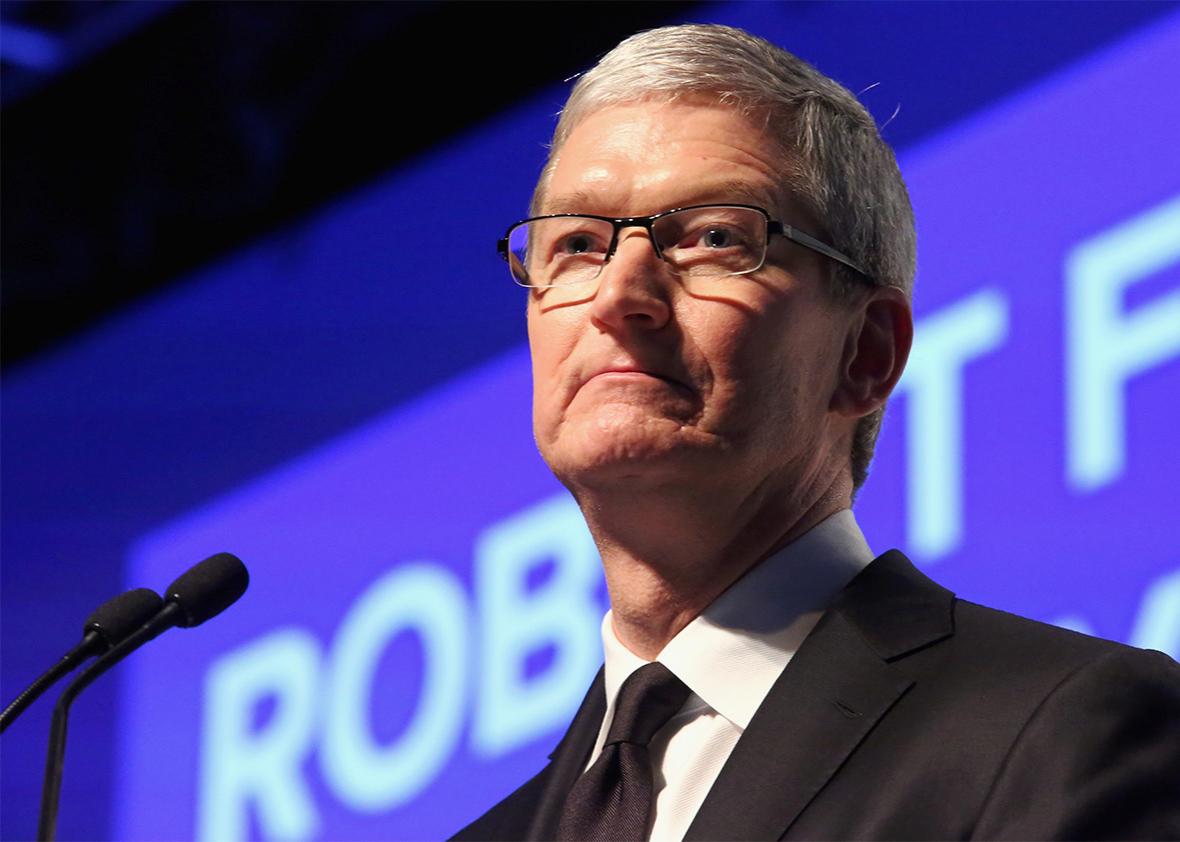

The specific case at hand isn’t quite as simple as all that. There are technical nuances involved in the FBI’s request that Apple modify a San Bernardino shooting suspect’s iPhone software to make it easier for investigators to break into his phone and decrypt the data it contains. There is also an important dispute over semantics: Apple claims that what the magistrate is asking for is tantamount to a backdoor—a loophole built into a software program that allows the government to circumvent its security measures. Backdoors are often criticized by security experts on the grounds that they make programs more vulnerable to criminal hackers as well. This is the thrust of Apple CEO Tim Cook’s stated objection to the court order. The Department of Justice counters that what’s at stake is not a backdoor—just access to a single device. (Ben Thompson’s Stratechery newsletter has a clear explanation of those nuances that is well worth your time.)

Technical details aside, however, Apple’s overarching argument—laid out by Cook in a passionate open letter to the company’s customers on Tuesday night—is big, bold, and philosophical, and it sets Apple up to carry what might seem an unlikely banner for a Silicon Valley tech giant: the banner of citizens’ right to protect their own data. Cook writes:

> While we believe the FBI’s intentions are good, it would be wrong for the government to force us to build a backdoor into our products. And ultimately, we fear that this demand would undermine the very freedoms and liberty our government is meant to protect.

Telling the government it is wrong, and rooting its argument in a discussion of freedoms and government responsibility, is a risky position for Apple to take. It puts the company on the side of such divisive entities as the ACLU, the Electronic Frontier Foundation, and Rand Paul. And it pits Apple against some formidable opponents, not least of which is likely to be public opinion. (Donald Trump, for one, wasted no time making political hay out of Apple’s stance, raving, “Who do they think they are?”) Yes, Apple is going to take a lot of heat for obstructing the investigation of a man who, along with his wife, slaughtered 14 innocent, defenseless co-workers in an act that has been widely described as terrorism.

Giant, multinational corporations are usually loath to take such a stand. They tend to see little to gain and much to lose from championing political causes—and still more to lose when those causes are likely to prove unpopular. So why is Apple doing it?

I see two good reasons. First, CEO Tim Cook seems to actually believe in this cause. It has wide-ranging implications for how much trust we can put in our technology, and it could use an ally like Apple. Second, it’s a cause that could benefit Apple’s business.

Apple, as it happens, is locked in an epic business battle with Google, Facebook, and a small number of other tech behemoths. At stake is who makes the devices and the software that serve as our primary gateway to the digital world. The companies that win this battle stand to dominate the consumer technology industry for years, perhaps decades, to come.

Microsoft won this battle in the 1980s and ’90s. So far in the 21st century, Apple and Google (now Alphabet) have been on top. But their position is always tenuous. One could suddenly pull ahead of the other, as Alphabet recently reminded us when it briefly surpassed Apple as the world’s most valuable company by market capitalization. Or they could both be eclipsed by a company like Facebook or Amazon, a resurgent Microsoft, an Asian rival, or some as-yet-undreamt-of startup. The competition is intense.

Apple has been winning on the strength of its expensive mobile computing devices, which have been conveniently subsidized in the United States by the big wireless carriers. But its business model, which hinges on consumers paying a premium for high-end products, makes it uniquely vulnerable among its competitive set. Businesses like Google and Facebook don’t rely on consumers to buy their products. Their main consumer-facing products are, generally speaking, free. Their model is to lure as many people as possible onto their respective platforms, then sell those people’s attention, demographic data, and behavioral profiles to advertisers.

“If you aren’t paying for the product, you are the product,” goes a shopworn cliché that is often applied to Internet services. It’s misleading in many ways—far too facile to capture the complex relationship between, say, Facebook and its users. Nonetheless, it’s a convenient shorthand for understanding why Apple is uniquely positioned among tech companies to cloak itself in the mantle of consumer privacy—and why it might find it prudent, at this juncture, to do so.

(Another quick note on semantics: Cook focused his letter on security, not privacy, and emphasized the technical and pragmatic drawbacks of backdoors, namely that they do more to undermine security than they do to help authorities maintain it. But that debate is inextricable from the debate over citizens’ right to keep their data private, and Cook’s rhetoric about freedom and liberty makes it clear he recognizes this.)

Apple’s software collects data on its users every bit as voraciously as Google, Facebook, and Amazon do. For instance, if you have an iPhone and you haven’t turned off location tracking, your phone has kept a running record, not only of who you’ve called, texted, and emailed, and what you’ve said, but also of where you are at all times. But whereas Google and Facebook package that sort of data into products to sell to advertisers, Apple generally doesn’t. Not that it hasn’t tried, in some relatively desultory ways—it just hasn’t succeeded. The upshot is that Cook can take to the privacy soapbox without looking like the hypocrite that Larry Page or Mark Zuckerberg would.

So put yourself in Cook’s position for a moment. You’re running a company that is on the brink of being dethroned as the world’s most valuable by its most bitter rival. Your success hinges on continuing to sell at a huge profit a device that locks customers into a lucrative software ecosystem of your own devising. Said rival—and a host of other rivals, for that matter—are hawking their own lucrative software ecosystems, either for free or licensed to hardware producers at below cost, in an effort to undermine yours. You live in a state of constant anxiety that your company will lose its edge. But you perceive that your rivals’ model carries a vulnerability of its own—a potential conflict of interest between the sanctity of users’ private data and advertisers’ thirst for that data.

Now say you see an opportunity to differentiate your company as the one that cares so much about its users’ privacy and security that it’s willing to go to great lengths to defend it. As it happens, you also personally believe that defending users’ privacy and security is the right thing to do. It’s not a hard choice, is it?

Cook began to reposition Apple as a defender of consumers’ interest as early as 2013, when the company was being pilloried alongside its rivals for allegedly standing quietly aside while government spooks mined their users’ data en masse. By 2014, when Apple released iOS 8, it was touting industry-leading encryption as a standard feature: encryption so strong that Apple could boast that it couldn’t hand over your data to authorities even if it wanted to.

The move was widely hailed by privacy advocates, but it didn’t seem to register as strongly with the public at large, perhaps because it sounded too abstract and technical. It did, however, set the stage for future clashes between Apple and law enforcement—clashes like the one that is making front-page news this week.

The case at hand is complicated by the fact that the suspect’s iPhone was not the latest model with the strongest security. It’s running iOS 8, which comes with strong encryption, but the device itself is an iPhone 5C, an older, less expensive model. As Dan Guido explains in an authoritative Trail of Bits post, the 5C lacks a key hardware feature that makes newer iPhones essentially impossible for even Apple to unlock. If it were an iPhone 6, we wouldn’t be having this particular debate.

It’s for this reason that Thompson, in his incisive Stratechery post, worries that Cook chose the wrong battle:

> Would it perhaps be better to cooperate in this case secure in the knowledge that the loophole the FBI is exploiting (the software-based security measures) has already been closed, and then save the rhetorical gun powder for the inevitable request to insert the sort of narrow backdoor into the disk encryption itself I just described?

As it is, Thompson writes, “the PR optics could not possibly be worse for Apple.”

That’s where Thompson is wrong. Sure, if Apple’s goal were to avoid controversy, then it would have been better off quietly complying with the FBI and the federal magistrate. Likewise, if Apple’s goal were merely to defend its right to protect users’ data by any means necessary, then it might have been better off saving its bullets for a fight over true backdoors. At that point, Google and others might have even joined the fray on Apple’s side.

But a controversy can help Apple differentiate its products on privacy grounds. The more heinous the crimes of the iPhone user that Apple is protecting, the more it underscores the inviolability of the company’s commitment to privacy and security. Viewed in that light, Google’s silence on Wednesday is the best outcome Apple could have hoped for.

You may think that this is an overly cynical reading of Cook’s evidently genuine defense of Apple’s right to provide its users with ironclad security. I don’t see it that way. I think Cook believes and means what he’s saying. I also think he’s fortunate to run a company whose business interests, on this particular issue, align with his convictions. Because the next time privacy activists hold a big rally, they’re going to do it in front of their local Apple Store.