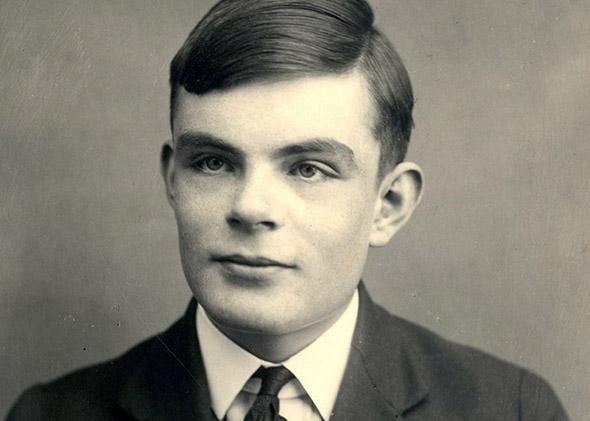

In the Alan Turing biopic The Imitation Game, technology takes down one of history’s most brutal foes: the Nazis. The film highlights the role of computing in core aspects of strategy and geopolitics. Turing and other codebreakers played a pivotal role in deciding the Battle of the Atlantic, going against the presumption that German naval communications were unbreakable.

But breaking WWII codes may not be Turing’s only contribution to security and geopolitics. Today, some suggest that modern-day realizations of Turing’s theories about artificial intelligence could be just as pivotal in deciding the fate of 21st-century nations. Political scientist Michael Horowitz has already posited a “robotics gap” akin to the Cold War “missile gap.” The Center for a New American Security’s Paul Scharre has defined robot swarms as a future military technology regime akin to the invention of precision-guided weapons, which many believe was key to deciding the military component of the late Cold War. (Full disclosure: I consulted on a recent report of Scharre’s.)

Why does this matter for geopolitics, national power, and strategy? Technologies from the railroad to the nuclear bomb have had powerful impacts on the international system and have helped create ideas about how states project power and authority within it. Now machine intelligence, some claim, will create a new form of “meta-geopolitics” that will similarly reshape notions of national power. These arguments play into larger anxieties that America is forfeiting its technological edge, with dire consequences for both military and economic elements of national power.

However, those who seek to divine the implications of artificial intelligence for military power and geopolitics should take heed of 19th-century seapower theorist Alfred Thayer Mahan’s analysis of navies and the destiny of nations. Mahan wrote on many topics, but some of his more famous works deal with the impact of new technological, economic, and political changes (the rise of globalization) as well as the use of history as a tool for putting these changes and their potential into context. One overarching theme of his work is the inherent uncertainty of what will or won’t be disruptive in international security. This has particular relevance for discussions of A.I. and security.

An age-old purpose of a naval fleet, for example, is to starve an opponent of resources via blockade. Those capable of doing so command power over their geopolitical adversaries. But to some observers, emerging technologies such as the torpedo and the submarine suggested that this mission might be obsolete: a navy trying to enforce a blockade, they reasoned, would find itself vulnerable to attack from underwater. Mahan, in contrast, distilled from history the blockade’s importance and persistence and suggested that it would be carried out from greater distances, not thrown overboard altogether.

So what happened? Not only did the supposed rise of the torpedo- and sub-enabled “small and many” fail to come about, but blockades are also still discussed as a serious strategic option for pressing international security dilemmas. While it may seem that A.I. has little in common with subs and torpedoes, the example illustrates that the strategic impact of new technologies is often difficult to interpret. History matters when debating whether a given new technology will revolutionize security affairs as much as nuclear weapons did or will have a more subtle and subdued impact. All of these considerations go to the heart of today’s growing number of debates about A.I., military affairs, and geopolitics.

Artificial intelligence certainly has a history. But it’s a young discipline. Though some of the machines Turing messed about with seem primitive today (compare Turing’s 1950 chess program with Deep Blue), they are still very recent in comparison to the grand sweep of technological history as a whole. However, that does not mean that history cannot be useful for those seeking to divine public policy impacts—especially those concerning security and strategy. Specifically, we can learn a lot from the last time that questions of A.I. captured the attention of those concerned with strategy and power, not just computer science and philosophy. In the 1980s, Japan and America vied to use A.I. to capture geopolitical advantage.

The artificial intelligence landscape of the 1980s was defined by an enthusiastic race toward so-called fifth-generation computing. With a combination of high-end hardware and advanced A.I. logical inference, fifth-generation computing promised a revolutionary new paradigm built around intelligent processing of human knowledge. Edward Feigenbaum and Pamela McCorduck’s 1983 book The Fifth Generation, which argued that knowledge-based computing technologies would lead to a new economy, mirrors today’s popular science potboilers about a new age of intelligent machines. More ominously, security thinkers warned (as they do today) about the impact of artificial intelligence on war, peace, and power. In The Automated Battlefield, a largely forgotten 1980s analysis, British nuclear scientist Frank Barnaby warned of a future battlefield more or less exclusively populated by combat and surveillance machines.

States took the potential impact of artificial intelligence on national power very seriously. Japan invested heavily in fifth-generation computing, which was a centerpiece of its larger attempt to become a geopolitical giant. Japan’s attempt to use high-end A.I. computers to leapfrog ahead of its rivals provoked widespread panic. Artificial intelligence pioneers like Feigenbaum warned that allowing Japan to monopolize the knowledge economy to come would be a fatal American mistake. Failure to compete with the Japanese, many fretted, would lead to Japan overtaking Silicon Valley.

Yet while Japan focused on the economic component of power, the United States mainly chose to emphasize A.I.’s potential role in the Cold War military competition with the Soviet Union. Advanced American military technologies like the Strategic Defense Initiative could be enabled by A.I. systems. Autonomous land vehicles, battle managers for aircraft carriers, and intelligent advisers for fighter pilots were envisioned. As historians Alex Roland and Philip Shiman detail, DARPA’s Strategic Computing Initiative was a comprehensive attempt, from computer chip to A.I. program, to claim “strong” artificial intelligence for Uncle Sam. Roland and Shiman rightly note that SCI was not just a project to connect the various components of machine intelligence; it was also about connecting scientists, organizations, and users on a monumental scale.

But neither effort lived up to expectations. Despite enormous government investment, Japan failed to build commercially viable applications of its vaunted fifth-generation computing paradigm and thus was forced to abandon hope of using these systems to vault ahead of Silicon Valley. The Japanese threat that Feigenbaum and McCorduck warned of never materialized. Instead, the Japanese found themselves giving away the very technologies they had sunk so much capital into. Why did Tokyo fail? One school of thought holds that the top-down development strategy behind Japanese industrial and technological planning was unlikely to ever come up with a convincing prototype. DARPA’s own race for intelligence ran into a similar mismatch between expectations and reality. What good was an autonomous vehicle baffled by even minor environmental changes? The systems produced, while sophisticated, were too narrow and brittle to live up to grand expectations.

To be fair, both countries’ initiatives yielded some tangible accomplishments. DARPA’s efforts in particular set the stage for more advanced military-technical systems in the decades to come. Yet those achievements were evolutionary, not revolutionary, in nature. So what of today’s predictions and innovations? The reasons why 1980s A.I. visions proved so disappointing may not hold for today’s research and applications. As Future Tense contributors Miles Brundage and Joanna Bryson argue, systems like IBM’s Watson represent an attempt to mix and match different aspects of intelligence in a unified architecture. Japanese dreams of a new, Tokyo-dominated knowledge economy, by contrast, were rooted in a far narrower vision and projected application of machine intelligence.

Security analysts, in assessing how artificial intelligence could affect security and geopolitics, must be strategically intelligent about the uncertainties regarding how the A.I. wave will play out. Just as Mahan observed the first stirrings of globalization in the 19th century, analysts today are correct that our era is one of turbulent technological and economic change. Perhaps the rise of intelligent systems will be to our world what the various economic and technological advances in seapower and trade were to Mahan’s. But pronouncements of disruptive change must be tempered with knowledge of how previous promises (and warnings) did not deliver. We must look not just forward to the future, but also backward to the not-too-distant past when thinking about the implications of artificial intelligence for geopolitics and security.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture.