This article arises from Future Tense, a collaboration of Slate, the New America Foundation, and Arizona State University. On May 21, Future Tense will host an event in Washington, D.C., called “How To Save America’s Knowledge Enterprise.” We’ll discuss how the United States approaches science and technology research, the role government should play in funding, and more. For more information and to RSVP, visit the New America Foundation’s website.

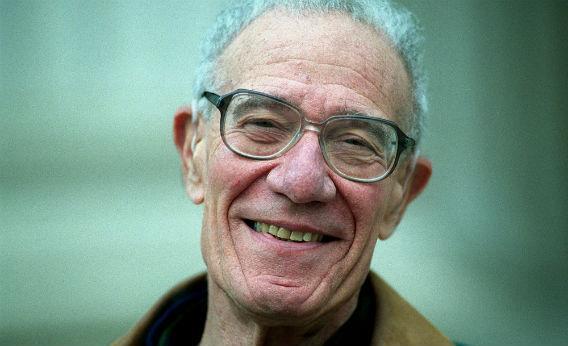

Robert Solow, winner of the 1987 Nobel Memorial Prize in Economic Sciences, is famous for, in the recent words of a high-ranking State Department official, “showing that technological innovation was responsible for over 80 percent of economic growth in the United States between 1909 and 1949.” Or as Frank Lichtenberg of Columbia University’s business school has written, “In his seminal 1956 paper, Robert Solow showed that technical progress is necessary for there to be sustained growth in output per hour worked.”

Those who invoke Solow rarely define “technical” or “technological progress,” but people generally take it to mean new gadgets.

However, Solow meant something much broader. On the first page of “Technical Change and the Aggregate Production Function,” the second of his two major papers, he wrote: “I am using the phrase ‘technical change’ as a shorthand expression for any kind of shift in the production function. Thus slowdowns, speedups, improvements in the education of the labor force, and all sorts of things will appear as ‘technical change.’ ” But his willfully inclusive definition tends to be forgotten.

Solow was constructing a simple mathematical model of how economic growth takes place. On one side was output. On the other side were capital and labor. Classical economists going back to Adam Smith and David Ricardo had defined the “production function” (how much stuff you got out of the economy) in terms of capital and labor, as well as land. Solow’s point was that other factors besides capital, labor, and land were important. But he knew his limitations: He wasn’t clear on what those factors were. This is why he defined “technical change” as any kind of *shift* (the italics are his) in the production function. He wasn’t proving that technology was important, as economists in recent years have taken to saying he did. All Solow was saying was that the sources of economic growth are poorly understood.
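In symbols, the setup can be sketched roughly as follows, using the form $Q = A(t)\,f(K, L)$ from Solow’s 1957 paper, where $Q$ is output, $K$ capital, $L$ labor, and $A(t)$ the catch-all shift term. Differentiating with respect to time and dividing by $Q$ gives the standard growth-accounting identity (a textbook rendering, not a quotation from Solow), with $w_K$ and $w_L$ the output elasticities, which equal the factor shares under competitive pricing:

$$
\frac{\dot Q}{Q} \;=\; \frac{\dot A}{A} \;+\; w_K\,\frac{\dot K}{K} \;+\; w_L\,\frac{\dot L}{L},
\qquad
w_K = \frac{\partial Q}{\partial K}\,\frac{K}{Q},\quad
w_L = \frac{\partial Q}{\partial L}\,\frac{L}{Q}.
$$

The term $\dot A / A$ is never observed directly. It is whatever growth is left over once the measured contributions of capital and labor are subtracted, which is why it absorbs “all sorts of things” and amounts to a measure of what the model cannot explain.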

The cautionary tale of Solow is emblematic of how economists get science and technology wrong. One economist creates a highly idealized mathematical model. The model’s creator is, as Solow was, honest about its limitations. But it quickly gets passed through the mill and acquires authority by means of citation. A few years after Solow’s paper came out, Kenneth Arrow, another Nobel Prize winner, would write that Solow proved the “overwhelming importance [of technological change in economic growth] relative to capital formation.” It’s a sort of idea laundering: Solow said that we don’t know where growth in economic output comes from and, for want of a better alternative, termed that missing information “technical change.” But his admission of ignorance morphed into a supposed proof that new technologies drive economic growth.

It would take a thick head indeed to believe that new technologies aren’t valuable to the economy. But determining how they are important, and how important they are, gets thorny. That’s because we don’t have access to a counterfactual world in which a given technology was never developed. Even if you buy the methodology behind, say, a McKinsey study of 13 large economies that says the Internet contributed “7 percent of growth from 1995 to 2009 and 11 percent from 2004 to 2009,” that doesn’t mean that in the absence of the Internet, the world’s economy would have grown by 7 percent or 11 percent less. We don’t, and can’t, know what the world’s economy would have looked like had packet-switched data networks not evolved as they did in the last 20 years. (And if the Internet hadn’t emerged as it did in the final few years of the last century, we probably wouldn’t have experienced the dotcom boom-and-bust cycle, which shaped the entire trajectory of the global economy over the last decade and a half.)

For another example of how rudimentary economists’ understanding of technology’s importance to the economy remains, look at The Great Stagnation, a best-selling book by the prominent economist Tyler Cowen that came out last year. Its central argument is that technological progress is slowing down, which will hurt economic growth.

But what is Cowen’s proxy for “technological progress”? He relies almost exclusively on a paper published in Technological Forecasting and Social Change by Jonathan Huebner, a physicist at the Naval Air Warfare Center. Huebner’s paper, “A Possible Declining Trend for Worldwide Innovation,” relied on a simple methodology. He scanned a book called The History of Science and Technology: A Browser’s Guide to the Great Discoveries, Inventions, and the People Who Made Them From the Dawn of Time to Today. It lists more than 7,000 “discoveries” by the year in which they were made. Huebner divided the number of discoveries made each decade since 1455 by the population of the world in that decade and made a graph. He then concluded that “worldwide innovation” might be declining.
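Huebner’s calculation, as described above, boils down to a ratio of counts to population. The sketch below is an illustration only: the function name `innovation_rate`, its inputs, and the toy numbers are hypothetical and are not Huebner’s data or code. It simply shows the arithmetic, and that every listed entry contributes exactly 1 to the numerator.

```python
from collections import Counter


def innovation_rate(discovery_years, population_by_decade):
    """Discoveries per decade per billion people (hypothetical sketch).

    discovery_years: iterable of years, one entry per listed "discovery"
    population_by_decade: dict mapping decade start year -> world population
    Both inputs are illustrative stand-ins, not Huebner's actual sources.
    """
    # Bucket each discovery into its decade; every entry counts as exactly 1,
    # whether it is the printing press or a minor gadget.
    counts = Counter((year // 10) * 10 for year in discovery_years)
    # Divide each decade's count by that decade's population (per billion).
    return {
        decade: counts[decade] * 1e9 / population
        for decade, population in sorted(population_by_decade.items())
    }


# Toy numbers, invented purely to show the arithmetic.
years = [1455, 1458, 1461, 1903, 1905, 1907, 1909]
population = {1450: 400_000_000, 1460: 410_000_000, 1900: 1_600_000_000}
rates = innovation_rate(years, population)
```

Because the numerator is a raw count, swapping a trivial entry for a world-changing one leaves the computed rate unchanged.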

So Cowen’s book, which David Brooks called “the most debated nonfiction book so far this year” a month after it came out, ends up resting on a cursory tally of discoveries from a high-school-level reference book. But one “discovery” is not interchangeable with another. How do you compare the introduction of bifocal eyeglasses to the “invention of the Earth Simulator, a Japanese supercomputer,” or Gutenberg’s movable type to the polymerase chain reaction (all real examples from the book)? If you’re Huebner, or Cowen citing him, you say each counts as “one discovery.”

In his Nobel acceptance speech, Solow warned against such oversimplification, lamenting that economists are “trying too hard, pushing too far, asking ever more refined questions of limited data, over-fitting our models and over-interpreting the results.” But then he conceded that such overinterpretation is “probably inevitable and not especially to be regretted.”

He is right about the likely inevitability of this push. But his complacency (“not especially to be regretted”) comes from a comfortable lectern in Sweden. I can’t quantify for you the damage that such overzealousness causes without falling into the very trap I’m pointing out. It’s reasonable to ask the questions economists ask. But when they claim to have measured things that they haven’t—the importance of technology to the economy, the “rate of technological change,” or the bang per federal research buck—and policymakers believe them, it leads to bad policy.

Take the case of STAR METRICS, an effort by the Obama administration to “document the outcomes of science investments to the public.” STAR METRICS uses measures such as patent counts and how often a scientific paper has been cited to gauge “the impact of federal science investment on scientific knowledge.” It’s easy to count how many times a paper has been cited. But make that count a matter of major bureaucratic importance, and researchers will start citing papers for the sake of citing them.

The notion that something is unquantifiable is alien to the mindset of the modern economist. Tell them it’s not quantifiable, and they will hear that it has not been quantified yet. This mistake matters, because economists and their business-school colleagues are very influential in the formulation of public policy. If economists rather than biologists decide what is good biology through supposedly quantitative, objective evaluations, you get worse biology. If economists decide what makes good physics, or good chemistry, you get worse physics, and worse chemistry. If you believe, as I do, that scientific progress, broadly speaking, is good for society, then making economists the arbiters of what is and isn’t useful ends up hurting everybody.

The greatest irony is that economists are ultimately no different from any other academics. Their case for giving more money to study the economics of science boils down to “Trust us, what we’re doing is useful.” That is precisely the argument they ding physicists and biologists for making.

So really, what we need is to study the economics of the economics of science. Of course, to know just how useful this is, we’ll need an economics of the economics of the economics of science. Where does it end?