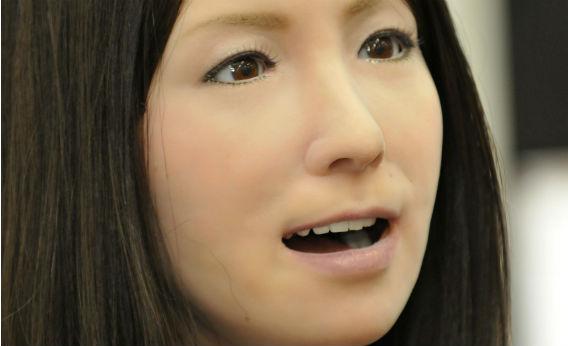

Sometimes, the creation is better than its creator. Robots today perform surgeries, shoot people, fly planes, drive cars, replace astronauts, baby-sit kids, build cars, fold laundry, have sex, and can even eat (but not human bodies, the manufacturer insists). They might not always do these tasks well, but they are improving rapidly. In exchange for such irresistible benefits, the Robotic Revolution also demands that we adapt to new risks and responsibilities.

This adaptation to new technology is nothing new. The Industrial Revolution brought great benefits and challenges too, from affordable consumer goods to manufacturing pollution. Likewise, we’re reaping the benefits of the Computer Revolution but also still sorting out ethics and policy arising from it, such as online privacy and intellectual property rights.

If you believe Bill Gates, who says that the robotics industry is now at the point the computer industry was 30 years ago, then we’ll soon be grappling with difficult questions about how to build robots into our society. Here are three key fronts we’ll need to defend.

Safety and Errors

As any fallible human would be, roboticists and computer scientists are challenged in creating a perfect piece of very complex software. Somewhere in the millions of lines of code, typically written by teams of programmers, errors and vulnerabilities are likely lurking. While this usually does not result in significant harm with, say, office applications, even a tiny software flaw in machinery, such as a car or a robot, could potentially result in fatalities.

For instance, in August 2010, the U.S. military lost control of a helicopter drone during a test flight. For more than 30 minutes and 23 miles, it veered toward Washington, D.C., violating airspace restrictions meant to protect the White House and other governmental assets. In October 2007, a semi-autonomous robotic cannon deployed by the South African army malfunctioned, killing nine “friendly” soldiers and wounding 14 others. Experts continue to worry about whether it is humanly possible to create software sophisticated enough for armed military robots to discriminate combatants from noncombatants, as well as threatening behavior from nonthreatening.

Setting aside the many other military-robot accidents and failures, human deaths can occur and have occurred in civilian society. The first killing of a human by a robot is widely believed to have happened in 1979, in an auto factory accident in the United States. It doesn’t take much to reasonably foresee that a mobile city-robot or autonomous car of the future, a heavy piece of machinery, could also be involved in a tragedy, such as accidentally running over a small child.

Hacking is an associated concern. What makes a robot useful (its strength, its ability to access and operate in difficult environments, its expendability, and so on) could also be turned against us. This issue will become more important as robots become networked and more indispensable to everyday life, as computers and smartphones are today. Already more than 50 nations have developed military robotics, including Iran and China; this past week, North Korea reported that it bought several (older) military aerial drones that the United States had previously sold to Syria.

Thus, some of the questions we will face in this area include:

- Is it even possible for us to create machine intelligence that can make nuanced distinctions, such as between a gun and an ice-cream cone pointed at it, or understand human speech that is often heavily based on context?

- What are the trade-offs between nonprogramming solutions for safety—e.g., weak actuators, soft robotic limbs or bodies, using only nonlethal weapons, or using robots in only specific situations, such as a “kill box” in which all humans are presumed to be enemy targets—and the limitations they create?

- How safe ought robots to be prior to their introduction into the marketplace or society?

- How would we balance the need to safeguard robots from running amok with the need to protect them from hacking or capture?

Law and Ethics

If a robot does make a mistake, it may be unclear who is responsible for any resulting harm. Product liability laws are largely untested in robotics and, anyway, continue to evolve in a direction that releases manufacturers from responsibility. With military robots, for instance, there is a list of characters throughout the supply chain who may be held accountable: the programmer, the manufacturer, the weapons legal-review team, the military procurement officer, the field commander, the robot’s handler, and even the president of the United States.

As robots become more autonomous, it may be plausible to assign responsibility to the robot itself, if it is able to exhibit enough of the features that typically define personhood. If this seems too far-fetched, consider that there is ongoing work in integrating computers and robotics with biological brains. A conscious human brain (and its body) presumably has human rights, and if we can replace parts of the brain with something else and not impair its critical functions, then we could continue those rights in something that is not fully human. We may come to a point where more than half of the brain or body is artificial, making the organism more robotic than human, which makes the issue of robot rights more plausible.

One natural way to think about minimizing risk of robotic harm is to program them to obey our laws or follow a code of ethics. Of course, this is much easier said than done, since laws can be too vague and context-sensitive for robots to understand, at least in the foreseeable future. Even the three (or four) laws of robotics in Isaac Asimov’s stories, as elegant and adequate as they first appear to be, fail to close many loopholes that result in harm.

Programming aside, the use of robots must also comply with existing law and ethics. And again, those rules and norms may be unclear or untested with respect to robots. For instance, the use of military robots may raise legal and ethical questions that we have yet to fully consider and that, in retrospect, may seem obviously unethical or unlawful.

Privacy is another legal concern here, most commonly related to spy drones and cyborg insects. But advancing biometrics capabilities and sensors can also empower robots to conduct intimate surveillance at a distance, such as detecting faces as well as hidden drugs and weapons on unaware targets. If linked to databases, these mechanical spies could run background checks on an individual’s driving, medical, banking, shopping, or other records to determine whether the person should be apprehended. Domestic robots, too, can be easily equipped with surveillance devices—as home security robots already are—that could be monitored or accessed by third parties.

Thus, some of the questions in this area include:

- Are there unique legal or moral hazards in designing machines that can autonomously kill people? Or should robots merely be considered tools, such as guns and computers, and regulated accordingly?

- Are we ethically allowed to give away our caretaking responsibility for our elderly and children to machines, which seem to be a poor substitute for human companionship (but perhaps better than no—or abusive—companionship)?

- Will robotic companionship for other purposes, such as drinking buddies, pets, or sex partners, be morally problematic?

- At what point should we consider a robot to be a “person,” eligible for rights and responsibilities? If that point is reached, will we need to emancipate our robot “slaves”?

- As they develop enhanced capacities, should cyborgs have a different legal status than ordinary humans? Consider that we adults assert authority over children on the grounds that we’re more capable.

- At what point does technology-mediated surveillance count as a “search,” which would generally require a judicial warrant?

Social Impact

How might society change with the robotics revolution? As with previous revolutions, one key concern is a loss of jobs. Factories replaced legions of workers who used to perform the same work by hand, as manual labor gave way to the faster, more efficient processes of automation. Internet ventures, such as Amazon.com, eBay, and even smaller e-tailers, are still edging out brick-and-mortar stores, which have much higher overhead and operating expenses, labor being one of the largest costs. Likewise, as potential replacements for humans that perform certain jobs better, faster, and so on, robots may displace human jobs, regardless of whether the workforce is growing or declining.

The standard response is that human workers, whether replaced by other humans or machines, would then be free to focus their energies where they can have a greater impact. To resist this change is to support inefficiency: For instance, when call-center jobs are outsourced to other nations where the pay is less, displaced workers (in theory) can migrate to “higher-value” jobs, whatever those may be. Further, the demand for robots itself creates additional jobs. Yet theory and efficiency provide little comfort for the human worker who needs an actual, paying job to feed her or his family, and cost benefits may be canceled by unintended effects, such as a worse customer experience with call-center representatives who don’t share the native language of their callers.

Some experts are further concerned about technology dependency. For example, if robots prove themselves to be better than humans in difficult surgeries, the resulting loss of those jobs may also mean the gradual loss of that medical skill or knowledge, to the extent that there would be fewer human practitioners. This is not the same worry as with labor and service robots that perform dull and dirty tasks, in that we care less about the loss of those skills; but there is a similar issue of becoming overly reliant on technology for basic work. This dependency seems to make society more fragile: For instance, the Y2K problem caused a significant amount of panic, since so many critical systems, such as air-traffic control and banking, were dependent on computers whose ability to correctly advance their internal clock to Jan. 1, 2000 (as opposed to resetting it to Jan. 1, 1900) was uncertain. Though the flaw was not as destructive as many feared, it demonstrated how vulnerable systems can be to a technological oversight.

Like the social networking and email capabilities of the Internet revolution, robotics may profoundly affect human relationships. Already, robots are starting to take care of some of our elderly and children. Yet there are not many studies on the effects of such care, especially in the long term. Some soldiers have emotionally bonded with the bomb-disposing PackBots that have saved their lives, sobbing when the robot meets its end. And robots are predicted to soon become our lovers and companions: They will always listen and never cheat on us. Given the lack of research studies in these areas, it is unclear whether psychological harm might arise from replacing human relationships with robotic ones.

Potential harm is not limited to humans; robots could also hurt the environment. In the computer industry, “e-waste” is a growing and urgent problem, given the disposal of heavy metals and toxic materials in the devices at the end of their product lifecycle. Robots, as embodied computers, will likely exacerbate the problem, as well as increase pressure on the rare-earth elements needed today to build computing devices and on the energy resources needed to power them. This also has geopolitical implications, given that a few nations, such as China, control most of those raw materials.

Thus, some of the questions in this area include:

- What is the predicted economic impact of robotics, all things considered? How do we estimate the expected costs and benefits?

- Are some jobs too important, or too dangerous, for machines to take over? What do we do with the workers displaced by robots?

- How do we mitigate disruption to a society dependent on robotics, if those robots were to become inoperable or corrupted, e.g., through an electromagnetic pulse or network virus?

- Is there a danger with emotional attachments to robots? Are we engaging in deception by creating anthropomorphized machines that may lead to such attachments, and is that bad?

- Is there anything essential in human companionship and relationships that robots cannot replace?

- What is the environmental impact of a much larger robotics industry than we have today?

- Could we possibly face any truly cataclysmic consequences from the widespread adoption of social robotics, and, if so, should a precautionary principle apply (that we should slow down or halt development until we can address these serious risks)?

“The Future Is Always Beginning Now”

These are only some of the questions in the emerging field of robot ethics. Beyond philosophy, many of the questions lead to the doorsteps of psychology, sociology, economics, politics, and other areas. And we have not even considered the more popular Terminator scenarios in which robots, through super artificial intelligence, subjugate humanity. These and other scenarios are highly speculative, yet they continually overshadow more urgent and plausible issues.

Yes, robotic technologies jump out of the pages of science fiction, and the ethical problems they raise seem too distant to consider, if not altogether unreal. But as Asimov foretold: “It is change, continuing change, inevitable change, that is the dominant factor in society today. No sensible decision can be made any longer without taking into account not only the world as it is, but the world as it will be. … This, in turn, means that our statesmen, our businessmen, our everyman must take on a science fictional way of thinking.”

Of course robots can deceive, play, kill, and work for us—they’re designed in our image. And the reflection they cast back triggers some soul-searching, forcing us to take a hard look at what we’re doing and where we’re going.

This essay is adapted from the new book Robot Ethics, released by MIT Press.