
Last week, I complained that Stanford’s machine learning class, which I’m taking online with thousands of others, is training me to be an actuary, not the robot overlord I aspire to. So far, the course has dealt primarily with problems in which we have a bunch of data (mostly housing prices) and our job is to find an algorithm that “fits” the data as well as possible. Are you bored yet? I was a little bored.

Most of what we had been doing was fitting lines to data. It was the sort of stuff that, as I said, I did in Mrs. Burke’s Algebra II class in high school. (Thanks for now following me on Twitter, Mrs. Burke!)

Mercifully, though, this week we shifted to a different kind of problem. No more predicting house prices: We are now teaching machines to predict one of two discrete outcomes. Is a tumor malignant, given its size? Will a student pass a class, based on incomplete grading information? These are the sorts of questions that computers can be “taught” to answer with great accuracy, when the job is done correctly.

I put “taught” in quotation marks because it’s still not entirely clear to me why we describe this process as teaching computers to learn, as opposed to simply solving problems with their great processing power. We’re only in Week 3, so I don’t expect this to be entirely obvious yet, but it already feels not so different from the way humans learn when all we have is a bunch of examples from which to formulate a theory: We use trial and error to home in on the correct answer. In some of the programming exercises, we’ve allowed the computer 1,500 attempts to find the best possible values for an algorithm’s parameters, making sure that it gets a little closer each time.
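Those 1,500 attempts are iterations of an optimizer. Here is a minimal sketch, assuming plain batch gradient descent on a squared-error cost for a one-variable line fit. The toy data, the learning rate `alpha`, and the function names are my own illustrative choices, not the course’s code. Recording the cost after every step makes it easy to confirm that each attempt really does get a little closer.

```python
def cost(theta0, theta1, xs, ys):
    """Squared-error cost J = (1 / 2m) * sum((theta0 + theta1 * x - y)^2)."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.05, iterations=1500):
    """Batch gradient descent for y ~ theta0 + theta1 * x.

    Returns the fitted parameters and the cost recorded after every step.
    """
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    history = []
    for _ in range(iterations):
        # Prediction errors under the current parameters.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Update both parameters together, using gradients from the same step.
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
        history.append(cost(theta0, theta1, xs, ys))
    return theta0, theta1, history


# Hypothetical toy data lying exactly on the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
theta0, theta1, history = gradient_descent(xs, ys)
print(f"theta0={theta0:.3f}  theta1={theta1:.3f}  final cost={history[-1]:.2e}")
```

If `alpha` is set too large, the cost can grow from one step to the next instead of shrinking, which is why watching the history is a useful sanity check.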

As it turns out, when your data fall into categories (pass/fail, malignant/benign, or even one of several discrete states), straight lines frequently don’t do much for you. You have to start getting into fancier algorithms, with advanced concepts like squaring a variable, to find a good way to predict future cases from the historical data we already have.
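To see why squaring a variable helps, here is a minimal sketch under stated assumptions: logistic regression (the method in the grade list at the end) trained by batch gradient descent on hypothetical one-dimensional data where the label is 1 whenever |x| > 1. With only x as a feature, the decision boundary is a single cutoff on x, which can’t split this data; adding x² lets the boundary become an interval. The data, learning rate, and function names are illustrative, not from the course.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow when |z| is large."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def score(theta, row):
    """Linear score theta . row; the predicted probability is sigmoid(score)."""
    return sum(t * f for t, f in zip(theta, row))


def train_logistic(features, labels, alpha=0.5, iterations=5000):
    """Batch gradient descent on the mean logistic (cross-entropy) cost."""
    m, n = len(features), len(features[0])
    theta = [0.0] * n
    for _ in range(iterations):
        errors = [sigmoid(score(theta, row)) - y for row, y in zip(features, labels)]
        # Every gradient uses the same errors, so the update is simultaneous.
        grads = [sum(e * row[j] for e, row in zip(errors, features)) / m for j in range(n)]
        theta = [t - alpha * g for t, g in zip(theta, grads)]
    return theta


def accuracy(theta, features, labels):
    """Fraction of points where the 0.5-probability cutoff matches the label."""
    hits = sum((sigmoid(score(theta, row)) >= 0.5) == (y == 1)
               for row, y in zip(features, labels))
    return hits / len(labels)


# Hypothetical data: label 1 when |x| > 1, so no single cutoff on x separates it.
xs = [-2.0, -1.5, -0.5, 0.0, 0.5, 1.5, 2.0]
labels = [1, 1, 0, 0, 0, 1, 1]

linear = [[1.0, x] for x in xs]            # intercept + x
squared = [[1.0, x, x * x] for x in xs]    # intercept + x + x^2

theta_lin = train_logistic(linear, labels)
theta_sq = train_logistic(squared, labels)
print("linear features accuracy:  ", accuracy(theta_lin, linear, labels))
print("with x^2 feature accuracy: ", accuracy(theta_sq, squared, labels))
```

The straight-line model stays below 100 percent however long it trains, because no single cutoff on x can separate this data; the model with the x² term classifies all seven points correctly.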

In the case of breast tumors, Stanford researchers have trained computers to take in a variety of information about a newly discovered tumor and predict, very precisely, whether it is malignant. At first, we thought about the problem only in terms of the tumor’s size, but in reality a tremendous number of factors go into any problem like this. The resulting algorithm, if it’s good, can estimate not only whether a tumor is malignant but also the probability that it is.

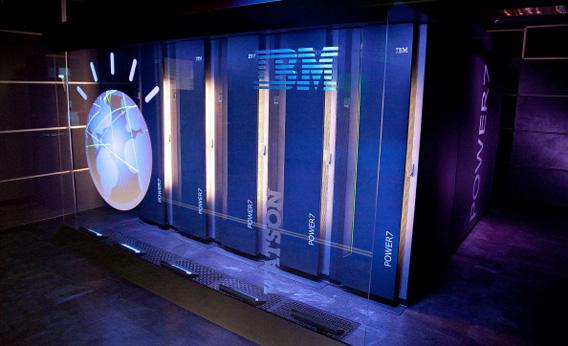
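In logistic regression, the model’s output is a number between 0 and 1 that is read as the estimated probability of the positive class (here, malignant), which is what lets the algorithm report how likely, not just whether. A minimal sketch, assuming the logistic hypothesis h(x) = 1 / (1 + e^(−θ·x)); the weights and the second measurement are invented for illustration and do not come from any trained model.

```python
import math


def predict_probability(theta, x):
    """Logistic hypothesis h(x) = 1 / (1 + exp(-theta . x)), read as P(malignant | x)."""
    z = sum(t * xi for t, xi in zip(theta, x))
    # Two algebraically equal forms, chosen by sign to avoid overflow in exp.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def classify(theta, x, threshold=0.5):
    """Turn the probability into a yes/no call; the cutoff is a choice, not a law."""
    p = predict_probability(theta, x)
    return p >= threshold, p


# Hypothetical parameters: intercept, tumor size (cm), and a made-up second measurement.
theta = [-6.0, 1.5, 0.8]

# Each feature vector starts with a constant 1 for the intercept.
for tumor in ([1.0, 1.0, 0.5], [1.0, 3.0, 2.0], [1.0, 5.0, 1.0]):
    flagged, p = classify(theta, tumor)
    print(f"features={tumor[1:]}  P(malignant)={p:.2f}  flagged={flagged}")
```

The 0.5 cutoff is only one option: a doctor who would rather not miss a malignancy might flag anything above a lower threshold, trading more false alarms for fewer misses.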

We want computers making these calculations so that humans (doctors, in this case) can make informed decisions about how to act, free of whatever bias or faulty intuition they may bring to the table. In the case of Watson, everyone’s favorite robotic Jeopardy! champion, the idea that algorithms can predict an outcome with a specific degree of certainty was one of the biggest advances that project made in artificial intelligence. Watson didn’t just have a guess. He knew how confident he was in that guess as well. In fact, this is exactly what Watson’s brain is now being repurposed to do: produce diagnostic information for physicians, complete with degrees of confidence in the diagnosis.

I am only three weeks into the discipline. But to me, this seems to be the great promise of learning machines: not that they will guide our lives, but that they will act as consiglieres in a world that operates on knowing the odds.

Grades:

Logistic regression: 4.5/5

Regularization (a way to keep a model with huge numbers of variables from overfitting the data): 5/5, but -20 percent for being late again.

Coding grade for linear regression: 114/100, thanks to some extra credit questions.
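For the regularization grade above: regularization is commonly done by adding a penalty on the size of the weights to the cost, which discourages a model with many variables from fitting noise. A minimal sketch, assuming an L2 (squared-weight) penalty on a logistic-regression cost, with the intercept left unpenalized as is conventional. λ is written `lam`, and the function names and numbers are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def softplus(a):
    """Stable log(1 + e^a), used to write the cross-entropy without log(0)."""
    return max(a, 0.0) + math.log1p(math.exp(-abs(a)))


def regularized_cost(theta, features, labels, lam):
    """Mean cross-entropy plus (lam / 2m) * sum of theta_j^2 for j >= 1.

    Each row of features is assumed to start with a constant 1 for the
    intercept, which is left out of the penalty.
    """
    m = len(labels)
    total = 0.0
    for row, y in zip(features, labels):
        s = sum(t * f for t, f in zip(theta, row))
        # -log(h) for y = 1 equals softplus(-s); -log(1 - h) for y = 0 equals softplus(s).
        total += softplus(-s) if y == 1 else softplus(s)
    penalty = lam / (2 * m) * sum(t * t for t in theta[1:])
    return total / m + penalty


def regularized_gradient(theta, features, labels, lam):
    """Gradient of regularized_cost; the penalty adds (lam / m) * theta_j for j >= 1."""
    m = len(labels)
    grad = [0.0] * len(theta)
    for row, y in zip(features, labels):
        error = sigmoid(sum(t * f for t, f in zip(theta, row))) - y
        for j, f in enumerate(row):
            grad[j] += error * f / m
    for j in range(1, len(theta)):
        grad[j] += lam / m * theta[j]
    return grad
```

A larger `lam` pulls every weight except the intercept toward zero on each gradient step, so the model relies less heavily on any one variable.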