Reddit has an image problem and a user problem. After years of idealistically celebrating the site, the media have, since the 2014 celebrity photo hack, frequently portrayed Reddit as a cesspool of hate in dire need of repair. Yet many of the site’s testy users are quick to rebel against any perceived intrusion on their autonomy and free speech. It appears impossible to address one problem without aggravating the other.

This was one immediate issue confronting Reddit’s new management last week following the resignation of interim CEO Ellen Pao. Pao’s replacement, Reddit co-founder Steve Huffman, sat for a fairly candid ask-me-anything chat on the site, which, given the circumstances, went shockingly well. Asked about /r/coontown, a subreddit forum that unrepentantly mocks black people and even celebrates their deaths, Huffman affirmed a commitment to the site’s free speech principles while stressing that they were not absolute: “I don’t want to ever ban content. Sometimes, however, I feel we have no choice because we want to protect reddit itself.” (His use of bold.) What Huffman chooses to do next could very well determine whether Reddit survives as both a business and a central node of Internet culture. If Reddit cannot keep its user base happy while pleasing its investors and reforming its image, it may be doomed to slow decline and obsolescence—or worse, a mass user exodus to a competitor, as happened with Reddit’s predecessor Digg after its management alienated its user base. How can Reddit be detoxed?

Rather than taking one side in this conflict, which has generated a dismal amount of noise and sanctimony in the last few months, I propose a technical solution to appease both sides. By carefully employing social stigma, the site can effectively quarantine its hate speech and its most hateful users without violating the free speech principles of its founders or alienating its user base. Reddit doesn’t need to ban hate outright to become a less hateful place.

To its credit, Reddit has taken some positive steps, but not enough. In the past the site has shut down subreddit forums that hosted illegal content, like /r/jailbait and /r/creepshots. And Huffman has signaled that he endorses the policy of nuking subreddits like /r/fatpeoplehate that incite harassment against particular people (harassment being defined by Pao as “Systematic and/or continued actions to torment or demean” and by Huffman as anything “that causes other individuals harm or to fear for their well-being”). Reddit’s ban on revenge porn should also stand.

But Reddit still has a hate speech problem, exemplified by racist subreddits like /r/coontown. In the absence of harassment or illegal activity, however, Reddit has so far hesitated to shut these forums down. It doesn’t need to ban such vile (but legal) speech. All it needs is a super-quarantine.

Hate speech on Reddit is vile, but contrary to recent alarmist headlines, it is not an epidemic. Most existing hate subreddits have dozens or hundreds of subscribers; /r/coontown has 17,500. By contrast, most default, or heavily promoted, subreddits have millions or tens of millions of subscribers and far more unregistered viewers. So we’re looking at a set of hateful users that makes up at most 1 percent (roughly 50,000 people) of Reddit’s 5 million registered active users. The problem is not their numbers but their virulence, along with their uncomfortable proximity to, and interactions with, everyone else on Reddit. Reddit needs to build a wall to seal off that 1 percent. Instead of policing their speech, Reddit just needs to classify it.

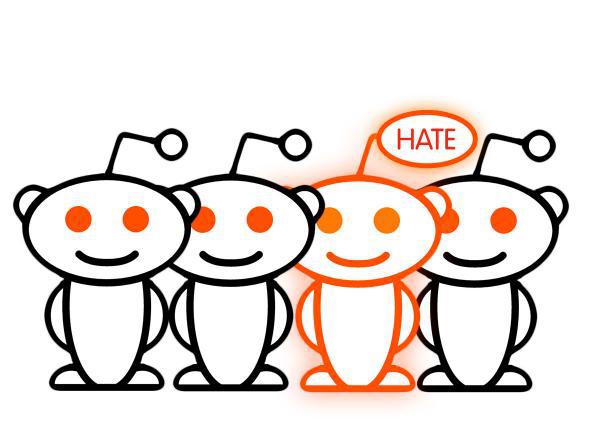

Reddit already classifies hateful subreddits as 18-plus, along with subreddits devoted to pornography and gore. What’s needed is another category, labeled unambiguously: “hate.” Subreddits that support, encourage, or fail to moderate hate speech will be tagged with the hate label, which will accompany them whenever they are mentioned on the site, either as a superscript title (“/r/coontownHATE”) or as part of the name itself (“/r/hate/coontown”). Moreover, users who moderate or post to these hate subreddits should have their own accounts tagged with “flair” markers that follow them wherever else they post. One pseudonymous /r/coontown moderator would show up as DylannStormRoofHATE in every subreddit he posted to, carrying the stigma across Reddit. (Hate mods would not be allowed to moderate nonhate subreddits.) If I were to post anything on /r/coontown (no plans to do so), no matter how innocuous, I too would be stigmatized with a hate marker that would follow me around on every other subreddit. Mere participation would be enough. This marker would be an Internet substitute for the original Greek concept of stigma, characterized by Erving Goffman as “bodily signs designed to expose something unusual and bad about the moral status of the signifier.” That’s exactly the goal.

Throughout Reddit, the volunteer moderators of each individual subreddit would have the option to ban hate users entirely, with the likely result that participation in any hate subreddit would automatically bar users from many civilized subreddits that want no association with them. This decision, however, would be left to individual subreddit moderators and their users; individual communities, not Reddit itself, would be ostracizing hate users. Given the choice, I suspect most subreddits, particularly the most popular, would choose to exclude them. Mods are not as obsessed with free speech as the press portrays them, and many already do a good job of keeping toxicity and hatred out of their subreddits. Moderator elfa82, who moderates /r/futurology and dozens of other subreddits, bluntly told me, “We don’t give a fuck what people think about censorship, if you’re an asshole we are gonna remove your comment.”

If Reddit were to implement a stigma system, it would be important to limit it to hate speech, so it’s worth pinning down what actually constitutes hate speech, since it’s not a legal term. I think Facebook’s current definition is reasonable: “Content that attacks people based on their actual or perceived race, ethnicity, national origin, religion, sex, gender, sexual orientation, disability or disease.” Judging from the lack of outrage at Facebook, it seems most people agree.

Facebook’s definition would include racist subreddits like /r/coontown, but it would not include distasteful or merely offensive subreddits. As sociologist Zeynep Tufekci told me, “Most of the cases that I’ve advocated banishing from Reddit are clear cases. I see no case for banning communities like [men’s rights group] TheRedPill, which seems like a group of men organized as a backlash to the success of feminism.” Facebook not only allows men’s rights groups, but even allows League of the South, Jihad Watch, and the New Black Panther Party, all labeled by the Southern Poverty Law Center as hate groups, alongside other pages like Fuck Armenia and the Holocaust Hoax. I see no reason for Reddit to be held to a higher standard than Facebook. As Tufekci says, classification of hate subreddits should be limited and difficult to contest. If you start lumping nonhate groups into the hate category, Reddit users will rebel, pushing back against the hate marker simply to protest its breadth. We should focus on the 1 percent of the truly hateful.

Reddit, the company, would have the responsibility of setting this policy and officially doling out the hate subreddit classifications, for which there will have to be an appeals process. Beyond that, power would devolve to the moderators. With this quarantine, Reddit could effectively silo off its truly antisocial content, preventing it from infecting the civilized part of the site. Until now, one issue has been the permeability between respectable and vile parts of Reddit, which makes it difficult to distinguish mere cranks from virulent bigots.

The volunteer moderators of the top subreddits already tightly police their communities with assistance from Reddit employees, and Reddit keeps inappropriate garbage from appearing on its front page. So Reddit’s supposedly laissez-faire stance on speech is more compromised than it appears, yet no mass exodus has transpired. While a significant minority of users will grouse over any further impingement, Reddit management can survive unpopularity as long as it keeps its mods happy, as I argued in my column last week. It’s important to note that Pao herself didn’t quit over a free speech controversy. Rather, Reddit’s volunteer moderators revolted over a lack of proper support and tools from Reddit the company, made manifest when Reddit fired its director of talent, Victoria Taylor, without bothering to notify the moderators who depended on her. While Pao received a great deal of sexist abuse from Reddit users, the abuse was not the cause of her leaving the company, as Pao herself has said. Reddit can minimize such abuse with better moderation tools and better employee response to harassment, not by trying to change the culture by fiat (which never works, as we’ve learned).

But Reddit’s hate problem is soluble, and it should be treated urgently, because small groups of terrible people can appear bigger than they are, congregating in ways that disproportionately affect the civilized human beings on the site. Without formally restricting speech, a super-quarantine can allow the larger and quieter majority of Reddit to clearly disassociate itself from these fringe elements. Then Reddit can better mirror our messy and imperfect liberal society, in which we are required to tolerate the speech of people whose views are truly odious, right before we consign it to the outer margins.

This article is part of Future Tense, a collaboration among Arizona State University, New America, and Slate. Future Tense explores the ways emerging technologies affect society, policy, and culture. To read more, visit the Future Tense blog and the Future Tense home page. You can also follow us on Twitter.