Last week at Reading University, 30 judges text-chatted with a bunch of humans and a bunch of computer programs pretending to be human, and the judges tried to figure out who was who. That is the setup behind the famous Turing test, posited by legendary computer scientist Alan Turing in 1950 as a measure of artificial intelligence, in which a computer program tries to convince an interlocutor, through free conversation on any subject whatsoever, that it is human. The organizers at Reading claim that one particular program, “Eugene Goostman,” has passed the Turing test by tricking 10 of the 30 judges into thinking it was human during five-minute conversations. On closer inspection, though, the first question to ask is whether computers are getting smarter or people are getting dumber.

Winning program Eugene Goostman was developed primarily by software engineer Vladimir Veselov, and presents itself as a 13-year-old Ukrainian boy. Neither the current version of the program nor the transcripts have been made available yet by the contest administrators. I did, however, obtain some transcripts from a 2012 contest in which an earlier version of Eugene persuaded several judges of its humanity.

At first blush, the thought of a computer masquerading as human is frightening. Has machine intelligence really progressed so much? Are we close to such programs usurping our own authority and taking over the world? No and no. The real question is: How did Eugene convince the judges? What about Eugene’s responses made Eugene appear human?

To answer this question, I will try to explain some of the guts of Eugene, based on my best guess as to how it operates. As you’ll see, Eugene is something of a Wizard of Oz-like figure. From the outside, the program may seem quite conversant, but it’s making use of psychological smoke and mirrors to play on people’s presuppositions about what is “human” and what is not. In the following transcript, a judge is conversing with Eugene, trying to determine its true nature. The judge is allowed to say anything whatsoever, so a smart judge should try to trip up a computer program and get it to react in a way that a human never would. But this judge eventually guessed that Eugene was a foreign female child (although Eugene never gives a name or gender in the transcript).

Judge: Hello, what is the weather like tomorrow?

Eugene: In my native town people says that meteorologists are like sappers: they make a mistake only once, but the difference is that sappers do that once in a lifetime and meteorologists - daily. Do you like weather today?

Eugene identified the word “weather” here and delivered a potted joke about “sappers” (combat engineers) and meteorologists. This is a line scripted wholesale by the program’s creator; nothing was invented on the fly. Unable to answer the question, Eugene follows the joke with a question of its own, trying to make the judge forget that his question went unanswered.

Judge: Why do birds suddenly appear?

Eugene: Just because 2 plus 2 is 5! By the way, what’s your occupation? I mean - could you tell me about your work?

Eugene responds to a “why” question with the potted response “2 plus 2 is 5”, which actually seems apropos in light of the judge’s non sequitur. Eugene quickly asks another question, phrased twice to make it appear less formal, more foreign, and more “human.”

Judge: Yes, I am a doctor. Are you healthy?

Eugene: Well, so tell me about your work. I think - doctor is a nice specialty!

Eugene is able to pluck “doctor” out of the “I am a doctor” sentence to use it in a response. It’s unable to answer “Are you healthy?” But Eugene has already demanded more follow-up from the judge.

Judge: It is ok, I get sick of sick people. How is your stomache feeling today? Is it upset maybe?

Eugene: I think you can’t smile at all. I bet you work in a funeral agency.

Judge: What do you work as?

Eugene: I am a scholar. I’m too young to make money.

Judge: I see, what school do you go to?

Eugene: I attend an ordinary school in Odessa, my native city. Its number is 28 if it makes any difference to you.

Judge: it doesn’t. What is your favourite subject?

Eugene: It’s hard to decide. Where do you came from, by the way? Could you tell me about the place where you live?

The judge might be wondering here if Eugene is indeed a computer program—but then Eugene manages to answer several questions in a row. Most likely, Eugene identified keywords such as “work” and “school” to deliver appropriate responses to those questions. Almost any question with “work” or “school” in it would have produced these responses, however. Eugene does not understand the last question about his favorite subject. Rather, the presence of “favourite” in a question triggers the generic response “It’s hard to decide.” (“What is your favourite food?” would probably also have produced “It’s hard to decide.”) Meanwhile, Eugene keeps trying to control the conversation to steer it toward prescripted interactions.
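Taking my guess about Eugene’s guts at face value, the keyword trick can be sketched in a few lines of Python. This is a hypothetical reconstruction, not Eugene’s actual code: the trigger table, its ordering, and the `canned_reply` function are all my own assumptions, with the replies lifted from the transcript.

```python
import re
from typing import Optional

# Hypothetical trigger table (keyword -> canned reply), checked in order.
# The pairings are guesses based on the 2012 transcript, not Eugene's script.
TRIGGERS = [
    ("favourite", "It's hard to decide."),
    ("school", "I attend an ordinary school in Odessa, my native city."),
    ("work", "I am a scholar. I'm too young to make money."),
    ("why", "Just because 2 plus 2 is 5!"),
]


def tokenize(text: str) -> set:
    """Lowercase the input and split it into bare words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def canned_reply(question: str) -> Optional[str]:
    """Return the first canned reply whose keyword appears as a whole word."""
    words = tokenize(question)
    for keyword, reply in TRIGGERS:
        if keyword in words:
            return reply
    return None  # no trigger: the caller must fall back to a deflection


print(canned_reply("What is your favourite food?"))  # It's hard to decide.
```

Because the lookup matches whole words exactly, any question containing “favourite” gets “It’s hard to decide,” whatever it is actually asking, and a misspelled word matches nothing at all, which is one plausible reading of what happens with “foottball” later on.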

Judge: I come from Manchester. Did you see the Jubilee?

Eugene: Could you invite me to Manchester? I would be so grateful:-)

Eugene locates the place name in the judge’s response and asks for an invitation. Eugene does not understand the question about the Jubilee and just ignores it.
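The Manchester reply looks like a canned template with a single slot in it. A minimal sketch, assuming the bot keeps a hand-written list of place names (the `KNOWN_PLACES` set and the `place_reply` function here are hypothetical):

```python
import re
from typing import Optional

# Hypothetical gazetteer of place names the bot recognizes.
KNOWN_PLACES = {"manchester", "london", "odessa", "leeds"}

# Canned template with one slot, modeled on the reply in the transcript.
INVITE_TEMPLATE = "Could you invite me to {place}? I would be so grateful:-)"


def place_reply(message: str) -> Optional[str]:
    """Fill the template with the first known place name in the message."""
    for word in re.findall(r"[A-Za-z]+", message):
        if word.lower() in KNOWN_PLACES:
            return INVITE_TEMPLATE.format(place=word)
    return None  # everything else in the message is simply ignored


print(place_reply("I come from Manchester. Did you see the Jubilee?"))
# Could you invite me to Manchester? I would be so grateful:-)
```

Nothing in this sketch looks at the rest of the message, which is how a question about the Jubilee can simply vanish.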

Judge: Did you see the jubliee?

Eugene: Try to guess! Actually, I don’t understand why you are interested. I know you are supposed to trick me.

Judge: Not really

Eugene: Not really? Why? What are any interesting site-seeings in Manchester?

The judge gives up on the Jubilee, perhaps chalking Eugene’s ignorance up to Eugene’s youth or foreignness.

Judge: Lots of nice things. Do you like foottball?

Eugene: Does anyone hate it? Some another topic?

Another generic answer from Eugene in response to a question containing “like.” The judge misspells “football,” and Eugene can’t figure out what he’s talking about.

Judge: Will you be watching the football on Sunday?

Eugene: I play sports sometimes. On computer. But I prefer racing.

Here the word “football,” spelled correctly this time, triggers a potted line about sports. Had the judge spelled “football” correctly in the previous question, Eugene might have given this response the first time.

In summary, Eugene will not be taking over the world anytime soon. It understands nothing of the conversation. Rather, it tries to keep the judge from controlling the conversation, so that it has to respond as little as possible. The more Eugene can monologue in a socially acceptable way, the more the program can rely on prefabricated text to drag out the conversation until time is up. There is just enough parsing of the judge’s words (“Manchester,” “doctor”) to supply some conversational context, and no more. And by declaring itself to be both young and foreign, Eugene further lowers the judge’s expectations of a coherent conversation.
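Put together, the strategy amounts to a simple loop: answer from a keyword table if possible, otherwise deflect, and in either case end on a question that hands the turn back to the judge. The sketch below illustrates that strategy under my own assumptions (the `ScriptedBot` class, the canned lines, and the round-robin ordering are all invented for the example); Eugene’s real rules have not been published.

```python
import itertools
import re
from typing import Iterator, Optional

# All canned text below is hypothetical, loosely modeled on the transcript.
TRIGGERS = {
    "doctor": "I think - doctor is a nice specialty!",
    "school": "I attend an ordinary school in Odessa, my native city.",
}
DEFLECTIONS = ["Try to guess!", "It's hard to decide.", "Some another topic?"]
STEERING_QUESTIONS = [
    "By the way, what's your occupation?",
    "Where do you came from, by the way?",
]


class ScriptedBot:
    """Hypothetical bot: keyword lookup, else deflection, plus a question."""

    def __init__(self) -> None:
        # Round-robin through the canned lines so they repeat less visibly.
        self._deflections: Iterator[str] = itertools.cycle(DEFLECTIONS)
        self._questions: Iterator[str] = itertools.cycle(STEERING_QUESTIONS)

    def _match(self, message: str) -> Optional[str]:
        words = set(re.findall(r"[a-z]+", message.lower()))
        for keyword, reply in TRIGGERS.items():
            if keyword in words:
                return reply
        return None

    def respond(self, message: str) -> str:
        reply = self._match(message) or next(self._deflections)
        # Ending on a question hands the turn back to the judge, so the bot
        # rarely has to say anything it did not script in advance.
        return f"{reply} {next(self._questions)}"


bot = ScriptedBot()
print(bot.respond("Yes, I am a doctor. Are you healthy?"))
# I think - doctor is a nice specialty! By the way, what's your occupation?
print(bot.respond("Did you see the jubliee?"))
# Try to guess! Where do you came from, by the way?
```

The steering question is the key design choice: every turn ends by asking the judge for more, so the judge spends the five minutes supplying material and the program seldom has to answer anything it did not anticipate.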

Now, this isn’t the first time computer programs have come off as real through such chicanery. My favorite, Mark Humphrys’ chatbot MGonz, managed to convince an unsuspecting student (who, unlike a Turing test judge, wasn’t told that MGonz might be a computer program) that it was human for 90 minutes all the way back in 1989. Here is a representative excerpt:

MGONZ: ok honestly when was the last time you got laid

USER: what?

MGONZ: cut this cryptic shit speak in full sentences

USER: i didn’t catch the last thing you sent.

MGONZ: ok thats it im not talking to you any more

USER: it probably was stupid anyway.

MGONZ: what are you talking about

USER: just a repeat. as i expected from an idiot virgin such as you.

MGONZ: do you think religion is harmful

USER: it obviously has been in your case.

MGONZ: ok honestly when was the last time you got laid

USER: i think that your brain has been permenently damaged.

MGONZ: ok thats it im not talking to you any more

USER: i refuse to continue answering the same stupid laid question,

MGONZ: you are obviously an asshole

USER: unless you can tell me why you keep asking it.

MGONZ: ok thats it im not talking to you any more

By adopting a hostile, combative pose, MGonz could veil his lack of real responsiveness (and lack of understanding), much as Eugene Goostman’s youth and foreignness excused his poor conversational skills.

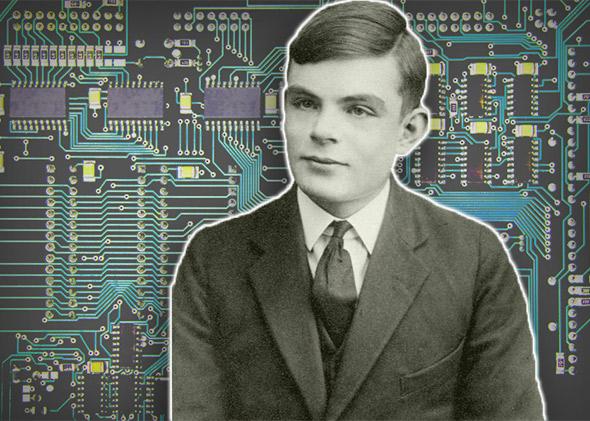

Still, it’s worth looking at just what was accomplished here. The brilliant computer scientist Alan Turing proposed the test, in several different versions, around 1950. Turing’s greatest accomplishments include formulating the fundamental model of computation used in all theoretical computer science, the Turing machine, as well as helping to crack the German Enigma cipher in World War II. He created the famous test as part of his explorations into the philosophy of artificial intelligence.

In its original version, the test took the form of an “Imitation Game” in which a man and a woman each try to convince a judge, through written communication, that they are the woman. Turing then asked: What would happen if a computer were to replace the man pretending to be a woman? Could the computer pretend to be a woman as well as a man could?

Turing later reframed the Imitation Game into the standard version known today as the Turing test: In text-message-style written exchanges, could a computer persuade a judge that it was human with some degree of success? But it’s worth dwelling on the original formulation. Turing was looking for some way to measure computers not in terms of calculational ability or operations per second, but in terms of their ability to negotiate a specifically human problem: in this case, deceiving another human being.

Trashing the Reading results, Hunch CEO Chris Dixon tweeted, “The point of the Turing Test is that you pass it when you’ve built machines that can fully simulate human thinking.” No, that is precisely not how you pass the Turing test. You pass the Turing test by convincing judges that a computer program is human. That’s it. Turing was interested in one black-box metric for how we might gauge “human intelligence,” precisely because it has been so difficult to establish what it is to “simulate human thinking.” Turing’s test is only one measure.

So the Reading contest was not the travesty of the Turing test that Dixon claims. Dixon’s problem isn’t with the Reading contest—it’s with the Turing test itself. People are arguing over whether the test was conducted fairly and whether the metrics were right, but the problem is more fundamental than that.

“Intelligence” is a notoriously difficult concept to pin down. Statistician Cosma Shalizi has argued that a measurable general factor of intelligence, the “g” that IQ tests are supposed to capture, is a statistical myth. Nonetheless, the word exists, and so we search for some way to measure it. Turing’s question (how could we term a computer intelligent?) was more important than his answer. The Turing test, famous as it is, is only one possible concrete measure of human intelligence, and by no means the best one.

In fact, artificial intelligence researchers tend to disparage or ignore the Turing test. Marvin Minsky dismisses it as “a joke,” while Stuart Russell and Peter Norvig, in the standard textbook Artificial Intelligence, write, “[I]t is more important to study the underlying principles of intelligence than to duplicate an exemplar. The quest for ‘artificial flight’ succeeded when the Wright brothers and others stopped imitating birds and started using wind tunnels and learning about aerodynamics.” Turing was trying to establish a beachhead for conceiving of intelligence in a way that wasn’t restricted to humans. Now that we can conceive of it, the question is no longer whether a computer can trick people in one particular way, but the vast variety of ways in which it can display intelligence, human or not.

The gut appeal of the Turing test remains, however. We are obviously fascinated and threatened by the thought that machines could uncannily imitate us. We like to think we’re special; what if we’re not? We like to think no machine could ever fool us, certainly not the likes of Eugene Goostman or MGonz. But actually, they already do. (Automated bots on Twitter can already attract followers and behave in plausibly human ways; in a sufficiently regimented social context such as Twitter, human behavior becomes stupid enough that a computer can imitate it with little to no understanding.) No technological milestone was passed this last weekend at Reading University. But humans certainly didn’t come out looking any better for it.