“When it went off the rails, it really went off the rails,” said David Lazer, a computer scientist at Northeastern University. Lazer was talking about Google Flu Trends, the internal Google analytical tool that tracks data such as keyword searches (things like “cough,” “fever,” and “exploding tongue”) by place and time in an attempt to predict the location and severity of outbreaks before people start going to the doctor en masse. If spikes in keyword searches indicate spikes in symptoms, so the thinking goes, then it should be possible to anticipate outbreaks sooner than the Centers for Disease Control and Prevention, which can only respond to what doctors report. (Disclosure: I used to work at Google, and my wife is a Google software engineer.)

Google Flu Trends (GFT) would appear to be the perfect benevolent “big data” project. Since its launch in 2008, GFT has been one of the flagship projects of Google.org, the company’s charitable arm, and as such it purports to represent the most munificent side of a company whose motto is “Don’t be evil.” But GFT also shows that Google’s charitable and corporate aims do not line up as neatly as the company would like.

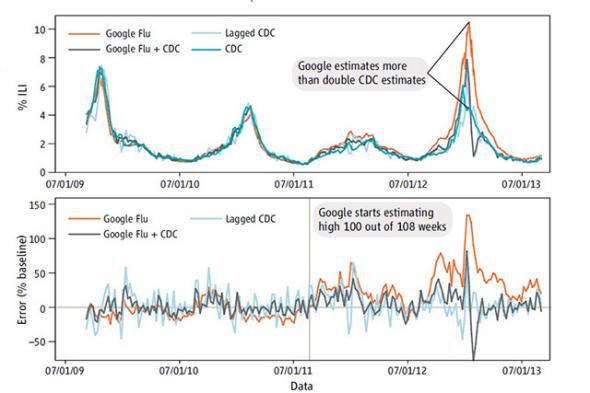

Lazer and three colleagues analyzed the project in a just-published report, “Google Flu Trends Still Appears Sick: An Evaluation of the 2013–2014 Flu Season.” The paper claims that GFT chronically and badly overestimated influenza-related doctor’s visits in the 2013–2014 season, following another huge overestimation in 2012–2013, a lesser overestimation in 2011–2012, and a significant underestimation of the first wave of swine flu in 2009. In these cases, GFT’s estimates were less accurate than those of a “lagged CDC” model, which projects current flu levels from the CDC’s own, slightly delayed reports and is itself considered a fairly good metric. Lazer and his co-authors also report inconsistencies in Google’s published data and an inability to get answers to their questions from Google.

Courtesy of Science
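To make the “lagged CDC” comparison concrete, here is a minimal sketch of how such a baseline can be scored against a search-based estimate. It assumes a two-week lag and uses mean absolute error as the yardstick; the report’s exact lag and error measures may differ, and the function names are hypothetical.

```python
from statistics import mean


def lagged_cdc_baseline(cdc, lag=2):
    """Naive forecast (assumed lag): predict week t with the CDC figure from week t - lag.

    Returns predictions for weeks lag .. len(cdc) - 1, since earlier weeks
    have no lagged value to draw on.
    """
    if not 1 <= lag < len(cdc):
        raise ValueError("lag must be at least 1 and shorter than the series")
    return cdc[:-lag]


def mean_absolute_error(predicted, actual):
    """Average absolute gap between two equally long series."""
    if len(predicted) != len(actual):
        raise ValueError("series must be the same length")
    return mean(abs(p - a) for p, a in zip(predicted, actual))


def compare_to_lagged_cdc(estimate, cdc, lag=2):
    """Score a search-based estimate and the lagged-CDC baseline on the same weeks.

    Both inputs are weekly flu-visit percentages aligned so that index t
    refers to the same week; the CDC's final figures serve as ground truth.
    """
    if len(estimate) != len(cdc):
        raise ValueError("estimate and CDC series must cover the same weeks")
    actual = cdc[lag:]
    return {
        "estimate_mae": mean_absolute_error(estimate[lag:], actual),
        "lagged_cdc_mae": mean_absolute_error(lagged_cdc_baseline(cdc, lag), actual),
    }
```

Given a season of aligned weekly figures, `compare_to_lagged_cdc` returns both errors. A search-based model earns its keep only if its error comes in below the baseline’s, which, according to Lazer and his co-authors, GFT’s recent estimates failed to do.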

The first big takeaway is that GFT’s picture of flu activity is partial. The GFT model uses a number of selected signals (such as keyword searches) that are then adjusted in an attempt to make them correlate with subsequent flu cases. Google’s search results themselves offer a loose analogy. When you search on a keyword, Google assumes you’re probably looking for one of its top 10 (or top three) results, based on data including what people clicked on after previous searches for that keyword. Most of the time, Google is right about what you’re looking for. But searching on a keyword no more guarantees that you will click on the top result than searching on “influenza” during flu season means you’re going to come down with the flu.

The job of Google’s researchers is to contain and quantify that margin of error, and they do so by incorporating other factors into their model. In the case of 2013–2014, they applied a dampening factor to mitigate the effects of spikes in media reporting on flu outbreaks—although it’s not clear that those media spikes were the cause of the previous overestimation.
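Google has not published the details of its current model, so the following is only an illustrative sketch of the basic idea of turning a search signal into a flu estimate; it is not Google’s method. It assumes a single aggregated signal (the share of searches matching some hypothetical list of flu-related terms) and an ordinary least-squares line fitted on the log-odds scale, a common way to model proportions. The function names are hypothetical.

```python
import math


def logit(p):
    """Log-odds of a proportion; defined only strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("proportion must be strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def inv_logit(x):
    """Map a log-odds value back to a proportion."""
    return 1.0 / (1.0 + math.exp(-x))


def fit_query_model(query_share, flu_rate):
    """Least-squares fit of logit(flu_rate) = intercept + slope * logit(query_share).

    query_share: weekly fraction of searches matching a hypothetical flu-term list.
    flu_rate: weekly fraction of doctor visits for influenza-like illness.
    Returns (intercept, slope).
    """
    if len(query_share) != len(flu_rate) or len(query_share) < 2:
        raise ValueError("need two or more aligned weeks of data")
    xs = [logit(q) for q in query_share]
    ys = [logit(f) for f in flu_rate]
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("query share must vary across weeks")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def estimate_flu_rate(model, query_share):
    """Apply a fitted (intercept, slope) pair to a new week's query share."""
    intercept, slope = model
    return inv_logit(intercept + slope * logit(query_share))
```

A fit like this inherits every distortion in its inputs: if news coverage drives up flu-related searches without a matching rise in illness, the estimate rises too, which is the kind of effect the 2013–2014 dampening factor was meant to offset.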

Better statisticians than me will be analyzing the report more closely. But there’s a larger and simpler issue that merits immediate attention, and that’s transparency. One of the main problems is that Google’s data is private. Very private. Google does not release its raw data, the details of its analyses, or even the set of keywords it uses for a particular result. This makes its studies impossible for outsiders to replicate or check. “We surmise that the authors felt an unarticulated need to cloak the actual search terms identified,” wrote Lazer et al. Google’s raw data, being part of its bread and butter, is not something it wants to share with the world; Google is not a research university. But science relies on transparency. Consider that a highly influential pro-austerity paper by economists Ken Rogoff and Carmen Reinhart was debunked only when Thomas Herndon, a graduate student at the University of Massachusetts Amherst, obtained the raw data, attempted to replicate the analysis, and found that he could not. The implication was that the harsh austerity programs put in place in Europe had been justified in part by faulty research.

Even if Google’s methodology is perfect (and there’s reason to believe it’s not), it still needs validation. Here Google’s corporate and research agendas come into conflict: If it wants credit for scientific research, it needs to show its work, even at some cost to its competitive advantage.

Like most scientists, statisticians are notoriously better at pointing out flaws in existing models than at constructing accurate ones. Probability is one of the most important yet vexing fields, and the temptation to gloss over its difficulties or jump to conclusions is immense. That’s part of the reason pretty much every news article declaring “X shown to be linked to Y!” can be safely ignored: such claims sound a lot more meaningful than they actually are. Much of GFT is a case of “Keyword searches shown to be linked to flu cases!” That claim is undeniably true, and GFT’s evidence shows as much. But nailing down the exact nature of that relationship, and whether the correlation is reliable and controllable enough to stand on its own without further factors, remains an open problem.

There is great merit in the GFT approach in terms of synthesizing high-level knowledge about the interests and habits of Internet users. So I don’t go as far as marketing analytics expert Kaiser Fung, who argues that such “big data” analyses are currently so abysmal as to be effectively useless. But the hype around them, as with many new technologies, definitely exceeds their current results. If Google wants Flu Trends to be treated as more than a promising pilot project, its researchers should engage publicly with their critics and reveal far more about their models and aggregate data. We should entrust public health to Google’s results only once they can be independently verified.