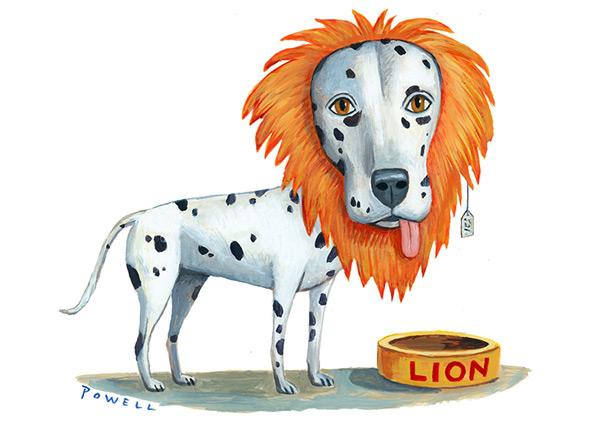

It’s a scandal that’s been quietly rocking zoos all around the world. For nearly a decade now, an increasing number of zookeepers at smaller zoos and animal parks have been taken in by an embarrassing scam. Several years after they acquire one or more lions and put them on display, a sharp-eyed zoo-goer points out that the so-called lions are nothing more than carefully coiffed dogs. But now, mortified zookeepers can breathe a sigh of relief, because a solution to the fake-lion problem is at hand. After intensive collaboration with the Université Joseph Fourier in France, a coalition of veterinarians and zookeepers has developed a genetic test, dubbed LionDetect, that quickly and efficiently determines whether a newly acquired lion is the genuine article or a mastiff with a mane. The test isn’t perfect; it can’t yet sound the alarm when someone tries to sell a rubber snake, a fake panda, or a bogus snow leopard to an animal park. Nevertheless, zoo personnel everywhere can rest easier now that they’re far less likely to be embarrassed by the purchase of a phony lion.

Don’t believe that story? Of course not. Even if there were high-level zoo managers so mind-numbingly stupid as to not be able to tell the difference between a lion and a dog, there’s no way that a shaved dog could fool the keepers, the veterinarians, and the other zoo employees. They’d have to be in on the scam. And the idea that the solution to phony lions is a genetic test—well, that’s about the dumbest part. If you can’t tell feline from canine with the most cursory of inspections, well, let’s just say that you should cross veterinarian and zookeeper off your list of potential careers.

Yet just last week, a scientist at the Université Joseph Fourier did almost exactly the same thing, not for the business of animal parks but for the business of scientific publishing. In collaboration with the Springer publishing house, he released SciDetect, a computer program that spots a particular category of phony papers that has been plaguing computer science publications. (Just last year Springer had to pull 18 fake papers, while another publisher, IEEE, had to pull more than 100.) “SciDetect indicates whether an entire document or its parts are genuine or not,” the press release brags. “The software reports suspicious activity by relying on sensitivity thresholds that can be easily adjusted.” Great news, right? Not so much. The fake papers it aims to detect, which are generated by an algorithm known as SCIgen, are the shaved mastiffs of the computer science world. They are so obviously fake that nobody who has any business being within 10 feet of a computer science journal would fail to spot them with even the most careless examination. A technological solution is completely unnecessary. So why did Springer spend so much time and effort (and presumably money) to build SciDetect?

The answer to that question exposes the dirty secret of modern scientific publishing. It is that secret, not the occasional publication of fake papers, that the scientific publishing world should be mortified about, for it is damaging the underpinnings of the whole scientific endeavor.

When something at the core of scientific publishing begins to rot, the smell of corruption quickly spreads to all areas of science. This is because the act of publishing a scientific finding is an essential part of the practice of science itself. You want a job? Tenure? A promotion? A juicy grant? You need to have a list of peer-reviewed publications, for publications are the coin of the scientific realm.

This coin has worth because of a long-standing social contract between scientists and publishers. Scientists hand over their work to a publication for free and sometimes even pay a fee of several hundred to several thousand dollars for the privilege. What’s more, scientists often feel duty-bound to vet their colleagues’ work for little or no compensation when a publication asks them to. In return, the publications promise a thorough review process that establishes that a published article has some degree of scientific merit. Just like modern coinage, a scholarly publication derives most of its value from a stamp of approval issued by a trustworthy body.

However, it’s a coin that’s easy to counterfeit, especially now that anyone with an Internet connection and publishing software can cobble together a respectable-looking “publication” in a few hours. Take the scientists’ work, charge them a hefty fee for publishing it, but skimp on (or do away with) the review process. It’s a goldmine. Just slap up pretty much every submitted paper, no matter how poor the quality, and you’ve got a steady stream of income with almost no expense. These kinds of predatory publications have been cropping up in huge numbers in the past decade—publications that are eager to publish such seminal articles as “Cuckoo for Coco [sic] Puffs” and “Get Me Off Your Fucking Mailing List.” (The latter, naturally, was inspired by the huge volume of publish-in-my-worthless-journal spam that fills every academic’s mailbox.) It’s blindingly obvious that nobody bothered even to look at these submissions before offering to publish them—fake peer-review reports notwithstanding.

But it’s not just the newcomer predatory publishers that are counterfeiting. The SCIgen scandal shows that even old-fashioned big-budget scientific publishing houses are sometimes faking the process of scholarly peer review.

The SCIgen algorithm is more than a decade old now; written by MIT students, it strings together nonsensical phrases larded with computer science buzzwords, slaps on a graph or two, throws in some phony references … voilà! You’ve got a reasonable facsimile of a comp-sci paper. Reasonable, that is, if you know absolutely zilch about computers and have no reading comprehension skills. Take, for example, this introduction to a SCIgen paper:

> The development of congestion control has synthesized checksums, and current trends suggest that the exploration of scatter/gather I/O will soon emerge. The notion that analysts connect with compilers is usually well-received. The notion that biologists collude with 802.11b is usually considered robust. However, simulated annealing alone cannot fulfill the need for the construction of 802.11b.

It’s not just you; nobody on the planet can figure out what that paragraph means. And the more you know about computers, the less sense that passage makes. You might not know that 802.11b is a standard for wireless networking, but if you did, you’d have a hard time figuring out how biologists could collude with it. Checksums aren’t synthesized, and if they were, they certainly wouldn’t be synthesized by the development of congestion control. Pretty much every single sentence in the entire article is a meaningless hash of jargon. The likelihood of a real computer scientist being fooled by a SCIgen paper is roughly the same as that of a classicist mistaking pig Latin for an undiscovered Cato oration. No peer reviewer could ever have let it slip by.

Yet in 2007, that particular SCIgen paper, “Cooperative, Compact Algorithms for Randomized Algorithms,” was accepted by and printed in a “peer-reviewed” journal: Applied Mathematics and Computation, published by Elsevier, one of the giants of the scientific publishing industry. And for the better part of a decade, SCIgen-written papers have been appearing in conference proceedings and computer science journals all around the world, exposing bogus peer review wherever it crops up. (It’s not hard to find oodles and oodles of examples if you know what to look for.) So, last year, when Springer and IEEE had to withdraw more than 120 articles written by SCIgen, it was a clear demonstration that fake peer review was a big deal in major mainstream scientific publications, not just predatory ones. (Springer, with more than 2,000 journals in its portfolio, is a juggernaut like Elsevier; IEEE is the leading society for electrical engineers and computer scientists and a major publisher in its own right.)

It’s not just an issue in computer science. In 2013 science journalist John Bohannon created a biomedical equivalent of SCIgen and submitted more than 300 obviously bogus papers to publishers around the world. More than half were accepted. Though Bohannon’s sting was mostly aimed at predatory publishers, some reputable houses were caught in the dragnet, such as Dove, Sage, and Elsevier. More recently I exposed more than 100 journal articles that looked like they were products of a scientific version of Mad Libs; they tended to have obvious signs of plagiarism or poor scholarship of various sorts. A rigorous peer-review process should have caught these problems. Yet these papers appeared in journals published by PLoS, Nature Publishing Group, Wiley, Taylor & Francis, BioMed Central, and other publishing powerhouses. On top of that, a number of publishers have had to admit that they were tricked into using nonexistent peers to conduct the reviews. (Last week BioMed Central wrapped up its investigation into 43 articles that were “published on the basis of reviews from fabricated reviewers.”)

Peer review isn’t perfect. Even under the best of circumstances and in the best of journals, some truly awful papers are going to get published. And when scientists are willing to lie and cheat to get their articles into journals, there’s no way that the journals will be able to prevent some from slipping through. But so long as publishers ensure that there’s a good-faith attempt to vet articles and to winnow out the chaff—rather than merely trying to make as much money as possible by offering a résumé-building publication to all comers—there will always be a role for peer-reviewed journals.

Which is why Springer’s deployment of a SCIgen-detecting algorithm is so damning. A SCIgen paper, remember, is so transparently fake that even the most cursory review—like, say, reading the first four sentences of the article—should be enough for a computer science novice to know that it’s totally bogus. To spend any time, effort, or money to come up with a technological solution to screening out those papers is a tacit admission that even at the most reputable publishing houses, some peer-reviewed journals are incapable of providing even the most minimally competent peer review. It’s an open declaration that even the people in charge of the mint are now churning out counterfeit coins, publishing “peer-reviewed” journals that have no functional review process. An automated screen for SCIgen papers is simply an attempt to counteract an incredibly effective mechanism for exposing publishers whose journals are faking it.

We’re entering an era of cargo cult peer review. It now appears that even major scientific publishers are peddling products that have all the trappings of a scholarly journal but are hollow at the core. As it is, the majority of research published in the scientific literature could be wrong. Try to imagine just how much worse it is going to get as scholarly journals turn themselves into vanity presses.