A bumper sticker was popular in the city where I went to college. It was yellow, with large black print that read: “Mopeds are dangerous.” Beneath the text was the blocky silhouette of a moped and nothing else. The sticker didn’t illustrate the claim that mopeds were dangerous—it didn’t show a moped crumpled against a tree or running someone over—but it was eye-catching, the yellow contrasting sharply with the black, and on message. I believed that bumper sticker, and still do, for all that I’ve rarely encountered a moped or read about a moped accident or even really grasped the difference between a moped and a Segway.

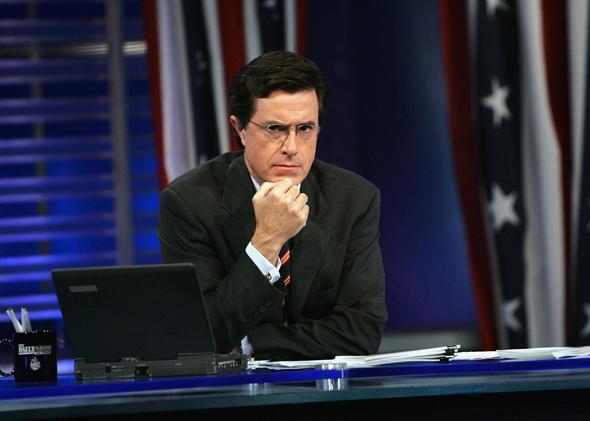

Truthiness is the word Stephen Colbert coined to describe the intuitive, not always rational feeling we get that something is just right. Are mopeds dangerous? Sure, if by dangerous you mean significantly riskier than cars but slightly less direful than motorcycles. They are not dangerous compared to smoking a lot of cigarettes or owning a gun. The point is that, while nothing about the bumper sticker backed up its ominous claim, I automatically accepted it.

Truthiness is “truth that comes from the gut, not books,” Colbert said in 2005. The word became a lexical prize jewel for Frank Rich, who alluded to it in multiple columns, including one in which he accused John McCain’s 2008 campaign of trying to “envelop the entire presidential race in a thick fog of truthiness.” Scientists who study the phenomenon now also use the term. It humorously captures how, as cognitive psychologist Eryn Newman put it, “smart, sophisticated people” can go awry on questions of fact.

Newman, who works out of the University of California–Irvine, recently uncovered an unsettling precondition for truthiness: The less effort it takes to process a factual claim, the more accurate it seems. When we fluidly and frictionlessly absorb a piece of information, one that perhaps snaps neatly onto our existing belief structures, we are filled with a sense of comfort, familiarity, and trust. The information strikes us as credible, and we are more likely to affirm it—whether or not we should.

How do our brains decide which assertions seem most lucid and credible? One way to ease cognitive processing is to surround an idea with relevant details. This is similar to what happens with priming: Barrage people with words like leash, collar, tail, and paw and then ask them for a word that rhymes with smog. They’re much likelier to fetch the word dog than, say, bog or agog, because the neural nebula containing Fido is already active. In one experiment, people who read the sentence “The stormy seas tossed the boat” were more prone than those who read the sentence “He saved up his money and bought a boat” to report they’d come across the word boat in a previous exercise, whether they really had or not. The “semantically predictive” statement, salted with seafaring concepts like storm, allowed readers to anticipate the kicker: boat. Then, when the boat appeared, they processed the word so fluently they assumed they must have encountered it previously. The low cognitive effort created an illusion of familiarity.

Photographs can also reduce the amount of cognitive effort needed to understand a claim. This easy processing beguiles us into viewing the claim as friendly, familiar, and correct. In one study, Newman and her colleagues showed volunteers, who were mostly college students, names of politicians and other moderately famous people they were unfamiliar with. Half of the names belonged to living celebrities and half to dead ones. Some names were paired with images of the person they named; some stood alone. One team of participants was asked to assess the truth of the statement “This celebrity is alive,” while the second team did the same for the claim “This celebrity is dead.”

The researchers found that people in both groups more often credited the statement when a picture accompanied it. Newman wasn’t too surprised that photos increased the truthiness of “alive” claims—we trust photography as a medium to document reality, and the images depicted the celebrities as animate humans in the world. What fascinated her, she wrote in her paper, was how “the same photos also inflated the truthiness of ‘dead’ claims: The photos did not produce an ‘alive bias’ but a ‘truth bias.’ ”

To clinch their findings, the scientists then presented participants with a series of assertions: “Turtles are deaf,” “The liquid inside a thermometer is liquid magnesium,” “Macadamia nuts are in the same evolutionary family as peaches.” When people read the claims alongside photos that related to but did not illustrate the claims (a turtle, a thermometer, a bowl of macadamia nuts), they were more likely to judge the claims to be true. Why? The visual cues made the statements easier to understand, which gave them a halo of trustworthiness, of truthiness.

The idea of cognitive fluency (that certain parcels of information go down easy, comprehension-wise) applies to sounds as well. We like and trust what we can comfortably pronounce, preferring to tour a new city with a (hypothetical) guide named Andrian Babeshko rather than one named Yevgeny Dherzinsky. What’s more, when Newman and her colleagues gave psych students a list of unverified facts and told them that the trivia had come from their peers, the students were less likely to believe the claims were true if the “peers” had tongue-twisty names. (“Our results have practical implications,” the researchers wrote. “For instance, would the pronounceability of eyewitnesses’ names shape jury verdicts?”) The bias toward low cognitive effort even extends to font and color schemes: In a landmark 1999 study, Rolf Reber and Norbert Schwarz found that people were more likely to judge the statement “Osorno is in Chile” true when it appeared as blue text on a white background than when it was barely visible as yellow text on a white background. (Osorno really is a city in Chile, FYI.)

Which brings me back to my bumper sticker. Arresting color contrast? Check. Simple, easy-to-understand language? Check. A handy visualization? Check. With no evidence for or against the oracular pronouncement that “Mopeds are dangerous,” I absolutely believe that they are. I fear them. I hope never to meet one in a dark alleyway. This is truthiness.

We’ve established that we readily believe things that don’t ask too much of us mentally. But truthiness has a flip side: When we are not asking much of ourselves mentally, are there particular things we are more likely to believe?

It is no accident that the current understanding of truthiness unfurled from Stephen Colbert’s satire of the American right. Psychologists have found that low-effort thought promotes political conservatism. In a study by Scott Eidelman, Christian Crandall, and others, volunteers were placed in situations that, by forcing them to multitask or to answer questions under time pressure, required them to fall back on intellectual shortcuts. They were then polled about issues such as free trade, private property, and social welfare. Time after time, participants were more likely to espouse conservative ideals when they turned off their deliberative mental circuits. In the most wondrous setup, the researchers measured the political leanings of a group of bar patrons against their blood alcohol levels, predicting that as the beer flowed, so too would the Republican talking points. They were correct, it turns out. Drunkenness is a tax on cognitive capacity; when we’re taxed too much, we really do veer right.

The researchers pointed to a few cognitive biases that might prompt an overloaded mind to embrace pillars of conservative thought. First, we reflexively attribute people’s behavior to their character rather than to their circumstances (although studies suggest this “fundamental attribution error” is more prevalent in the West). This type of judgment invites a focus on personal responsibility. Second, we learn more easily when knowledge is arranged hierarchically, so in a pinch we may be inclined to accept fixed social strata and gender roles. Third, we tend to assume that persisting and long-standing states are good and desirable, which stirs our faith in the status quo absent any kind of deep reflection. Fourth, we usually reset to a mode of self-interest, so it takes some extended and counterintuitive chin-stroking for those not actively suffering to come around to the idea of pooling resources for a social safety net. (Elaborating on his original definition of truthiness, Colbert told interviewers: “It’s not only that I *feel* it to be true, but that *I* feel it to be true. There’s not only an emotional quality, but there’s a selfish quality.”) Consider the conservative (and dead wrong) maxim: “A rising tide lifts all boats.” Clear visual logic, a lilting simplicity, and the complacent sense that seeking my good is good enough: the saying is truthiness gold.

And conservative ideology sparks something elemental and reflexive in our predator-wary neural wiring. Motivated social cognition theory states that right-wing political beliefs arise from a need to manage uncertainty and threat. A 2014 study from Rice University showed that self-identified Republicans responded more intensely and fearfully than liberals to negative stimuli (pictures of burning houses or maggot-infested wounds); even in neutral settings, brain scans revealed increased activity in the neural corridors that react to danger. Obama is setting up death panels. Immigrants will flow over the borders until the country is unrecognizable. To researchers, such theories are more than AM radio fear-mongering—they are beliefs steeped in truthiness, made potent by the ease with which they are processed in the receptively anxious echo chambers of Rush Limbaugh’s cranium.

Of course, the overlap between GOP tenets and low-hanging cognitive fruit does not mean that conservatives arrive at their beliefs by disconnecting their brains. (“Conservatives are dumb” would be the reductive, unfair, truthy version of this article’s argument.) Just as sometimes the simplest answer is also the correct one, sometimes the dimly intuited dangers bedeviling our reptilian nervous systems are real. When thoughtful reflection and instinct point to the same conclusion, it’s the mode of transport that matters, at least as much as the destination. On that note, never ride a moped.