Who Will Win Best Picture?

A cool new tool for predicting the biggest Oscar of the night.


Heading into Sunday night’s Academy Awards, odds are that either 12 Years a Slave or Gravity will be walking away with the Best Picture award. But what if 12 Years a Slave starts sweeping the acting awards on Oscar night? What if Steve McQueen upsets Alfonso Cuarón for Best Director? Does that mean 12 Years a Slave’s odds of winning will be even higher when it’s time to give out the final statuette of the night?

The answer is yes—if you have faith in numbers. Below is an Oscars “What-If” calculator, which will allow you to see how victories in various categories, from acting to editing to writing, affect the odds of the three Best Picture front-runners taking home the statuette. As you’ll see, some categories have more impact on the Best Picture odds than others. For example, Best Actor has more influence on Best Picture odds than Best Actress, as the male category is historically more likely to be linked to the eventual winner. However, neither acting category is as indicative of the Best Picture winner as Best Editing.

Before the big night, the calculator will let you use your hunches about the early-in-the-evening categories to predict the big winner. And on Oscar night, you can fill in the winners as they’re called to the podium and adjust your Best Picture guess accordingly.

Discussion: Many statisticians have tried to predict who will win Oscar gold based on the contenders’ performance in previous award competitions, like the Golden Globes or Directors Guild Awards. But when it comes to Best Picture, we don’t get some of the most predictive information until the ceremony starts. For instance, 33 of the last 40 directors to win Best Director later saw their film win Best Picture, making Best Director a stronger indicator of the ultimate Oscar prize than any pre-Oscars award or nomination.

Of course, these probabilities are only good if you assume this year’s Oscars will be similar to past years’—an assumption that may not hold for many reasons. Many Oscars watchers feel that 12 Years a Slave is the favorite to win Best Picture, but its director, Steve McQueen, is a long shot to win Best Director. This could be a year when the awards go against the numbers, and human intuition reigns supreme over statistics.

How much confidence should you have in any statistical analysis of the Oscars? I’m someone who usually believes that numbers trump instincts and data points are more telling than narratives. But after examining the problem of predicting this year’s Best Picture winner, I’m now convinced that when it comes to the academy, fancy formulas aren’t actually of much use, my handy widget notwithstanding.

The problem isn’t that basic data points—like nominations and wins at other awards shows—are not a good indicator of who will win the Best Picture Oscar. The problem is that Best Picture is often a very lopsided contest, so an elaborate quantitative model hardly seems worth the effort. Last year, Argo won best picture at just about every other awards show; we didn’t really need Nate Silver’s model to tell us it was a shoo-in for the Academy Award. Silver did predict Argo would win, but so did everyone else. In Las Vegas, Argo’s odds were 1-7, meaning you had to bet 7 dollars to get 8 back (putting the probability at roughly 88 percent).
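For readers who want to check the arithmetic behind that 88 percent, here is a minimal Python sketch. The function name `implied_probability` is made up for this illustration, and the calculation ignores the bookmaker's margin, so it is a rough reading of the odds rather than a true probability.

```python
def implied_probability(stake: float, profit: float) -> float:
    """Break-even probability for a bet that risks `stake` to win `profit`.

    At 1-7 odds you risk 7 to win 1, so you get 8 back on a win.
    The bet breaks even when you win 7 times out of 8.
    """
    if stake <= 0 or profit <= 0:
        raise ValueError("stake and profit must be positive")
    return stake / (stake + profit)


# Argo at 1-7: bet 7 dollars to get 8 back.
argo = implied_probability(stake=7, profit=1)
print(f"{argo:.1%}")  # 87.5%
```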

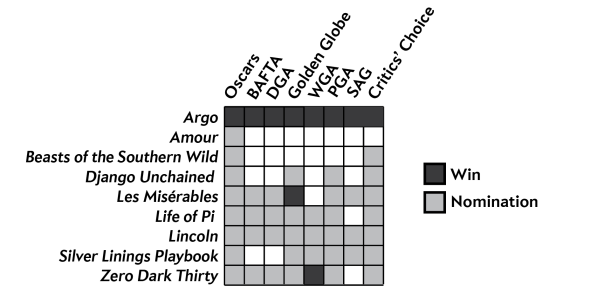

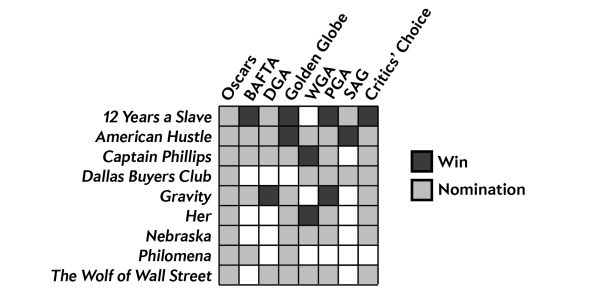

But what about a year like this one, when the other awards have not been as consistent? Let’s look at the other awards so far this Oscar season:

The eventual winner is clearly less obvious this time around. 12 Years a Slave has the most wins, but was notably beaten out by Gravity for the DGA, which is the most predictive of all the awards; its winner's film goes on to take the Best Picture Oscar 80 percent of the time.

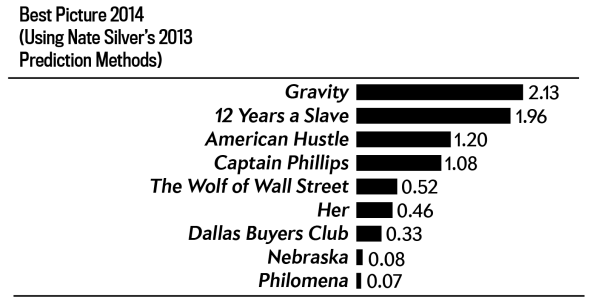

Nate Silver has not released any predictions for 2014. Out of curiosity, I used his scoring system from last year, which assigns a unique weight to each of 16 pre-Oscars awards, to see what his projections would be for this year (assuming he would stick with the same methods). Here’s what it’d look like:

These rankings seem reasonable, but you’re probably wondering what Gravity’s 2.13 score means. How much of a favorite would Silver consider Gravity to be over 12 Years a Slave, which only has a score of 1.96? And is Gravity only twice as likely to win as Captain Phillips, which has a 1.08?

The answer is no: the numbers in Silver’s system curiously do not relate to any probability metric. They are used for ranking, but unlike Silver’s election odds, they are not expressed as probabilities. If each score corresponded to that movie’s share of the total probability, Argo would have been given only a 53 percent chance of winning by his metric.
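To see what treating scores as shares would involve, here is a small Python sketch. It uses only the three scores quoted above, not the full field, so the resulting shares are purely illustrative and are not Silver's numbers. The helper name `score_shares` is invented for this example.

```python
def score_shares(scores):
    """Divide each score by the total so the shares sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("scores must sum to a positive number")
    return {film: score / total for film, score in scores.items()}


# Only the three scores quoted in the text; every other nominee is
# left out, so these shares overstate each film's slice of the pie.
quoted = {"Gravity": 2.13, "12 Years a Slave": 1.96, "Captain Phillips": 1.08}

shares = score_shares(quoted)
for film, share in shares.items():
    print(f"{film}: {share:.1%}")

# The raw ratio behind the "only twice as likely?" question.
ratio = quoted["Gravity"] / quoted["Captain Phillips"]
print(f"Gravity / Captain Phillips: {ratio:.2f}")
```

Read this way, Gravity would be just under twice as likely to win as Captain Phillips, which is exactly the kind of conclusion the scores were never designed to support.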

It’s of course important to remember that the data Silver uses to predict the Oscars is nowhere near as comprehensive as the data he uses to predict elections. For the Oscars he went back only 25 years. Going back 25 years in political polling may actually encompass thousands of contests, giving you many more opportunities to judge the efficacy of a given poll. Going back 25 years to predict the Oscars provides only 25 data points. Going back much further, however, may provide diminishing returns, as the awards season has changed over time and many awards now considered important (PGA, SAG, WGA) aren’t that old.

The other big problem, from a statistical point of view, is that awards nominations and wins are censored data—that is, not all the information is visible. When a political election occurs, we know the exact final tally a candidate receives, so we can tell how accurate the pre-election polling was. When a movie wins Best Picture, we don’t know whether it was a unanimous choice or whether it squeaked by on a slim plurality, making validation of predictions close to impossible.

So predicting the Oscars with data may be more difficult than any statistician, including myself, would like to admit. But I gave it a shot in the calculator above. The methods I used to create my predictions were similar to Silver’s, but I used a method called multinomial logistic regression, which returns outcomes as probabilities that sum to one instead of arbitrary scores like those in Silver’s model. My dataset consisted of 40 years’ worth of awards data (or as far back as each award dates). Forty years is still not ideal for this type of analysis, both because the sample is relatively small and because it’s unclear how relevant the 1974 Oscars are to the 2014 Oscars, but it is large enough to produce predictions while relying solely on past returns.
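The reason a multinomial logistic regression yields probabilities that sum to one is its final step, often called softmax: each outcome's score is exponentiated and divided by the total. The Python sketch below shows only that step. The film names and scores are hypothetical placeholders, not values from the model described here, and a real model would first estimate each score from past awards data.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into probabilities that sum to 1."""
    # Subtracting the largest score first avoids overflow in exp()
    # and does not change the result.
    peak = max(scores.values())
    exps = {name: math.exp(value - peak) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


# Hypothetical scores for three placeholder films (an assumption made
# for illustration, not output from any fitted model).
hypothetical_scores = {"Film A": 2.0, "Film B": 1.5, "Film C": -1.0}

probs = softmax(hypothetical_scores)
for film, p in probs.items():
    print(f"{film}: {p:.1%}")
print(f"total: {sum(probs.values()):.3f}")
```

Unlike a raw ranking score, every number here can be read directly as a chance of winning, and adding a film to the field automatically shrinks everyone else's share.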

Here are the odds produced by my model before any awards during the ceremony are revealed:

Gravity: 35.7 percent

12 Years a Slave: 33.4 percent

American Hustle: 26.3 percent

Her: 2.6 percent

The Wolf of Wall Street: 0.6 percent

Philomena: 0.4 percent

Dallas Buyers Club: 0.3 percent

Captain Phillips: 0.2 percent

Nebraska: 0.1 percent

(Only the top three films were included in the calculator at the top of the page, and their probabilities were rescaled appropriately to sum to 100 percent.)
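The rescaling mentioned in that note is simple division: each of the top three probabilities is divided by their combined total. A short Python sketch, using the three figures listed above (the variable names are just for illustration, and the calculator's own displayed values may be rounded differently):

```python
# Pre-ceremony probabilities for the top three films, in percent,
# taken from the list above.
top_three = {
    "Gravity": 35.7,
    "12 Years a Slave": 33.4,
    "American Hustle": 26.3,
}

# The three together account for 95.4 percent of the total.
combined = sum(top_three.values())

# Divide by the combined total so the three sum to 100 percent.
rescaled = {film: 100 * p / combined for film, p in top_three.items()}

for film, p in rescaled.items():
    print(f"{film}: {p:.1f} percent")
# Gravity: 37.4 percent
# 12 Years a Slave: 35.0 percent
# American Hustle: 27.6 percent
```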

Do I think the predictions I produced are as good as any statistical model could provide? I think they are reasonably close to what anyone could do with modeling. Do I think the predictions above are as good as Vegas odds or the work of a smart pundit? No. If there is one prediction I am willing to make, it’s that it will take many more years of data piling up before the Oscars can be forecast accurately with numbers alone.

*Correction, Feb. 28, 2014: This article originally misspelled the name of the movie The Book Thief and David O. Russell's last name.