Visitors to Microsoft in Redmond, Wash., can avail themselves of free shuttle cars to help them make their way about the sprawling suburban campus. The cars are clean and quiet and always appear within minutes of being summoned, and the drivers always know where they are going. Or almost always: During my weeklong stay my drivers repeatedly told me this was their first trip to Building 126, Microsoft’s corporate archives. One or two had to look it up on Bing Maps.

Nonetheless, the facility is impressive: a dedicated building with 6,600 shelf-feet of cold storage, all holdings duly packaged in the archive world’s ubiquitous soft gray Hollinger boxes, as well as earthquake safeguards and redundant off-site storage at Iron Mountain in Pennsylvania. I had come to Microsoft to do research for the book I am writing on the literary history of word processing. Microsoft Word, I reasoned, was the most widely used piece of writing software in the world, the No. 2 pencil of the digital age. Outside researchers here are scarce indeed, but I was lucky enough to get in thanks to assistance from Microsoft Research Connections, where there are people who understand why an English professor might be interested in what they do. Over the course of a week spent sequestered in the remote archives building on the edge of the Microsoft campus, I came to appreciate exactly what it means to think about software as an artifact: not as some abstract, ephemeral essence, not even as lines of code, but as something made, something that builds up layers of tangible history through the years, something that contains stories and subplots and dramatis personae. And I started thinking anew about how we preserve software for the future: future users, future programmers, and future historians. If, hundreds of years from now, a literary scholar wanted to run Word 97, the first consumer version to implement the popular “track changes” feature, how would she find it? What machine would accommodate this ancient artifact of textual technology?

Just as early filmmakers couldn’t have predicted the level of ongoing interest in their work more than 100 years later, who can say what future generations will find important to know and preserve about the early history of software? While the notion that someone might go diving into some long-outmoded version of Word may seem improbable, knowledge of the human past turns up in all kinds of unexpected places. Historians of the analog world have long known this: Writing, after all, began as a form of accounting. Would the Sumerian scribes who incised cuneiform into wet clay have thought their peculiar angular scratchings would be of interest to a future age?

We tend to conceptualize software and communicate about it using very tangible metaphors. “Let’s fork that build.” “Do you have the patch?” “We can use these libraries.” “What’s the code base?” Software may be, as David Gelernter once wrote, stuff unlike any other, but it is still a thing, tangible and present for all of its supposed virtual ineffability. Software developers themselves have a name for the way in which software tends to accrete as layers of sedimentary history, fossils and relics of past versions and developmental dead ends: cruft, a word every bit as textured as crust or dust or fluff, all of which refer to physical rinds and remainders.

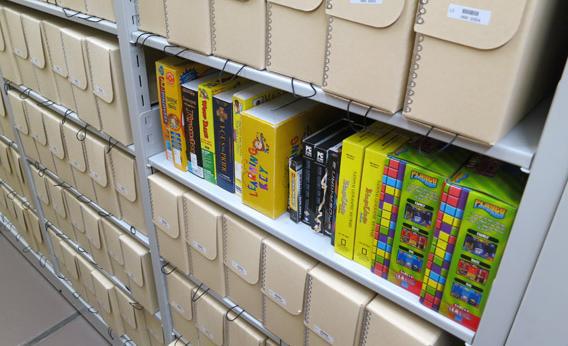

And even outside the program itself, what else is the software? Is it just the code, or is it also the shrink-wrapped artifact, complete with artwork, documentation, and “feelies,” extras like faux maps or letters that would become part of the play of a game? Is it the program or the operating system? What about the hardware? The firmware? What about controllers and other peripherals integral to the experience of a given piece of software? How to handle all the different versions and iterations of software? What about fan-generated add-ons like mods and macros? What about discussion boards and strategy guides and blogs and cheat sheets, all of which capture the lively communities around software?

More simply: What do we save, and how do we save it?

—

In the green hills west of Washington, D.C., sits the Library of Congress’ Culpeper, Va., campus, properly known as the Packard Campus for Audio-Visual Conservation. Built with support from David Woodley Packard, it’s a state-of-the-art facility whose sweeping lines and airy interiors seem more characteristic of its Silicon Valley patronage than the Virginia countryside or federal bureaucracy. The 45-acre Packard campus is charged with the long-term safekeeping of the nation’s films, television programs, radio broadcasts, and sound recordings, which together form the largest audio and moving-image collection in the world.

For decades the library has also been receiving computer games, and in 2006 the games became part of the moving-image collections at the Packard campus. While the library registers the copyrights, what it means to preserve and restore vintage computer games—or any kind of computer software—is less clear. What kind of intervention is necessary to keep computer games from meeting the fate of the 80 percent of pre-1930 American films now lost forever thanks to their volatile nitrate film stock?

That question was recently explored at a two-day meeting dubbed “Preserving.exe” at the Library of Congress’ Madison building in Washington. A roomful of computer historians, technical experts, archivists, academics, and industry representatives discussed what role the nation’s cultural-heritage institutions, from the library and the Smithsonian to the Internet Archive, ought to play in gathering and maintaining collections of games and other software for posterity. While libraries, archives, and museums now routinely confront the challenges of massive quantities of files and records in digital format, actual software—“executable content” in the parlance of the meeting—raises some especially vexed problems for preservation.

Fans of vintage computer games will be familiar with emulators, software programs that create a virtual simulation of some bygone computer, allowing one to actually execute legacy code in a kind of Potemkin environment. The experience of using an emulator can be uncanny, with sounds, graphics, and the behavior of the original system all instantly recreated on a modern machine. Emulators, moreover, are not only duplicates of the original system; they also allow for forms of study (a peek into the underlying state of the program’s machine code, for example) that the original software running on original hardware couldn’t. Examples of this approach were well represented at the Library of Congress meeting. The Olive project at Carnegie Mellon is investigating streaming virtualization technologies, allowing users to configure legacy hardware as needed from the cloud; Jason Scott, the flamboyant archives populist and activist, demoed a browser-based system that would allow users to embed vintage computers as they do YouTube videos.

Still, others at the meeting emphasized the need to retain actual exemplars of old systems, in order to allow users the most complete experience of the original look and feel. While a good emulator can work marvels—duplicating the processor speed of the original hardware, for example—the impossibility of recapturing the fullness of the original experience was brought home again and again during the meeting. Sometimes the interaction between software and hardware can be especially subtle. I well remember the frustration of banging away at the keyboard while playing old-school interactive fiction games like Wishbringer or Zork, only to be told by the parser “I DON’T UNDERSTAND THAT” or “YOU CAN’T DO THAT HERE.” But when I hit upon the right solution, I’d know it immediately: The program would pause, the disk would audibly spin up, and a new text description would be loaded as the progress of the game advanced. This sort of pacing and rhythm established the tempo of the experience, in the same way that processor speeds do for more kinetic forms of game play.

But in a world with limited shelf space (and even more limited funding for archives), perhaps my experience of playing Adventure as a 14-year-old can’t make the historical cut. “We don’t want to be a computer history museum,” presenters repeated over and over again at the meeting. After all, machines are fickle and temperamental, and they take up space. Replacement parts have to be purchased on eBay or Craigslist, a red flag for certain kinds of institutions. As Clifford Lynch, director of the Coalition for Networked Information, observed, libraries provide access to 18th-century books, but they don’t promise to recreate the conditions (flickering candlelight, inadequate ventilation, smallpox) that might have accompanied reading them in the 18th century.

There is no preservation without loss. Archivists know this better than anyone. Emulation and hardware conservation, as well as other approaches—even printing out the source code—all have their partisans and proponents. Each entails trade-offs and compromises, and there will never be a one-size-fits-all solution. But one thing I’ve learned in my travels in software preservation is that the most important issues are not technical; they are social and cultural. Many early computer magazines in fact preserved software precisely by printing the source code—which readers could then manually transcribe—but the Library of Congress routinely discarded such magazines as mere ephemera before around 1990. That was a policy decision based on perceived value, not technical considerations. Whatever the details of the technical challenges and solutions for software preservation may turn out to be, first and foremost must be our human desire to harvest historical memory and understanding wherever it may lie.

At the Library of Congress meeting, a representative from GitHub, the massive open-source online repository, made the connection to such ideals all but explicit, declaring the software culture on the Web the new cultural canon and invoking Emerson, Beowulf, and Adam Smith. Rachel Donahue, an information studies doctoral student at the University of Maryland, suggested (only partially in jest) that sentient machines might one day demand a record of their computational past. One preserves software not because its value to the future is obvious, but because its value cannot be known.

Near the end of the meeting Lynch was called upon to give a summary of the proceedings. He pointed out that “software” was a far broader category than had even been discussed, with implications for everything from kitchen appliances to Boeing 777s. He then paused and wondered whether perhaps the Library of Congress ought to be compiling canonical lists of the most culturally significant computer software, just as it now does for books and movies. Such lists are powerful collective motivators, spurring discussion and argument. Could one be made for software? If so, what should be on it?